Optimizing storage

It’s a cruel irony that at a time when there are more ways to monetize content than ever before, keeping digital assets accessible to production teams is only becoming more challenging. Much of the challenge comes from providing enough storage capacity, starting right at capture. Live events now shoot with more cameras, cameras are less likely to be shut off between takes, and higher-resolution formats are filling up ingest systems faster than you can say 8K 120fps.

The heavy storage consumption continues through the workflow with transcoders spitting out more distribution formats for more connected devices, while content owners create more second-screen content for both live and on-demand markets. The result: Content owners are straining to keep rapidly growing digital assets accessible to their content creators as well as consumers. Digital libraries are growing exponentially to the petabyte level and beyond.

The all-too-common strategy is to store as much content on high-performance storage as budgets allow, and then move older content to offline tape archives as storage fills. In many facilities, unused raw footage is simply deleted after the project is complete. These are risky strategies given the new avenues for monetizing content, such as content reuse to shorten production time in new works and distribution on new platforms. Content needs to be readily available to production teams to be effective; otherwise, it’s a waste of storage space to save content that’s unlikely to ever be used.

With the right storage architecture, content owners can capitalize on enormous revenue opportunities without requiring budgets that scale at the same rate as the content. Content owners need a storage solution that provides disk-speed access from multiple locations, strongly protects content against data loss, scales indefinitely and stays within slim budgets. That’s where object storage comes in.

Hitting the limits of RAID

Most disk storage systems available today are built with RAID. RAID uses checksums or mirroring to protect data and spreads the data and checksums across a group of disks referred to as a RAID array. Using the multi-terabyte disk drives now available, it’s possible to manage data sets on a single, consistent logical RAID array of four to 12 drives with a total usable capacity of about 30TB.

The growth of data, though, has outpaced the technology of disk drives. Petabyte-sized data sets either require use of disk arrays larger than 12 disks, which increases risk of data loss from hardware failure, or they require dividing data across multiple RAID arrays, which increases the cost and complexity of managing data consistency and integrity across the multiple units.

Long rebuild times for large RAID arrays

Larger disk sizes also lengthen the rebuild time when failures occur. The failure of a 3TB or larger drive can result in increased risk and degraded performance for 24 hours or more while the RAID array rebuilds the lost data onto a replacement drive.
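As a rough sanity check on that figure, rebuild time can never be shorter than the drive's capacity divided by the sustained rebuild rate. The sketch below assumes a rebuild rate of 35 MB/s, which is an illustrative number chosen for this example and not a vendor specification. Real rates depend on the controller and on how busy the array is.

```python
def rebuild_hours(drive_tb: float, rebuild_mb_per_s: float) -> float:
    """Lower bound on rebuild time in hours: capacity / sustained rebuild rate."""
    drive_mb = drive_tb * 1_000_000  # decimal terabytes to megabytes
    return drive_mb / rebuild_mb_per_s / 3600


# Assumed rebuild rate (hypothetical, for illustration only).
ASSUMED_RATE_MB_PER_S = 35

for tb in (3, 6, 12):
    hours = rebuild_hours(tb, ASSUMED_RATE_MB_PER_S)
    print(f"{tb} TB drive: at least {hours:.0f} hours to rebuild")
```

Under this assumption a 3TB drive lands close to the 24-hour mark, and the time grows in direct proportion to drive capacity.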

Dual- and triple-parity RAID can mitigate this risk of data loss, but the extra parity is costly and reduces RAID performance, so it becomes less attractive as the storage scales.

Painful migrations with RAID upgrades

RAID requires disks in an array to have a consistent size and layout. Storage upgrades to denser disks typically require building a new RAID array and migrating data from old to new data volumes. These upgrades can require significant coordination and downtime.

RAID also requires all the disks to be local. Without replication, RAID offers limited protection against node-level failures, and no protection against site-level disasters.

Replication increases protection, but decreases efficiency

Replication improves data integrity, recoverability and accessibility, but it reduces the usable storage space and introduces new operational complexities. With replication, files are copied to a distant secondary location for disaster recovery or to improve data access. This doubles the cost of storage and requires expensive high-bandwidth networking between the sites.

Object storage for scalability and flexibility

Where traditional storage systems organize data in a hierarchy of folders and files mapped to blocks on disk, object storage offers a fundamentally different approach by presenting a namespace of simple key and value pairs.

By using a flat namespace and abstracting the data addressing from the physical storage, object storage systems offer more flexibility in how and where data is stored and preserved. Because object storage leverages the scale-out capabilities of IP networks, this addressing allows digital data sets to scale indefinitely.
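The flat key-and-value model can be pictured with a toy in-memory store. This is a minimal sketch, not any product's API: the class name `FlatObjectStore` and its methods are hypothetical, and a real object store would sit behind a network interface and decide for itself where the bytes physically live.

```python
import uuid


class FlatObjectStore:
    """Toy stand-in for an object store: one flat namespace of ID -> bytes."""

    def __init__(self) -> None:
        self._objects: dict[str, bytes] = {}

    def put(self, data: bytes) -> str:
        """Store the data and return an opaque object ID chosen by the store."""
        object_id = uuid.uuid4().hex
        self._objects[object_id] = data
        return object_id

    def get(self, object_id: str) -> bytes:
        """Return the bytes stored under the given ID."""
        return self._objects[object_id]

    def delete(self, object_id: str) -> None:
        """Remove the object stored under the given ID."""
        del self._objects[object_id]


store = FlatObjectStore()
clip_id = store.put(b"raw footage bytes")
print(clip_id)             # an opaque ID with no folders or path in it
print(store.get(clip_id))  # the original bytes
```

Nothing in the ID says which disk, node or site holds the data, which is what leaves the system free to place and move it.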

Erasure codes protect data efficiently

Object storage uses erasure code algorithms developed decades ago to ensure transmission integrity of streaming data for space communications. Where RAID slices data into a fixed number of data blocks and checksums and writes each chunk or checksum onto an independent disk in the array, erasure coding transforms data objects into a series of codes.

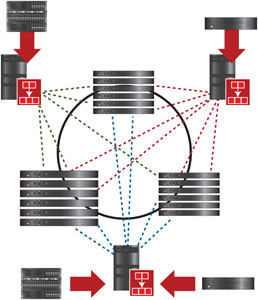

Figure 1. Erasure coding breaks data into codes and distributes them to multiple independent nodes, disks or sites.

Each code contains the equivalent of both data and checksum redundancy. The codes are then dispersed across a large pool of storage devices, which can be independent disks, independent network-attached storage nodes or any other storage medium. While each of the codes is unique, a random subset of the codes can be used to retrieve the data. (See Figure 1.)
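The "any sufficiently large subset" property can be demonstrated with a toy code. The sketch below is a Reed-Solomon-style polynomial scheme over the integers modulo 257, chosen for brevity. It is an assumption made for illustration, not the algorithm used by any particular object storage product, and the function names are hypothetical. Production systems use optimized codes over much larger blocks.

```python
import random

P = 257  # smallest prime above 255, so every byte value is a valid field element


def _interpolate(points, x):
    """Evaluate at x the unique polynomial through `points`, arithmetic mod P."""
    total = 0
    for xi, yi in points:
        num, den = 1, 1
        for xj, _ in points:
            if xj != xi:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        # pow(den, -1, P) is the modular inverse (Python 3.8+)
        total = (total + yi * num * pow(den, -1, P)) % P
    return total


def encode(data: bytes, n: int):
    """Turn k = len(data) bytes into n codes.

    The data bytes define a polynomial at x = 0..k-1; the codes are its
    values at x = k..k+n-1, so no code is a plain copy of a data byte.
    """
    k = len(data)
    if k + n > P:
        raise ValueError("toy field too small for this k and n")
    points = list(enumerate(data))
    return [(x, _interpolate(points, x)) for x in range(k, k + n)]


def decode(codes, k: int) -> bytes:
    """Rebuild the k original bytes from any k distinct codes."""
    if len(codes) < k:
        raise ValueError("not enough codes to decode")
    points = codes[:k]
    return bytes(_interpolate(points, x) for x in range(k))


data = b"clip"
codes = encode(data, n=6)             # six codes for four bytes of data
survivors = random.sample(codes, k=4) # lose any two codes at random
assert decode(survivors, k=len(data)) == data
```

Each code is a different value of the same polynomial, so which codes survive does not matter, only how many.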

A wider range of protection policies

Data protection with erasure codes is expressed as a durability policy, a ratio of two numbers: the minimum number of codes over which the data is dispersed, and the maximum number of codes that can be lost without losing data integrity.

For example, with a durability policy of 20/4, the object storage will encode each object into 20 unique codes and distribute those codes over 20 storage nodes, often from a pool of a hundred or more nodes. Since the object storage only requires 16 codes to decode the original object, data is still accessible after loss of four of the 20 nodes.
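The arithmetic behind a policy such as 20/4 is easy to check. The sketch below models a policy as two integers. The class and field names (`DurabilityPolicy`, `spread`, `tolerated`) are hypothetical labels for this example, not terms from any product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DurabilityPolicy:
    """Hypothetical model of a spread/tolerated policy such as 20/4."""
    spread: int     # codes written per object (the 20 in 20/4)
    tolerated: int  # codes that can be lost without losing the object (the 4)

    def __post_init__(self) -> None:
        if not 0 <= self.tolerated < self.spread:
            raise ValueError("need 0 <= tolerated < spread")

    @property
    def required(self) -> int:
        """Codes needed to decode an object."""
        return self.spread - self.tolerated

    @property
    def overhead(self) -> float:
        """Raw capacity consumed per unit of usable capacity."""
        return self.spread / self.required

    def readable_after(self, lost: int) -> bool:
        """True if an object survives the loss of `lost` of its codes."""
        return lost <= self.tolerated


policy = DurabilityPolicy(spread=20, tolerated=4)
print(policy.required)           # 16
print(policy.overhead)           # 1.25
print(policy.readable_after(4))  # True
print(policy.readable_after(5))  # False
```

On these numbers, 1PB of content consumes about 1.25PB of raw capacity, compared with 2PB when a full second copy is kept through replication.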

Easier migration to new storage technologies

Figure 2. Object storage can spread data across multiple sites for content sharing and for recovery options in case of site failure.

The erasure code algorithms that spread the data to protect it also make upgrading to new storage technologies simpler. When drives fail, the object storage redistributes the protection codes without having to replace disks and without degrading user performance. When administrators do replace drives, they can be in different nodes and of different sizes.

Easily dispersed geographically

Unlike RAID, codes can be spread across geographically dispersed sites without replication, allowing erasure coding to protect from disk, node, rack or even site failures all on the same scalable system. The algorithms can also apply different durability policies within the same object storage so that critical data can be given greater data protection without segregating it at the hardware level. (See Figure 2.)

Accessing data in object storage

Object storage relies on unique IDs to address data, relying heavily on applications to maintain the map between IDs and the data files that they represent. This makes data sharing across applications very difficult unless they are specifically written to share the same object ID map. Users can’t directly access data by navigating through a familiar file and folder structure; they can only access data through applications that maintain the object ID map.
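The sharing problem is easier to see in miniature. In the sketch below, a plain dictionary stands in for the object storage and `AssetCatalog` is a hypothetical application-side index. Both are assumptions for illustration, as are the file name and application names.

```python
import uuid

object_store = {}  # stand-in for the object storage: opaque ID -> bytes


class AssetCatalog:
    """Hypothetical per-application index: human-readable name -> object ID."""

    def __init__(self, store):
        self._store = store
        self._ids = {}

    def save(self, name, data):
        """Write the data under a new opaque ID and remember the name for it."""
        object_id = uuid.uuid4().hex
        self._store[object_id] = data
        self._ids[name] = object_id
        return object_id

    def load(self, name):
        """Look up the ID for a name, then fetch the bytes from the store."""
        return self._store[self._ids[name]]


editing_app = AssetCatalog(object_store)
editing_app.save("promos/spring_teaser.mov", b"...")

other_app = AssetCatalog(object_store)  # same storage, separate ID map
try:
    other_app.load("promos/spring_teaser.mov")
except KeyError:
    print("other_app has no ID for that name, although the bytes are in the store")
```

The second application can reach the same storage, but without the first application's map it has no way to find the object.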

Object storage with shared application object and file access

More sophisticated object storage can be shared between file system-based clients and applications that are engineered specifically to use object storage. This not only allows the storage pool to be shared across architectures, but also lets workflow applications such as media asset management systems, which use traditional file system access to share data, work alongside applications written specifically for HTTP-based object storage access. The result is the widest range of ways to use data across the organization.

Object storage for digital libraries

Because object storage is scalable, secure and cost-effective, allowing content to be accessible at disk access speeds from multiple locations, it’s ideal for digital libraries and other shared content repositories.

Object storage for active archive

For online archives with disk-speed access, object storage can be integrated directly into a media asset manager or other digital library application through its HTTP-based cloud interface. Alternatively, it can be deployed with a file system layer that allows both application and direct user access through the familiar file and directory structure.

Regardless of the access method, object storage gives workflow applications and production teams lower latency and more predictable restore times than tape archives. With quicker and more direct access, production teams can easily reach digital assets to monetize content or make content available to distribution partners and subscribers.

Object storage with policy-based tiered storage

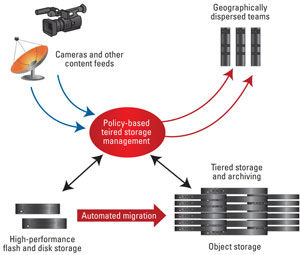

Policy-based storage strategies use automation to migrate data between tiers of storage based on the data’s performance and access requirements, from high-performance disk storage to long-term storage like object storage. To simplify the process, policies can be defined to automate migration based on a file’s last access date, type, size or location. (See Figure 3.)

Figure 3. For broadcasters, object storage provides economical storage of large-scale, long-term, distributed content repositories.

Because it offers disk-speed access, object storage deployed as an active archive tier in a multi-tier storage environment can allow broadcast facilities to significantly reduce their primary storage capacities. In some cases, media workflows have been set up to successfully migrate content after 10 days since last access, with the assurance that migrated content can be quickly accessed from the active archive.
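A migration policy of this kind amounts to a filter over file metadata. The sketch below is one way to express it, assuming hypothetical names (`FileInfo`, `MigrationPolicy`, `select_for_archive`), made-up file paths and extensions, and a 10-day idle threshold taken from the example above. A real storage manager would read this metadata from the file system and perform the copy itself.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class FileInfo:
    path: str
    size_bytes: int
    last_access: datetime


@dataclass(frozen=True)
class MigrationPolicy:
    """Hypothetical policy: every condition must hold for a file to migrate."""
    min_idle: timedelta = timedelta(days=10)  # time since last access
    extensions: tuple = (".mxf", ".mov")      # file types covered
    min_size_bytes: int = 0                   # ignore files smaller than this
    path_prefix: str = "/"                    # restrict to one location

    def matches(self, f: FileInfo, now: datetime) -> bool:
        return (
            now - f.last_access >= self.min_idle
            and f.path.lower().endswith(self.extensions)
            and f.size_bytes >= self.min_size_bytes
            and f.path.startswith(self.path_prefix)
        )


def select_for_archive(files, policy, now):
    """Return the files the policy would move to the archive tier."""
    return [f for f in files if policy.matches(f, now)]


now = datetime(2024, 1, 31)
files = [
    FileInfo("/ingest/game1_cam3.mxf", 80_000_000_000, datetime(2024, 1, 5)),
    FileInfo("/ingest/game7_cam1.mov", 60_000_000_000, datetime(2024, 1, 28)),
    FileInfo("/ingest/notes.txt", 2_000, datetime(2023, 12, 1)),
]
for f in select_for_archive(files, MigrationPolicy(), now):
    print(f.path)  # only the clip idle for 10 days or more qualifies
```

Tightening or loosening the policy is a matter of changing its fields, for example raising `min_size_bytes` so that small sidecar files stay on primary storage.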

Object storage for archive on ingest

Object storage is ideal for preserving raw footage on ingest. With a policy-based storage manager, broadcasters can stream live video onto a traditional high-performance file system, with those files automatically copied to an object storage system for long-term preservation. This process can be completed while keeping the content accessible on high-performance storage, whether disk or flash.

Workflow applications such as media asset management systems can use existing capabilities, including timecode-based data retrieval. At the same time, new applications can be built to directly leverage the data from the HTTP-based object storage, such as a website that gives consumers direct access to archived broadcast video. This opens new possibilities to monetize content.

Conclusion

The challenge of maintaining growing digital content libraries is one that every broadcaster is facing now or will face in the near future. There are more opportunities for monetizing content, and consumers are demanding content everywhere, anytime and on any device. This will strain current workflows and can hinder facilities’ ability to compete. The typical approach of deploying more primary online storage and archiving to slow digital tape libraries just does not scale into petabytes today and exabytes in the future. And using RAID disk for near-line storage is a short-term fix that comes with a heavy ongoing cost and serious risk at petabyte scale.

Object storage is new to broadcasters. However, it has been deployed in the communications industry for years and forms the backbone of the cloud. By combining fast near-line and secure long-term archive capabilities with geographical dispersion, it’s ideally suited to meet the needs of broadcasters both now and 20 years from now. Object storage can help you reduce your primary storage requirements today by reserving high-performance disk storage for active works in progress, and it can eliminate periodic and costly content migrations to new storage technologies in the future.

—Janet Lafleur is Quantum’s senior product marketing manager for StorNext.