The classic definition of archiving has several connotations that have meaning in the context of media systems. One connotation is to place in a secure place for preservation. Another is to remove from common use and conserve for future use. Both are particularly appropriate for our industry.

Before the advent of digital video storage, archiving had a more classical meaning. Analog recordings, on film and video, were placed in a library in much the same way as books are placed on shelves with a card catalog identifying the contents of the stacks. When a library got too full, some material was removed and stored off-site in what might be properly viewed as an archive.

These analog, physical archives had limited shelf life, defined by the medium on which the content was stored. Old nitrate-based film archives, like the Fox/Movietone news library, eventually had to be transferred to other media to make continued conservation of the content physically viable. Such “archives” held enormously valuable content, preserving defining events in history as well as the artistic output of film and video professionals from the early days of the media industry. Fortunately, much of broadcast and film history has been protected, though some important content has been lost along the way due to neglect and a lack of emphasis on proper conservation and archiving.

The more modern context for archiving revolves around the digital equivalent of that quite physical process. It is about preserving the “representation” of the content as, in reality, the content itself has been turned into a sequence of bits that only replicate the original experience when reassembled in a display process. This might make modern conservation easier in some ways, but it means archiving is much more ethereal and the risk of loss of content is higher. For instance, it is difficult to envision the archivist in 3003 retrieving the contents of a digital videotape library, considering the dizzying array of digital video recorders, computer disk readers, and other highly mechanical devices required to create and maintain such a library.

SMPTE and other organizations have long tried to define archival storage in the digital age. I once worked on a proposal for converting a major still image library to digital form for the purpose of preserving the content in a form that could be electronically migrated to new media in successive generations of digital archival storage. The concept is easy: Make bits now and you can forever migrate those bits to new media before either the old media physically deteriorates or the hardware needed to play it back disappears. Any claim that digital content is inherently stable is specious. The goal has to be to move the content before the machines and operating systems are no longer available.

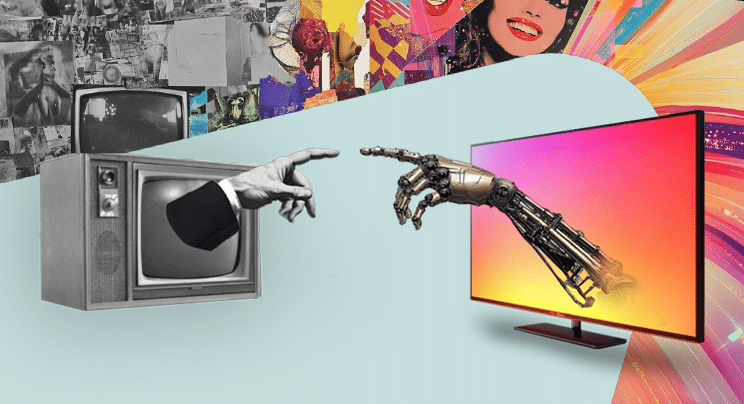

Figure 1. Storage systems usually take a hierarchical form, with media that needs to be accessed quickly held close to the point of use.

Great standards work has been done by the EBU, SMPTE, ITU and others on file formats that hold the promise of making the future interpretation of the content a much less painful process. Today, we must look at storage as inherently hierarchical, with faster-access media held close to the use or display point, and media with less time sensitivity and higher storage volume stored at any appropriate distance. (See Figure 1.)

As the need to access media quickly increases, it is naturally moved closer to facilitate random access at the speed required. As the need becomes less immediate, it can be moved farther away, and the access time can be much longer. Transfer may even be far slower than real time, since local storage can buffer the content and make the delay transparent to the user. The key is to know what you need before you need it, which is the job of software akin to a digital librarian. Such software understands what is stored, where it is stored, and how to get it in the most efficient way.
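To make the librarian idea concrete, here is a minimal Python sketch. It is an illustration only: the tier names, the `Catalog` class and its `store`, `locate` and `prefetch` methods are hypothetical and not drawn from any real archive product. It shows a catalog that knows which tiers hold each item, answers requests from the fastest tier available, and can stage an item one tier closer before it is needed.

```python
from dataclasses import dataclass, field

# Hypothetical tiers, ordered from closest (fastest) to farthest (slowest).
TIERS = ["local_disk", "near_line", "robotic_library"]


@dataclass
class Catalog:
    """Toy 'digital librarian': records which tiers hold a copy of each asset."""
    locations: dict = field(default_factory=dict)  # asset_id -> set of tier names

    def store(self, asset_id: str, tier: str) -> None:
        """Record that a copy of the asset now exists on the given tier."""
        if tier not in TIERS:
            raise ValueError(f"unknown tier: {tier}")
        self.locations.setdefault(asset_id, set()).add(tier)

    def locate(self, asset_id: str) -> str:
        """Return the fastest tier that currently holds the asset."""
        held = self.locations.get(asset_id)
        if not held:
            raise KeyError(asset_id)
        return min(held, key=TIERS.index)

    def prefetch(self, asset_id: str) -> str:
        """Stage a copy one tier closer to the point of use, if possible."""
        idx = TIERS.index(self.locate(asset_id))
        if idx > 0:
            self.store(asset_id, TIERS[idx - 1])
        return self.locate(asset_id)


catalog = Catalog()
catalog.store("spot-001", "robotic_library")
print(catalog.locate("spot-001"))    # robotic_library
print(catalog.prefetch("spot-001"))  # near_line
```

A real system would move the data as well as update the index, and would decide when to prefetch from a playlist or schedule. The sketch only captures the bookkeeping.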

Systems that provide these archival storage functions can vary quite a bit in type and scale. The most obvious class is robotic librarians holding digital data tapes, DVD-ROMs or other digital media. Each has its strengths. In general, DVDs are slower and hold less than data tape, but they are less expensive, which may make them more appropriate for smaller-scale needs at low cost. With blue laser disks coming soon, DVDs will become more effective in medium-size installations. DVD archives may, for instance, be more appropriate for a news archive, or deep archive for spots during a station installation.

Tape archives can vary from desktop- to room-sized, with large installations encompassing multiple cabinets, each with multiple drives. Having multiple drives allows simultaneous read and write functions (not always needed if traffic is low), as well as the ability to “clone” content when the physical media begins to show increasing error rates. With high-bandwidth needs (that is to say, many read or write operations potentially needed at the same time), multiple drives may be inescapable. A deep archive of critical content may contain more than one instance of each item if the content is particularly valuable. Having more than one copy of each item would also prove useful if multiple items are stored on each tape and you need to access two items on the same tape at the same time.
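The cloning decision described above can be sketched as a simple rule in Python. This is an assumption-laden illustration, not a vendor algorithm: the function name `needs_cloning`, the idea of a per-tape history of error-rate readings, and the threshold and window values are all hypothetical. It flags a tape when its most recent readings rise steadily and the latest one exceeds a chosen limit.

```python
def needs_cloning(error_rates, threshold, window=3):
    """Flag a tape for cloning.

    error_rates: chronological error-rate readings for one tape (hypothetical
                 values, e.g. as reported by the drive after each read).
    threshold:   illustrative limit the latest reading must exceed.
    window:      number of most recent readings that must be strictly rising.
    """
    recent = error_rates[-window:]
    if len(recent) < window:
        return False  # not enough history to call it a trend
    rising = all(a < b for a, b in zip(recent, recent[1:]))
    return rising and recent[-1] > threshold


history = [1e-7, 2e-7, 5e-7, 2e-6]
print(needs_cloning(history, threshold=1e-6))  # True
```

With a second drive available, a tape flagged this way can be copied to fresh media while the first drive continues to serve normal traffic.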

In the last couple of years an intermediate class of storage systems has become important. One might think of them as near-line storage. If the disks attached to your video server network are viewed as local storage, then this additional tier is slower to access, uses less expensive disks (JBOD, perhaps) and transfers only at lower bandwidth. In exchange, it provides cheap mass storage that can buffer the deep archive effectively, offering a cost-effective way to put many terabytes online.

This strategy aligns well with the general IT industry, and is particularly suitable in our industry where media has high value and everyone wants to be able to access the maximum amount of content in the minimum time. John Watkinson once spoke at a SMPTE meeting about the perception of storage in our industry. To paraphrase his comments, it does not matter that you can put your hands on the media. What matters is that you ask for media to play back and it does. You don't care where the actual bits are drawn from, only that your request is fulfilled. Unless you must carry the media somewhere by Nike Net to be played back, it doesn't even matter if the first 10 percent plays back from RAM, 70 percent plays from fast local disk, 10 percent from the near-line disk archive, and the last 10 percent from a robot 200 miles away. If the bits all arrive at the decoder in time to make a seamless presentation, then all is right with the universe, and the archive has done its job. This article was written in a fragmented file system accessed in a nonlinear manner. What mattered is that my e-mail to the publisher contained all of the content in the right order.
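The "bits arrive in time" condition can be checked with a little arithmetic, sketched here in Python. The model is a simplification and every number in it is an assumption: all fetches start in parallel when playback starts, each tier has a fixed startup latency, and each delivers at a constant multiple of real time. Under that model a segment plays seamlessly if both its first and its last bit arrive no later than the moment they are needed. The `Segment` class, `is_seamless` function, latencies and speeds are hypothetical; only the 10/70/10/10 split comes from the paragraph above.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    tier: str
    fraction: float   # share of the clip served from this tier
    latency_s: float  # assumed delay before the first bit arrives
    speed: float      # assumed transfer rate as a multiple of real time (> 0)


def is_seamless(segments, duration_s):
    """True if every segment's bits arrive no later than they are played.

    Assumes playback and all fetches start together at t = 0.
    """
    start = 0.0  # playback time at which the current segment begins
    for seg in segments:
        length = seg.fraction * duration_s
        first_bit_on_time = seg.latency_s <= start
        last_bit_on_time = seg.latency_s + length / seg.speed <= start + length
        if not (first_bit_on_time and last_bit_on_time):
            return False
        start += length
    return True


# The 10/70/10/10 split, with illustrative latencies and speeds.
plan = [
    Segment("RAM", 0.10, latency_s=0.0, speed=100.0),
    Segment("local disk", 0.70, latency_s=0.05, speed=4.0),
    Segment("near-line", 0.10, latency_s=5.0, speed=1.5),
    Segment("remote robot", 0.10, latency_s=120.0, speed=0.5),
]

print(is_seamless(plan, duration_s=600))  # True: a 10-minute clip hides the robot's delay
print(is_seamless(plan, duration_s=30))   # False: a 30-second spot ends before the robot delivers
```

Note that the remote robot in this example delivers at half of real time and the long clip still plays cleanly, because the faster tiers cover the first nine minutes while the slow transfer completes in the background.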

Though not the subject of this article, a word must be said about the “digital librarian,” or archive manager. This is a layer of middleware that sits between the physical drives (generally robots) and the “decision layer,” which might be an automation system, media asset manager (MAM), integrated newsroom software or other product. The archive manager keeps the card index and moves the content to and from the shelves. It serves many purposes, and without it archiving could become a largely manual process. Indeed, many systems cannot work without this important piece of the puzzle.

John Luff is senior vice president of business development for AZCAR. To reach him, visit www.azcar.com.

Send questions and comments to: john_luff@primediabusiness.com