How to take advantage of wireless opportunities

The foundation of broadcasting is wireless transmission — a fact often taken for granted. We’ve covered many aspects of broadcast transmission systems in this column, including characteristics of ATSC, DVB, ISDB and DTMB, all usually employed for high-power, wide-area broadcast. But wireless systems, at lower powers and reaching a more local footprint, are starting to encroach on the legacy broadcast trade. This month, we’ll look at some technologies that broadcasters should better understand.

White space and Wi-Fi

Broadcasters can use new wireless technologies today. With the advent of global white spaces initiatives, creative businesses are starting to develop new uses for the spectrum — and that represents an opportunity for broadcasters to expand their capacity, too.

We’re already seeing proposals for low-power devices that wirelessly transmit compressed video to receivers, using existing transmission standards like ATSC and DVB, and in a form that essentially takes low-power TV (LPTV) and scales it down in power. One such device is aimed at providing video to digital signage in shopping malls. Although using this form of transmission to blanket wide areas could be problematic from a self-interference and channel availability standpoint, it nonetheless opens up new possibilities for distributing content to a geographically targeted audience.

Wi-Fi is being used at a growing number of locations outside the consumer home. As such, it represents a spectrum resource that should be considered for content distribution. Initiatives like HbbTV suggest that there can be an interoperable link between Wi-Fi and OTA broadcast. But Wi-Fi is a shared medium, and it represents a limited resource that will break down given a sufficiently large number of users. Also, an underlying concern with Wi-Fi is always quality of service (QoS) — i.e., how reliably, and in what numbers, can users be supported? Computer simulations can help predict bandwidth, while system emulation (trial runs) can test networks already deployed.

Ideally, the various Wi-Fi standards support a data rate, to a single user over a group of channels, that runs from about 33Mb/s using 802.11b to about 216Mb/s using 802.11g, and about 1.2Gb/s using 802.11a or 802.11n. But actual throughput over a Wi-Fi radio can be less than 55 percent of that nominal rate, due to sensing time and protocol overhead. With a large number of users, connection “collisions” cause the throughput per user to decrease exponentially, so each added user yields diminishing returns. A similar problem occurs when connections are first established and negotiated. A Wi-Fi connection is established by a handshaking process whereby the wireless access points (WAPs) send out a beacon that the client stations pick up; this usually includes a Service Set Identifier (SSID) so that the clients can select the right access point. With many users connecting simultaneously to a live event at a pool of WAPs, a massively parallel first connection can swamp a system.
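To get a feel for how quickly per-user throughput can erode, here is a rough sketch in Python. It is a toy model, not the actual 802.11 contention mechanism: it assumes the usable rate is 55 percent of nominal, that users share it equally, and that each other station collides with a given transmission with a fixed, hypothetical probability (`collision_prob`).

```python
def per_user_throughput(nominal_mbps, users, efficiency=0.55, collision_prob=0.02):
    """Estimate per-user throughput in Mb/s under a toy contention model.

    efficiency and collision_prob are illustrative assumptions, not
    values taken from any Wi-Fi standard.
    """
    if users < 1:
        raise ValueError("users must be at least 1")
    # Usable rate after sensing time and protocol overhead.
    usable = nominal_mbps * efficiency
    # Chance that none of the other stations collides with a transmission.
    clear_channel = (1.0 - collision_prob) ** (users - 1)
    # Equal share of the usable rate, scaled by the collision-free fraction.
    return usable / users * clear_channel


if __name__ == "__main__":
    for n in (1, 10, 50, 200):
        print(n, "users:", round(per_user_throughput(216, n), 3), "Mb/s each")
```

Even with these generous assumptions, the per-user figure falls off much faster than a simple equal split would suggest once the user count climbs.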

Once a connection is established, the access point and the client station continuously send messages back and forth that control the connection; if packets are dropped due to interference, the client stations can ask for a retransmission or else ignore the dropped packets. In the first case, retransmissions will increase latency and thus result in a lower throughput, which can be offset by using higher compression of the audio/video content. In the latter case, lost packets will cause video and audio decoder errors that can be concealed or corrected to some extent. Either situation, of course, impacts the quality of the presented content.
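The cost of retransmission can be put into rough numbers. The sketch below assumes that packet losses are independent and that every lost packet is resent until it arrives; real links rarely behave this neatly, and the function names are illustrative only.

```python
def expected_transmissions(loss_prob):
    """Average number of sends per delivered packet, assuming independent losses."""
    if not 0.0 <= loss_prob < 1.0:
        raise ValueError("loss_prob must be in [0, 1)")
    return 1.0 / (1.0 - loss_prob)


def goodput_with_retransmission(link_mbps, loss_prob):
    """Useful throughput in Mb/s when every lost packet is resent until received."""
    return link_mbps / expected_transmissions(loss_prob)


if __name__ == "__main__":
    # A 10Mb/s link losing one packet in five delivers about 8Mb/s of content.
    print(goodput_with_retransmission(10.0, 0.2))
```

Under these assumptions, content encoded for the full link rate would need its bit rate cut by the same fraction as the loss rate to keep up, which is where higher compression comes in.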

One way to circumvent these issues is to use Wi-Fi broadcast, a technique being explored by some companies, where all client stations “listen” to a common broadcast. Here, the handshaking is conducted between a particular access point and the “weakest link,” so that all other listening stations get a connection that is always equal to or better than the handshaking connection. The trick here is in figuring out which client station should have that status, since one with too weak a signal would slow all the others down.
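One simple way to think about choosing the "weakest link" is to set a signal-strength floor and pick the weakest client that still clears it. The sketch below is hypothetical: the -80dBm floor and the function name are assumptions for illustration, not how any particular vendor does it.

```python
def pick_reference_client(rssi_dbm, floor_dbm=-80.0):
    """Return the weakest client at or above the signal floor, or None.

    rssi_dbm maps a client name to its received signal strength in dBm.
    Clients below floor_dbm are excluded so they cannot slow everyone down.
    """
    eligible = {client: rssi for client, rssi in rssi_dbm.items() if rssi >= floor_dbm}
    if not eligible:
        return None
    # The weakest eligible client sets the rate for the shared broadcast.
    return min(eligible, key=eligible.get)


if __name__ == "__main__":
    clients = {"tablet": -50.0, "phone": -75.0, "laptop": -90.0}
    print(pick_reference_client(clients))
```

Here the laptop at -90dBm is left out, and the phone becomes the handshaking reference. Where to set the floor is exactly the tradeoff described above: set it too low and everyone slows down, too high and marginal users are dropped.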

Other methods to increase the number of users include load balancing, where the network dynamically adjusts the channel assignment and priority for different WAPs, depending on usage. Because wireless users are expected to be mobile, a sophisticated scheme for handoff between multiple WAPs is required, similar to the schemes used for cellular networks.
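In its simplest form, load balancing can mean steering each new client to the least-busy access point it can hear. The following sketch shows only that much; it ignores channel assignment, priority and handoff, and the names are hypothetical.

```python
def assign_client(wap_loads, in_range):
    """Attach a new client to the least-loaded WAP it can hear.

    wap_loads maps a WAP name to its current client count and is updated
    in place. in_range lists the WAPs the client can receive.
    Returns the chosen WAP name, or None if no known WAP is in range.
    """
    candidates = [wap for wap in in_range if wap in wap_loads]
    if not candidates:
        return None
    best = min(candidates, key=lambda wap: wap_loads[wap])
    wap_loads[best] += 1
    return best


if __name__ == "__main__":
    loads = {"wap1": 12, "wap2": 4, "wap3": 9}
    # The client cannot hear wap2, so it lands on wap3 rather than wap1.
    print(assign_client(loads, ["wap1", "wap3"]), loads)
```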

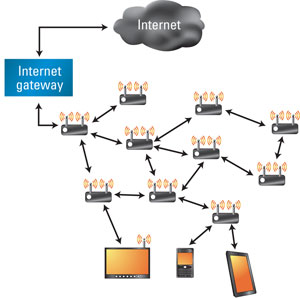

Figure 1. Mesh networks can improve throughput and deliver a higher QoS to users, but with a higher degree of complexity — and cost.

Wi-Fi networks covering an area larger than that of any one WAP can rely on various network technologies to provide an expected level of QoS. Typically, however, such networks grow in a rather haphazard way, dictated by local cabling constraints, and operate inefficiently as a result.

Mesh networks, such as the one shown in Figure 1, can improve this situation. A mesh network is a local or wide area network (M-LAN or M-WAN) where each node (station or other device) is connected directly to several (or all) of the others. Mesh networks have the advantage that they can be self-configuring and self-healing; if a node breaks down or is overloaded, traffic can be re-routed to other nodes. In some applications, the nodes themselves can act as wireless repeaters, essentially an extension of the wireless hotspot functionality. The tradeoff, however, is in higher system complexity — and cost.
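The self-healing idea can be illustrated with a small route finder that simply steers around failed nodes. This is a minimal sketch using a breadth-first search over a made-up four-node mesh; real mesh protocols use more elaborate routing metrics, and the node names here are hypothetical.

```python
from collections import deque


def find_route(links, src, dst, failed=frozenset()):
    """Return the shortest hop path from src to dst that avoids failed nodes.

    links maps each node to the list of nodes it connects to directly.
    Returns a list of node names, or None if no route survives.
    """
    if src in failed or dst in failed:
        return None
    queue = deque([[src]])
    seen = {src}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == dst:
            return path
        for neighbor in links.get(node, ()):
            if neighbor not in seen and neighbor not in failed:
                seen.add(neighbor)
                queue.append(path + [neighbor])
    return None


# Hypothetical mesh: A and D each reach the other through B or C.
MESH = {"A": ["B", "C"], "B": ["A", "D"], "C": ["A", "D"], "D": ["B", "C"]}

if __name__ == "__main__":
    print(find_route(MESH, "A", "D"))                # normal route via B
    print(find_route(MESH, "A", "D", failed={"B"}))  # re-routed via C
```

When node B fails, traffic from A to D moves to the path through C with no manual reconfiguration, which is the behavior that makes a mesh attractive despite its added complexity.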

3G/4G, and possibly 5G

Cellular providers are now planning to deploy evolved multimedia broadcast multicast service (eMBMS), a technology that mobile carriers believe can efficiently deliver video content by sending it to a large number of subscribers at the same time, essentially broadcasting over the cellular radio spectrum. The challenge for these operators is in upgrading their networks to handle the increased traffic, including the backhaul between cells and central hubs. It’s also not clear that all existing receivers can handle the new protocols, meaning consumer device upgrades could be necessary.

Cellular networks have a more fundamental dilemma: Although 4G-LTE offers up to 20 times the bandwidth of 3G networks, some analysts estimate that by 2015, when LTE networks are fully built out, mobile data traffic will have grown by the same factor, rendering the networks obsolete before they are fully deployed. This forms one oft-quoted rationale for “freeing up broadcast spectrum,” which by many accounts would only solve the problem temporarily.

In the meantime, companies are scrambling to invent new technologies that can increase bandwidth over wireless networks. Multiple-input-multiple-output (MIMO) technology uses multiple transmitting and receiving antennas, and shows promise as a bandwidth-enhancing transmission technology. It is now being studied by standardization groups on both sides of the Atlantic.

There have been announcements of new systems under development, including one with an early label of 5G, which uses an adaptive antenna array that promises to deliver from 1Gb/s to 10Gb/s of bandwidth wirelessly in the millimeter-wave band. Developers hope to deploy such technologies by 2020.

But the real problem is that consumer use will always expand to fill capacity, much the same as more highways increase the number of drivers. Broadcasters are now challenged to consider new and innovative ways to reach their audience — like combinations of OTA and one-to-one networks — using all spectrum resources that are now available.

—Aldo Cugnini is a consultant in the digital television industry, and a partner in a mobile services company.