Intelligent Data Terms & Tiering

A primer on understanding the lingo

Migrating from on-prem to cloud storage exposes inexperienced users to concepts well outside those they would encounter when managing in-house storage. The most apparent differences are that cloud storage users pay on a per-consumption basis (usually monthly or at some other time-based increment) and by the type of store they send their data into. Both conditions are set by the agreement established with the cloud service provider (Fig. 1).

Definition-wise, cloud storage is a service model whereby data in one or more locations is transmitted to and stored in a remote storage system. Cloud storage components are managed by the cloud storage provider. Responsibilities of the storage provider’s solution include maintenance, backup and availability to the user. The latter, availability, is often stipulated by agreement, sometimes called a contract or service level agreement (SLA).

Storage availability has two components: a time-to-retrieve (recover) data factor and a cost-to-store factor. Usually, these two are tied together. For example, if a user puts data into deep storage and does not depend on that data for routine (daily) applications, then the cost per gigabyte is much lower than for sustained, readily accessible, low-latency/fast-recovery storage applications such as editing, rendering or compositing.
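The pairing of cost-to-store and time-to-retrieve can be sketched in a few lines of Python. The class names, prices and retrieval times below are illustrative assumptions, not any provider's published rates.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StorageClass:
    name: str
    usd_per_gb_month: float  # hypothetical price, not a real rate
    retrieval_hours: float   # hypothetical wait before data is usable


# Illustrative figures only: cheaper storage takes longer to retrieve.
STORAGE_CLASSES = [
    StorageClass("hot", 0.020, 0.0),
    StorageClass("cool", 0.010, 1.0),
    StorageClass("deep", 0.002, 12.0),
]


def monthly_cost(size_gb, storage_class):
    """Cost to hold size_gb for one month in the given class."""
    return size_gb * storage_class.usd_per_gb_month


def cheapest_within(max_wait_hours, classes=STORAGE_CLASSES):
    """Cheapest class whose retrieval time fits the allowed wait."""
    eligible = [c for c in classes if c.retrieval_hours <= max_wait_hours]
    if not eligible:
        raise ValueError("no storage class meets the retrieval requirement")
    return min(eligible, key=lambda c: c.usd_per_gb_month)
```

Under these assumed figures, an editing workload that cannot wait at all lands in the "hot" class, while an archive that can tolerate a day's delay lands in "deep" at a tenth of the per-gigabyte price.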

VIRTUALIZING INFRASTRUCTURE

When storage is based on an infrastructure that includes accessible interfaces, near-instant elasticity and scalability, multitenancy and metered resources, the storage is usually cloud-based and “virtualized.” Metered resources, also known as pay-per-use, are offered with potentially unlimited storage capacity. Commonly found in enterprise-grade IT environments, this model has moved storage from a flat-fee (cost-per-month) world to a pay-for-use fee structure.

A familiar structure for pay-per-use is Apple’s iTunes model, the “sample for free and pay for what you want to ‘own’” (so to speak). Obviously in cloud storage you will not “own” the physical platform that holds your data, but you will pay for what you use based upon its structure, endurance/availability, its accessibility and the length of time you utilize the storage space.
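As a rough illustration of metered versus flat-fee billing, the sketch below prorates a month's bill by the capacity actually held each day. The rate, the 30-day month and the proration method are assumptions for the example; real providers meter in their own ways.

```python
def metered_bill(daily_gb, usd_per_gb_month, days_in_month=30):
    """Pay-per-use: charge for the average capacity held over the month."""
    gb_days = sum(daily_gb)
    return gb_days / days_in_month * usd_per_gb_month


def flat_fee_bill(provisioned_gb, usd_per_gb_month):
    """Flat fee: charge for the provisioned capacity, used or not."""
    return provisioned_gb * usd_per_gb_month


# A project that briefly peaks at 500 GB but mostly holds 100 GB.
usage = [100] * 10 + [500] * 10 + [100] * 10
metered = metered_bill(usage, usd_per_gb_month=0.02)
flat = flat_fee_bill(provisioned_gb=500, usd_per_gb_month=0.02)
```

With these hypothetical numbers the metered bill comes to less than half of the flat fee, because the flat fee must cover the 500 GB peak for the whole month.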

LOGICAL STORAGE POOLS

A cloud service provider will manage and maintain the data that was transferred to the cloud by the user. Data is usually distributed across disparate, commodity storage systems. Data storage topologies may be on-premises, in a third-party managed data center or in a public or private cloud.

In an on-prem environment, for various reasons, data may be structured in logical pools. In such a shared environment, storage pools aggregate capacity from various physical storage resources. Pools may vary in size, yield variable but combined performance (IOPS), and provide collective improvements such as cohesive management and aggregate data protection. Logical pools are usually provisioned by administrators via management interfaces. A cloud infrastructure generally utilizes this logical form of storage.
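A logical pool can be pictured as a thin layer over a set of physical devices. The sketch below is a deliberately simplified model: the device names and figures are hypothetical, and pool IOPS is treated as a plain sum, which ignores controller, network and data-protection overheads.

```python
from dataclasses import dataclass, field


@dataclass
class Device:
    name: str
    capacity_tb: float
    iops: int


@dataclass
class Pool:
    name: str
    devices: list = field(default_factory=list)

    def add(self, device):
        """Provision another physical device into the pool."""
        self.devices.append(device)

    @property
    def capacity_tb(self):
        """Aggregate capacity presented to users of the pool."""
        return sum(d.capacity_tb for d in self.devices)

    @property
    def iops(self):
        """Idealized aggregate performance (simple sum, an assumption)."""
        return sum(d.iops for d in self.devices)


pool = Pool("production")
pool.add(Device("ssd-a", 4.0, 90_000))
pool.add(Device("ssd-b", 4.0, 90_000))
pool.add(Device("hdd-a", 16.0, 200))
```

Users of the pool see one 24 TB resource; which device holds a given file is an administrative detail hidden behind the pool.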

RAW AND COOKED—LAKES AND PUDDLES

Storage pools may be equated to a data lake, although there are differences between the two. The data lake is a storage repository holding a vast quantity of unprocessed raw data, known as source or atomic data. A giant bit-bucket where data is pushed without specific organization is a form of data lake. Processed data is sometimes, although less often, referred to as “cooked” data.

Raw data is not necessarily “information”: processing must first abstract it or apply it to a use, which raises its value from raw to informational. A data puddle is a single-purpose data mart built on big data technology, essentially an extract from a data lake.

ON-DEMAND STORAGE

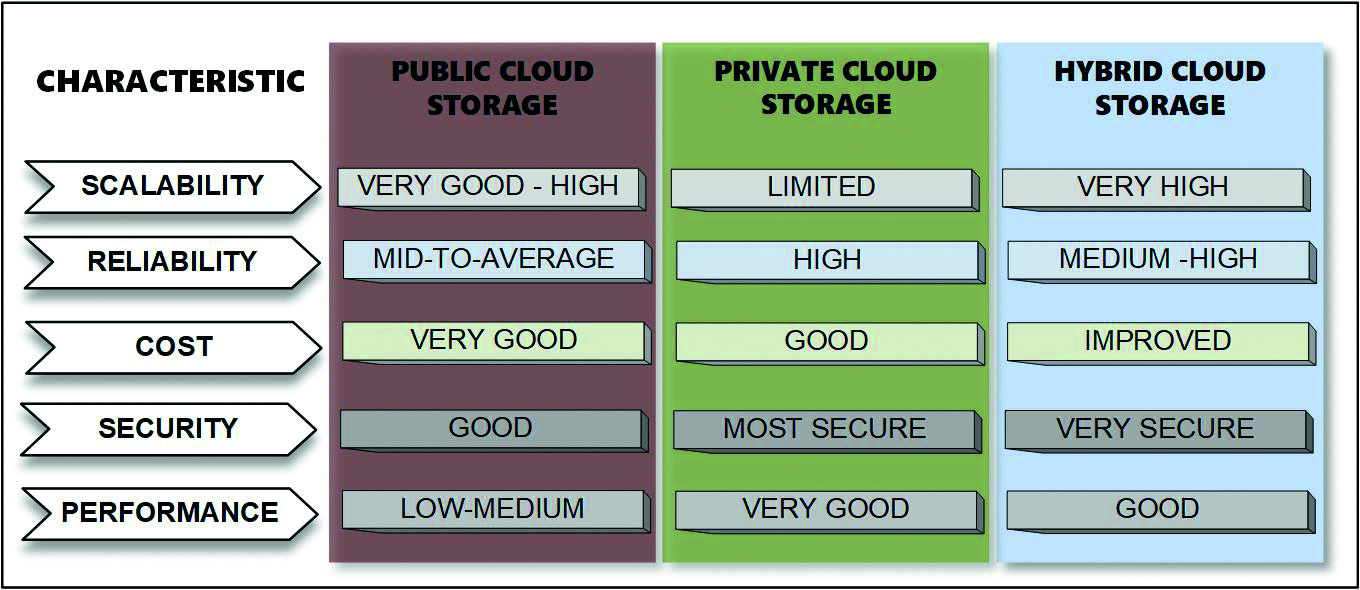

No one likes to pay for things that they don’t need or use. In the cloud, storage services are provided on demand with elastic (increasing and decreasing) capacity as needed, when needed. Opting for cloud storage eliminates the need to buy, manage, depreciate and maintain storage infrastructures that reside on-prem (Fig. 2).

Cloud models have radically driven down the cost-per-gigabyte of storage. However, cloud storage providers, also known as managed service providers (MSPs), may add different sets of operating expenses than those in owned, on-prem storage. Added OpEx costs could make certain cloud-based technologies more expensive, depending on whether the cost equation accounts for how and when the storage is used. Look for options when selecting and architecting your cloud storage solution.
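One way usage can change the equation is through retrieval charges. The sketch below assumes a simple two-part bill (a storage rate plus a per-gigabyte retrieval fee) and finds the monthly retrieval volume at which a cheap deep tier stops being cheaper than a hot tier. All rates are hypothetical, and the hot tier is assumed to carry no retrieval fee.

```python
def monthly_total(size_gb, storage_rate, retrieved_gb=0.0, retrieval_rate=0.0):
    """Storage charge plus retrieval charge for one month."""
    return size_gb * storage_rate + retrieved_gb * retrieval_rate


def break_even_retrieval_gb(size_gb, hot_rate, deep_rate, deep_retrieval_rate):
    """GB retrieved per month at which deep storage costs the same as hot."""
    return size_gb * (hot_rate - deep_rate) / deep_retrieval_rate


# Hypothetical rates in USD per GB.
size_gb = 10_000
limit_gb = break_even_retrieval_gb(
    size_gb, hot_rate=0.02, deep_rate=0.002, deep_retrieval_rate=0.03
)
hot_cost = monthly_total(size_gb, 0.02)
deep_cost_at_limit = monthly_total(size_gb, 0.002, limit_gb, 0.03)
```

With these assumed rates, pulling back more than about 6,000 GB a month from the deep tier would cost more than simply leaving the data in hot storage.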

THOUGHTFUL ACCESS SHUFFLING

Cloud providers concoct many useful and appropriate names for their various services, utilities and architectures. As of 2021, Azure and AWS each offer 200+ different products and services. Without naming anything specific, the concept of shuffling data storage sets to appropriately price and utilize varying locations in the cloud is a topic growing in popularity.

Optimizing storage costs, without burdening the user, employs automated methodologies that might be termed “thoughtful.” Otherwise called tiered storage, such architectures are not new, especially for ground-based (on-prem) facilities. In non-cloud datacenters, the practice of using high-performance storage for editing, compositing or rendering, where accessibility and speed are essential, is commonplace. This is known as Tier 1 storage.

A large SAN or Fibre Channel data store can be a costly proposition. Not all data workflows need this capability, so less-needed data is typically pushed to a Tier 2 (mid-level) environment. Archive data, which is seldom used or is set aside long-term for protection or redundancy, is known as Tier 3, where it may live on tape or object storage or both. Some may also treat Tier 3 as the single case where data is pushed to the cloud (known as deep archive), knowing it won’t require fast retrieval anytime soon.
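The three tiers described above can be expressed as a small decision rule. This is only a sketch: the 90-day window separating Tier 2 from Tier 3 is an assumed threshold, and real facilities set their own policies.

```python
from enum import Enum


class Tier(Enum):
    TIER_1 = "high-performance (editing, compositing, rendering)"
    TIER_2 = "mid-level"
    TIER_3 = "archive (tape, object storage or deep cloud archive)"


def assign_tier(in_active_production, days_since_last_access,
                mid_level_window_days=90):
    """Pick a tier from production status and how recently data was used.

    mid_level_window_days is a hypothetical policy value.
    """
    if in_active_production:
        return Tier.TIER_1
    if days_since_last_access <= mid_level_window_days:
        return Tier.TIER_2
    return Tier.TIER_3
```

For example, `assign_tier(False, 400)` returns `Tier.TIER_3`, while footage on a show currently in the edit suite stays on `Tier.TIER_1` regardless of when it was last opened.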

CUTTING COSTS BY INTELLIGENCE

Early in cloud storage history, moving data around to different data containers (buckets) was accomplished manually and by specific direction of the user. While there may have been cost advantages to deeper storage, the labor effort was not advantageous to those early cloud storage users.

Things have changed over time as new services have appeared, data volumes have grown and users’ acceptance (i.e., trust) of cloud providers has improved.

Unattended migration of data between cloud storage tiers, accomplished by learning data usage patterns, was introduced in the 2017–18 timeframe. By automatically moving data between cloud stores, both users and cloud providers gained new advantages. By simply recognizing how recently data had been accessed, cloud providers could shift dormant data to a “deeper” level without ever having to contact the user/owner of that data.
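The access-period idea can be sketched as a rule table that maps idle time to a target tier. The tier names and the 30-day and 180-day thresholds are assumptions for illustration; this is not any provider's actual algorithm, which may weigh far more than last-access time.

```python
from datetime import datetime, timedelta

# Hypothetical idle thresholds, longest first.
DEMOTION_RULES = [
    (timedelta(days=180), "deep"),
    (timedelta(days=30), "cool"),
]


def target_tier(last_access, now):
    """Tier an object belongs in, given how long it has sat idle."""
    idle = now - last_access
    for threshold, tier in DEMOTION_RULES:
        if idle >= threshold:
            return tier
    return "hot"


def plan_migrations(objects, now):
    """Return {name: new_tier} for objects whose tier should change.

    objects maps a name to a (current_tier, last_access) pair.
    """
    moves = {}
    for name, (current_tier, last_access) in objects.items():
        wanted = target_tier(last_access, now)
        if wanted != current_tier:
            moves[name] = wanted
    return moves


now = datetime(2021, 6, 1)
objects = {
    "promo.mov": ("hot", datetime(2021, 5, 25)),
    "dailies.mxf": ("hot", datetime(2021, 4, 1)),
    "master_2019.mxf": ("cool", datetime(2020, 6, 1)),
    "archive.tar": ("deep", datetime(2020, 1, 1)),
}
moves = plan_migrations(objects, now)
```

Run on a schedule, a planner like this moves dormant objects deeper without any action from the data's owner. Because it compares the wanted tier with the current one, it would also move an object back toward "hot" once it is accessed again.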

Users typically authorize automatic migration when signing up or establishing a particular SLA. Actions might be taken via a user interface or through APIs associated with work orders or activities. Adding intelligence to this practice has evolved over time. Intelligent tiering gives cloud service providers opportunities to expand archive tiers to other levels, which in turn improves the cost structures accordingly.

APPLICABILITY

When engaging cloud storage services, users should look into automated options and evaluate, via cloud-provided models, the value of tiered and automated storage electives. For large projects extending over months to years, one set of guidelines might be appropriate. Smaller projects utilizing shared data sets across multiple production activities may yield different answers.

Experiment with the numbers, frequencies, volumes and applicability to a particular workflow before going down any cloud storage path. Don’t let the “I’m not paying attention to this” excuse be the reason for higher storage costs, which then provide no improved results.

Karl Paulsen is chief technology officer at Diversified and a frequent contributor to TV Tech in storage, IP and cloud technologies. Contact him at kpaulsen@diversifiedus.com.

Karl Paulsen recently retired as a CTO and has regularly contributed to TV Tech on topics related to media, networking, workflow, cloud and systemization for the media and entertainment industry. He is a SMPTE Fellow with more than 50 years of engineering and managerial experience in commercial TV and radio broadcasting. For over 25 years he has written on featured topics in TV Tech magazine—penning the magazine’s “Storage and Media Technologies” and “Cloudspotter’s Journal” columns.