Haptics in ATSC 3.0: Enabling a Rich Broadcast/Broadband Media Experience

A look at the ATSC Recommended Practice on Haptics for ATSC 3.0

As ATSC 3.0 expands to the first 62 markets in the United States over the next 12 months, collectively reaching more than 75% of viewers in the U.S., it promises to move viewing broadcast content on mobile devices into the mainstream.

As part of its ongoing development of the NextGen TV standard, the ATSC recently published a Recommended Practice for adding haptics to ATSC 3.0 broadcast and broadband content on mobile devices capable of haptic feedback.

What is haptics?

Haptics is digitally created touch feedback that uses small vibrating motors, called “actuators,” to produce touch sensations on a device or interface. Haptics is most commonly used in mobile devices, where it gives feedback to users interacting with the touchscreen, e.g. typing on a virtual keyboard. As part of ATSC 3.0, haptics brings new potential for enhanced mobile viewing experiences, for instance:

Music Broadcasts

Julio watches a broadcast concert of his favorite rock & roll band on the TV screen. A notification pops up on the TV to inform him that alternative camera views of each musician, along with a synchronized haptic track, are available through a dedicated application on his smartphone.

Julio launches the application and selects the view of the guitarist during the guitar solo. He switches to the drummer later in the song. The media content on the TV screen and on the smartphone is played in sync. Julio can feel the guitar strings and the drums in the app.

Customized Live Sports

Mark and his wife Jane are both huge fans of football. At the start of the live broadcast of a game, they are notified on the TV that the game is also available through a dedicated app on their smartphone with customizable haptics options. Mark and Jane both launch the app and proceed to select their haptic experiences.

Mark is a big fan of haptic-enabled game actions (clash of the offensive and defensive linemen, hits on the quarterback, sacks, tackles, interceptions, etc.). Jane likes haptics for major game events (touchdown scores, field goals, interceptions). The game on the TV screen and the mobile device synchronously play the content. Mark and Jane enjoy their personalized haptic experiences.

Haptic Advertisements

While Mark and Jane watch the football game in the Customized Live Sports scenario above, the broadcaster runs a set of 30-second ads mid-roll. For Mark, these ads are rendered on the smartphone. Each ad unit plays a coordinated haptic track that keeps his attention during the break.

Since Jane has configured her device for “minimum” haptics, she does not receive haptic effects during the ad units, and she spends the ad break doing something else.

HAPTICS IN ATSC 3.0

These usage scenarios will be made possible by the new ATSC Recommended Practice on Haptics for ATSC 3.0, A/380:2021 (available here and referred to as the Haptics RP in this article).

ATSC 3.0 enables users to view broadcast content on mobile devices, either as the primary device with a built-in ATSC 3.0 tuner or as a companion device wirelessly connected to a primary device such as a TV. The haptic actuator in most mobile devices can be driven in sync with the audio-visual content to enhance the viewing experience and create wholly new experiences, such as those described in the three scenarios above.

While we would prefer haptics to be part of the ATSC 3.0 broadcast stream itself, that would require standardizing haptic tracks in the DASH/ROUTE and MPEG MMT standards currently used as ATSC 3.0 transport protocols, a multi-year process that has just begun.

Until the standardization is complete, the more expedient option is to either include the haptic content as part of the event stream or retrieve a separate content-specific and device-specific haptic file from a cloud (or other) repository and play it in sync with the audio/video content in the broadcast stream. The Haptics RP describes both these alternatives in detail. It makes use of existing ATSC standards to specify the details of incorporating haptics into broadcast media streams without incurring the overhead and time of a new standard.

HAPTICS RP: HIGH-LEVEL WORKFLOW

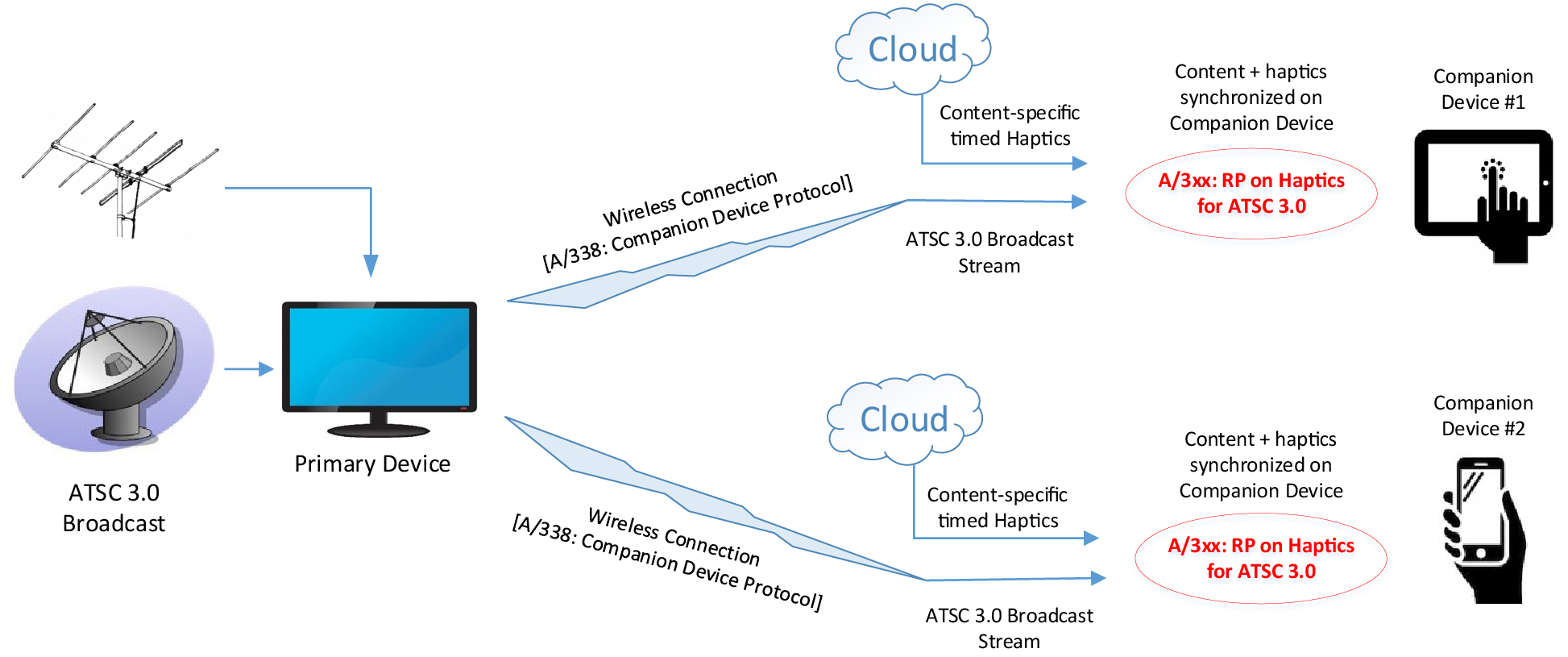

Fig. 1 shows the workflow of haptics in ATSC 3.0 when the mobile device is the companion device (that is, it communicates wirelessly with a primary device such as a TV). The ATSC 3.0 Companion Device Protocol (A/338:2019; available here) is used to transmit the content to the companion device.

The content-specific haptic files are retrieved from the cloud repository and combined with the ATSC 3.0 broadcast stream by an application on the mobile device. The RP describes the handshake between the broadcaster and the cloud repository that is required to ensure synchronous playback of the haptic track with the media stream.

A similar workflow applies when the mobile device is the primary device (with a built-in ATSC 3.0 tuner).

It is worth noting that in cases where the haptic track is sparse, it can be included in the ATSC 3.0 event stream itself, and retrieval of a separate haptic file from a cloud repository is not needed. If the haptic track is in a separate file, the URL and an authentication token are first retrieved from the cloud repository and inserted into the ATSC 3.0 broadcast stream.

When the stream reaches the mobile device, the app uses the URL and authentication token to retrieve the haptic track and combine it with the media stream.
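The app-side steps described above can be sketched roughly as follows. This is a minimal illustration in Python, not the mechanism defined in the Haptics RP: the `HapticReference` type, the use of an HTTP bearer header for the token, the example URL and the track layout (a list of timestamped effects) are all assumptions made for the example.

```python
import urllib.request
from dataclasses import dataclass


@dataclass
class HapticReference:
    """Hypothetical URL/token pair extracted from the broadcast stream."""
    url: str
    auth_token: str


def build_haptic_request(ref: HapticReference) -> urllib.request.Request:
    # Assumption: the token is presented as an HTTP bearer token.
    # The RP may specify a different way of passing it.
    return urllib.request.Request(
        ref.url,
        headers={"Authorization": f"Bearer {ref.auth_token}"},
    )


def effects_due(track, last_time_s, now_s):
    """Return effects whose timestamp lies in (last_time_s, now_s].

    `track` is a hypothetical list of dicts with a media-time stamp "t"
    in seconds. Calling this on every playback tick keeps the haptic
    effects aligned with the media clock.
    """
    return [e for e in track if last_time_s < e["t"] <= now_s]


# Example with made-up values (no network call is made here).
ref = HapticReference("https://haptics.example.com/tracks/concert-01", "abc123")
request = build_haptic_request(ref)

track = [
    {"t": 0.5, "effect": "guitar_strum"},
    {"t": 1.2, "effect": "drum_hit"},
    {"t": 3.0, "effect": "drum_hit"},
]
due = effects_due(track, last_time_s=0.0, now_s=1.5)
```

Driving playback from the media clock, rather than from a separate timer, is one simple way to keep the haptic track from drifting relative to the audio and video.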

HAPTICS IN LIVE BROADCASTS

As the music broadcasts and customized live sports scenarios above illustrate, being able to feel the action, in addition to watching and listening to it, distinctively draws in viewers. For live sports events, the output from multiple sensors on the playfield (or on the players’ attire) is part of the “Sensor Feed” uploaded to the cloud repository and used to generate the haptic events that become part of the haptic content.

The number and types of sensors used to generate these live haptic events and the Sensor Feed format may be proprietary to each broadcaster. As such, the mechanism used for uploading the Sensor Feed to the cloud repository is outside the RP’s scope.
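Because the Sensor Feed is broadcaster-specific, any concrete example is necessarily invented. Purely as an illustration of the idea of turning sensor readings into haptic events, the sketch below assumes a feed of timestamped impact readings (in g) and applies a simple threshold. The field names, the threshold and the scaling are hypothetical and do not come from the RP.

```python
def haptic_events_from_feed(samples, threshold_g=4.0, max_g=12.0):
    """Convert hypothetical impact readings into haptic events.

    Readings below `threshold_g` are ignored. Stronger impacts map to an
    intensity between 0 and 1, capped at 1.0 for readings above `max_g`.
    """
    events = []
    for sample in samples:
        if sample["impact_g"] >= threshold_g:
            intensity = min(sample["impact_g"] / max_g, 1.0)
            events.append({"t": sample["t"], "intensity": round(intensity, 2)})
    return events


# Made-up feed: a light contact, a tackle and a very hard hit.
feed = [
    {"t": 1.0, "impact_g": 2.0},
    {"t": 2.5, "impact_g": 6.0},
    {"t": 4.0, "impact_g": 15.0},
]
events = haptic_events_from_feed(feed)
```

A production system would likely combine several sensor types and smooth the readings, but the basic step of mapping a measured quantity to a timed effect with an intensity is the same.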

CUSTOMIZING HAPTIC EXPERIENCES ON MOBILE DEVICES

In the customized live sports scenario above, Mark’s selection corresponds to the “maximum haptics” user preference value specified in the RP, while Jane’s selection corresponds to the “minimum haptics” value.

Not all mobile devices have the same quality or number of actuators; most have a single SD (standard definition) actuator, while many newer devices have one or more HD (high definition) actuators. The RP describes how the user preferences and actuator type are included in the customization parameters list to ensure that the downloaded haptic file can be played properly on the device.
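As a rough sketch of how such a customization parameters list might be assembled, the Python below encodes a user preference and an actuator type as a query string and filters events by preference. The parameter names, the query-string encoding and the `major` flag are assumptions for illustration. The RP defines the actual parameters, and it may define preference values beyond the two named in this article.

```python
from urllib.parse import urlencode

# The two preference values named in this article; the RP may define more.
VALID_PREFERENCES = {"minimum", "maximum"}
VALID_ACTUATORS = {"SD", "HD"}


def customization_query(preference, actuator_type, actuator_count=1):
    """Build a hypothetical query string describing user and device."""
    if preference not in VALID_PREFERENCES:
        raise ValueError(f"unknown preference: {preference!r}")
    if actuator_type not in VALID_ACTUATORS:
        raise ValueError(f"unknown actuator type: {actuator_type!r}")
    return urlencode({
        "preference": preference,
        "actuator": actuator_type,
        "count": actuator_count,
    })


def select_events(events, preference):
    """Keep all events for "maximum", only major ones for "minimum".

    The "major" flag is an assumption modeled on the sports scenario,
    where one viewer wants every hit and the other only key plays.
    """
    if preference == "maximum":
        return list(events)
    return [e for e in events if e["major"]]


mark_query = customization_query("maximum", "HD", actuator_count=2)
jane_query = customization_query("minimum", "SD")

game_events = [
    {"name": "tackle", "major": False},
    {"name": "touchdown", "major": True},
]
jane_events = select_events(game_events, "minimum")
```

Sending the device's actuator type with the request lets the repository return a file the device can actually render, instead of relying on the app to adapt an HD track to an SD actuator.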

HAPTICS-ENABLED ADVERTISEMENTS ON MOBILE DEVICES

Mobile ads are an excellent way to reach the coveted demographics that spend a lot of time on their mobile devices. Given that ATSC 3.0 broadcasts are designed to be consumed on mobile devices, mobile ads will continue to be a significant revenue source for ATSC 3.0 broadcasters. The Haptics RP describes how haptic video ads can be inserted into the ATSC 3.0 broadcast stream.

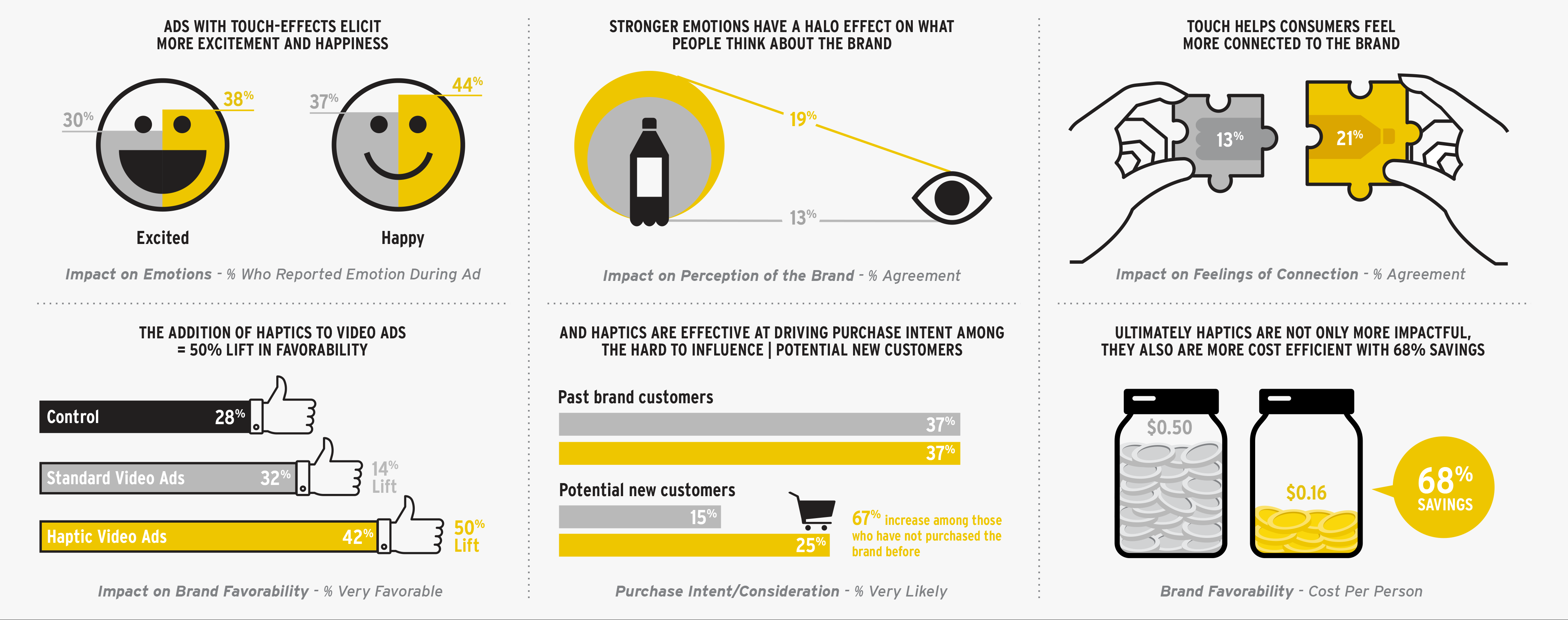

Haptics-enabled mobile advertisements elicit excitement and happiness, evoke stronger positive emotions in people, improve brand favorability and most importantly, effectively drive purchase intent among the hard-to-influence and new customers.

The infographics in Fig. 2 summarize the results of a 2016 study conducted by MAGNA, IPG Media Labs and Immersion Corp. to quantify the impact of touch-enabled mobile advertising. The study tested 1,137 people across a range of brands: BMW, Royal Caribbean, Truvia and Arby’s. On each of the criteria studied, the results are compelling: Viewers prefer haptic video ads by a statistically significant margin.

NEXT STEPS

Haptics provide an additional layer of entertainment and sensory immersion that goes beyond traditional viewing. As ATSC 3.0 is rolled out in various markets in the U.S. and worldwide, the demand for leveraging the full capabilities of ATSC 3.0 will only increase. Immersion is looking forward to working with broadcasters to integrate the Haptics RP and enable mobile devices to take advantage of the haptic-enabled content stream.

Yeshwant Muthusamy, Ph.D., is senior director, standards for Immersion Corp.