The Impact of AI/ML on TV Production and Playout

Turning big data into real-time actionable analytics

OTTAWA—Trend alert: Artificial intelligence/machine learning (AI/ML) is becoming an integral part of the total TV production/playout process.

“AI/ML is shifting to provide tremendous value to broadcasters and content producers,” said Amro Shihadah, co-founder and chief operating officer of IdenTV, a McLean, Va.-based real-time video analysis market researcher. “AI/ML is achieving this by transforming big data from a cost center and opaque set of structured/unstructured datasets into real-time actionable analytics and tools for big data search and recall, creating a better user experience, and generating revenue from new content distribution channels.”

Broadcast consultant Gary Olson, who has just released the second edition of his book, “Planning and Designing the IP Broadcast Facility—A New Puzzle To Solve,” says the technology is already showing up in elements of the production chain and is expected to expand its footprint.

“I see AI/ML appearing in editing, graphics and media management products in 2020,” Olson said. As the year progresses, “it will be interesting to see which vendors will claim their products have AI or ML.”

CONTENT DISCOVERY

Many major broadcasters and TV studios have vast libraries ripe for direct-to-consumer online sales. The challenge lies in determining which of these programs will appeal to modern consumers, and for what reasons, without having employees watch all of them in real time.

Prime Focus Technologies’ CLEAR Vision Cloud has a cloud-based AI engine that can do this work across a number of search variables, and in “record time,” according to the company.

“There could be one AI engine that looks at identifying faces in the video,” said Muralidhar Sridhar, vice president of AI and Machine Learning for PFT. “Another one may look at signature sounds of, let’s say, a person splashing through water, while a third searches for distinct objects. Best yet, what would take humans hours to achieve looking at a piece of content can be done by our AI in real time.”

Primestream’s Xchange platform uses AI/ML to power its content discovery tools, providing a wide range of search options in the process, according to Alan Dabul, director of product development for Primestream.

“You can narrow the search down not just to President Trump, but to those specific clips where he is talking about taxes,” he said. “You can then narrow the search further to those times when he is speaking about taxes in an office setting, and then see who is with the president in the shot at that time.”
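The kind of step-by-step narrowing Dabul describes can be pictured as successive filters over AI-generated clip metadata. The sketch below is purely illustrative and is not Primestream's implementation: the `Clip` record, the `narrow` function, the tag values and the sample library are all hypothetical, and it assumes an upstream AI engine has already tagged each clip with people, topics and a setting.

```python
from dataclasses import dataclass, field


@dataclass
class Clip:
    """Hypothetical metadata record, assumed to be produced by an AI tagger."""
    clip_id: str
    people: set = field(default_factory=set)
    topics: set = field(default_factory=set)
    setting: str = ""


def narrow(clips, person=None, topic=None, setting=None):
    """Keep only the clips that match every filter that was supplied."""
    results = []
    for clip in clips:
        if person is not None and person not in clip.people:
            continue
        if topic is not None and topic not in clip.topics:
            continue
        if setting is not None and clip.setting != setting:
            continue
        results.append(clip)
    return results


# Invented sample library; real tags would come from the analysis engine.
library = [
    Clip("c1", {"President Trump", "Aide A"}, {"taxes"}, "office"),
    Clip("c2", {"President Trump"}, {"taxes"}, "rally"),
    Clip("c3", {"President Trump"}, {"trade"}, "office"),
    Clip("c4", {"Senator B"}, {"taxes"}, "office"),
]

# Each step adds one more filter, mirroring the progressive search.
by_person = narrow(library, person="President Trump")
by_topic = narrow(library, person="President Trump", topic="taxes")
by_setting = narrow(library, person="President Trump", topic="taxes",
                    setting="office")

# Who else appears in the remaining shots?
companions = set()
for clip in by_setting:
    companions |= clip.people - {"President Trump"}

print([c.clip_id for c in by_person])   # ['c1', 'c2', 'c3']
print([c.clip_id for c in by_topic])    # ['c1', 'c2']
print([c.clip_id for c in by_setting])  # ['c1']
print(companions)                       # {'Aide A'}
```

A production system would run such queries against an indexed metadata store rather than a Python list, but the filtering logic is the same in spirit.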

SPORTS AND LIVE EVENTS

Sports and other live events are among the most labor-intensive productions for broadcasters, given how much content has to be created on the fly. Tedial’s SMARTLIVE metadata engine uses AI/ML to automate media management tasks associated with these productions, including metadata tagging, automatic clip creation and distribution during live events to digital platforms and social media. SMARTLIVE can also manage multivenue feeds and support multiple, instantaneous content searches to integrate archival footage into live broadcasts.

“SMARTLIVE allows the production team to create more content leading to increased fan engagement and additional revenue, using the same budget and with the same team,” said Jerome Wauthoz, vice president of products for Tedial. “SMARTLIVE also connects directly to existing production environments so our customers can use their current infrastructure to ingest, edit and deliver content; no additional investment is necessary.”

CAPTIONING AND TRANSLATIONS

Another labor-intensive area where AI/ML is gaining traction is multilingual captioning. Using speech-to-text AI systems, vendors can automatically generate text captions from the content’s audio, and provision them in a range of languages within the same data stream.

“The algorithms are trained to learn from data in real-time, absorbing local terms and dialects for the optimal captioning experience,” said Brandon Sullivan, senior offering manager for IBM Watson Media. “As AI and machine learning training capabilities improve, local dialects, places and specific names, as well as the voices of individual speakers, will all be accurately captured. Down the road, this will not only transform closed captioning but also automated translation, video indexing, and more.”

Captioning and lip sync are two of the AI/ML technologies featured as part of Interra Systems’ BATON, a video QC platform. “With AI/ML, you can improve the accuracy and speed of captioning, which is a resource-intensive, time-consuming process,” said Anupama Anantharaman, vice president of product management for the Silicon Valley-based provider of video QC and monitoring technology. “It is also particularly effective at detecting ‘lip sync,’ the alignment between the movement of lips onscreen and what is being said.”

Telestream’s Telestream Cloud includes captioning as one of its many cloud-based AI/ML-enabled offerings, the others being video transcoding for multiple delivery platforms and quality/compliance checks, according to Remi Fourreau, cloud product manager for the company.

“We use the speech-to-text capabilities of many cloud-based providers to generate accurate captions and subtitles in many languages,” Fourreau said. “This is an area where AI/ML really shines in doing the task accurately and efficiently.”

ENCO’s enCaption4 platform provides automated closed captioning for live and pre-recorded TV content in real time, combining AI-driven machine learning with a neural-network speech-to-text engine. In addition to newsroom rundown imports that teach unique words via AI, enCaption4 can be taught special words such as host and cast names, and local and regional terms. Other AI-driven enhancements improve the captioning of punctuation and capitalization.

“enCaption can accurately spell unusual words learned from ingested lists and scripts, and without creating speech pattern profiles for every speaker,” said Ken Frommert, president of ENCO. “This is an important benefit for news operations automating and captioning speech from various anchors, reporters, meteorologists, and studio guests.”

COMPRESSION

Video compression has always been a balance between data rate reduction and video quality. Through AI- and ML-based cloud solutions such as its VOS360 Live Streaming Platform, Harmonic aims to strike this balance more effectively.

“Our PURE Compression Engine uses AI/ML to improve the algorithms that manage video compression,” said Jean-Louis Diascorn, senior product marketing manager, who leads Harmonic’s AI/ML for video compression advances. “These improvements are achieved far quicker using AI/ML compared with using human engineers. We continue to make progress on the work that we presented at last year’s NAB BEITC and are now aiming to address the density aspect.”

RECOMMENDATION ENGINES

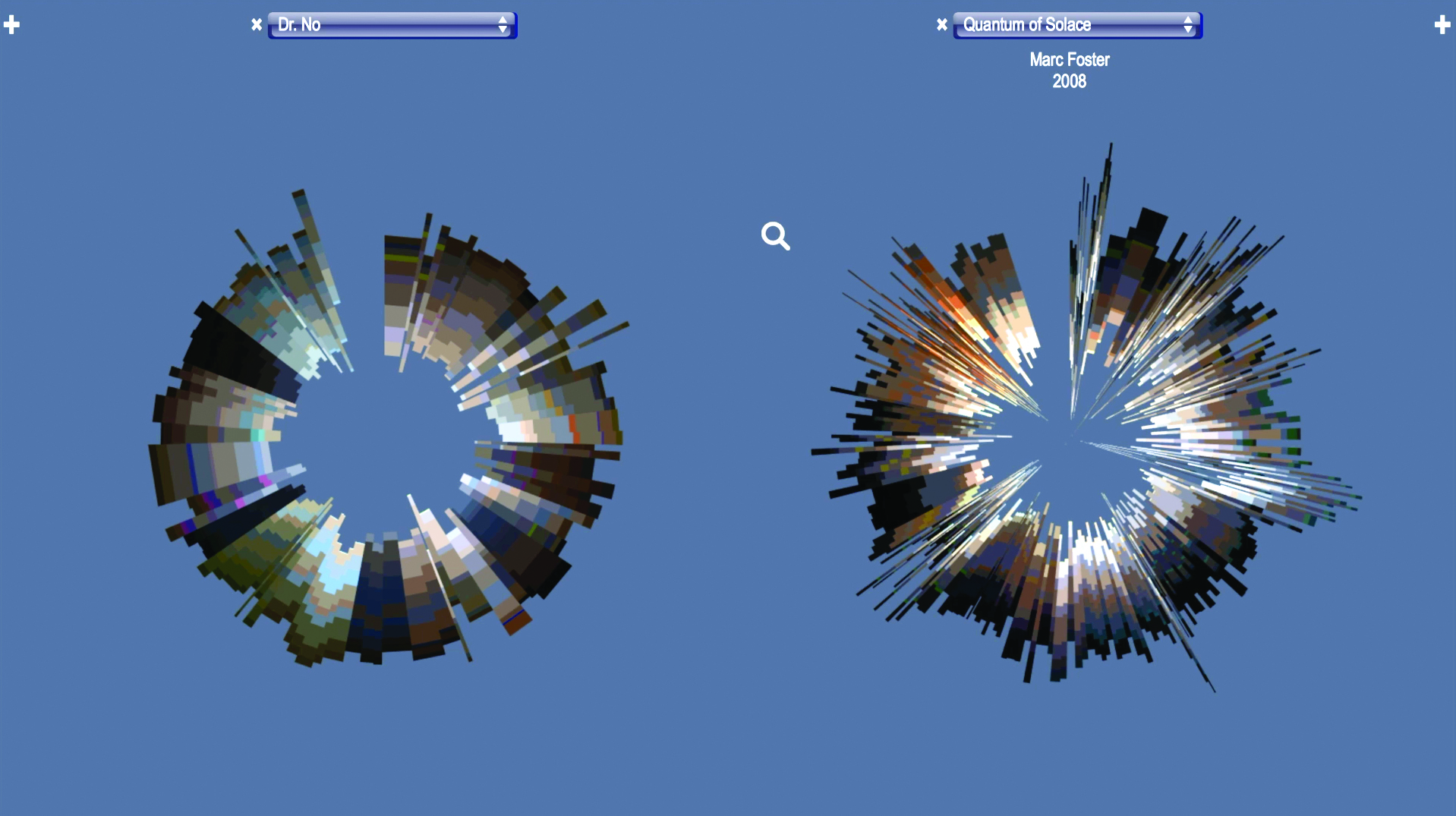

Streaming services such as Amazon, Netflix and YouTube use AI/ML-enabled recommendation engines to mine their viewers’ current content choices, and use what they find to recommend similar programs that might be of interest. Vionlabs’ AI/ML-enabled Content Discovery Platform is designed to help broadcasters assess their own content libraries, to focus and enhance their direct-to-consumer sales online.
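To make the idea of recommending “similar programs” concrete, here is a minimal sketch that ranks unwatched titles by how closely their descriptive tags overlap with what a viewer has already watched. It is an assumption-laden illustration, not the method used by Amazon, Netflix, YouTube or Vionlabs: the catalog, the tags, and the `jaccard` and `recommend` helpers are invented, and Jaccard overlap stands in for the far richer models real services use.

```python
def jaccard(a, b):
    """Share of tags two sets have in common (0.0 when both are empty)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def recommend(watched, catalog, top_n=2):
    """Rank unwatched titles by tag overlap with the viewer's history."""
    # The viewer profile is simply the union of tags from watched titles.
    profile = set()
    for title in watched:
        profile |= catalog[title]

    scored = [
        (jaccard(profile, tags), title)
        for title, tags in catalog.items()
        if title not in watched
    ]
    # Highest score first; ties are broken alphabetically by title.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [title for score, title in scored[:top_n] if score > 0]


# Invented catalog; real tags might come from AI-based content analysis.
catalog = {
    "Harbor Patrol": {"crime", "drama", "coastal"},
    "Night Shift": {"crime", "drama", "urban"},
    "Baking Finals": {"reality", "food", "competition"},
    "Cold Case Files": {"crime", "documentary"},
    "Street Kitchen": {"food", "travel", "documentary"},
}

suggestions = recommend(["Harbor Patrol"], catalog)
print(suggestions)  # ['Night Shift', 'Cold Case Files']
```

Titles with no overlapping tags are dropped rather than padded into the list, which is a design choice: an empty recommendation is treated as better than an irrelevant one.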

“High-quality data can help broadcasters understand so much more about their content and make better informed decisions throughout the content cycle,” said Marcus Bergström, CEO of the Sweden-based provider of video discovery technology. “One example of this is in content recommendations and providing broadcasters with a deeper understanding of how successful shows appeal to viewers. It could also help them automatically comply with regulations for post-watershed content.”

Last month, the company launched its “Emotional Fingerprint API” to help media companies make better decisions based on AI-generated video data and insights. Emotional Fingerprint API uses computer vision and machine learning to generate sentiment data, creating a unique personal viewer experience based on Vionlabs’ recommendations, according to the company.

Emotional Fingerprint API has been developed to measure thousands of factors during the screening of a video, including colors, pace, audio and object recognition, in order to produce an AI-derived fingerprint, frame by frame, that represents the emotional structure of content.

THERE ARE LIMITS

AI/ML-enabled systems are now fulfilling many roles in the TV production/playout stream. But they can’t do everything, at least not yet.

“For machine learning tools to work effectively, you need to continuously fine tune models and need large amounts of well-prepared data,” said Anantharaman. “There will be challenging situations where human intervention will be needed. Yet, for the majority of content, AI/ML can provide an extremely high level of accuracy.”

James Careless is an award-winning journalist who has written for TV Technology since the 1990s. He has covered HDTV from the days of the six competing HDTV formats that led to the 1993 Grand Alliance, and onwards through ATSC 3.0 and OTT. He also writes for Radio World, along with other publications in aerospace, defense, public safety, streaming media, plus the amusement park industry for something different.