Streaming video

Today, content producers no longer have a captive audience of local TV screens. Their content is likely to be viewed by anyone with an Internet (or even cellular) connection. The content owners that use these resources will wisely open up their audience to a truly global reach.

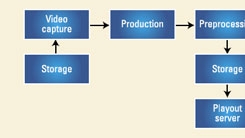

Providing streaming video to a user requires several unique elements, including the use of special formats, compression and associated metadata. Ideally, the studio asset management system will do most of this automatically. (See Figure 1.)

First, video material is selected or queued for streaming. If the segment is archived on tape, a tape-to-file capture must be made. An edit decision list is assembled that essentially sets the in and out points for a segment. Furthermore, ads and promos are added. One major difference from nonlinear editing is that these bumpers can be added as an actual video edit or as a playlist item that is retrieved at streamout time. The latter is more frequently used and has the advantage of saving production time and storage space.
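The assembly step described above can be sketched as a small data structure. This is only an illustration under stated assumptions: the class names, the file name and the asset IDs are hypothetical, and a real asset management system would carry far more information per entry.

```python
from dataclasses import dataclass
from typing import List, Union


@dataclass
class Segment:
    """A program segment defined by in and out points (seconds) in a source file."""
    source: str
    in_point: float
    out_point: float

    @property
    def duration(self) -> float:
        return self.out_point - self.in_point


@dataclass
class PlaylistItem:
    """An ad or promo referenced by ID and retrieved only at stream-out time."""
    asset_id: str
    duration: float


def total_duration(playlist: List[Union[Segment, PlaylistItem]]) -> float:
    """Running time of the assembled stream, in seconds."""
    return sum(item.duration for item in playlist)


# Hypothetical example: a promo, a three-minute news segment, then an ad.
playlist = [
    PlaylistItem(asset_id="promo-001", duration=15.0),
    Segment(source="news_0600.mxf", in_point=120.0, out_point=300.0),
    PlaylistItem(asset_id="ad-042", duration=30.0),
]
print(total_duration(playlist))  # 225.0
```

Because the bumpers are held as references rather than rendered into the segment, swapping an ad means changing one playlist entry instead of re-editing and re-storing the video.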

The desired video material must be repurposed for the target device, be it a computer or a mobile device such as a cell phone. Next, a change in resolution is needed (format conversion), and the material must be encoded (compressed) to meet the bandwidth constraints of Internet service providers or mobile service. Given the typically smaller display size of these devices, video noise reduction should also be applied to maximize the efficiency of the encoding.
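As a rough illustration of the format conversion and bandwidth trade-off, the following sketch scales a frame to a target width and estimates how many encoded bits are available per pixel. The function names and the example figures (a 320-pixel-wide target, 300kb/s, 15 frames per second) are assumptions chosen for illustration, not recommendations.

```python
from typing import Tuple


def scale_to_width(src_w: int, src_h: int, target_w: int) -> Tuple[int, int]:
    """Return (width, height) for target_w, preserving the aspect ratio.

    The height is rounded to an even number, which 4:2:0 encoders
    commonly require. Square pixels are assumed.
    """
    height = round(src_h * target_w / src_w / 2) * 2
    return target_w, height


def bits_per_pixel(bitrate_bps: int, width: int, height: int, fps: float) -> float:
    """Average number of encoded bits available for each pixel of each frame."""
    return bitrate_bps / (width * height * fps)


# Assumed example: a 1920x1080 source repurposed for a small handheld display.
width, height = scale_to_width(1920, 1080, 320)
print(width, height)  # 320 180
print(round(bits_per_pixel(300_000, width, height, 15), 3))  # 0.347
```

The bits-per-pixel figure is only a coarse guide; the quality actually achieved depends on the codec, the encoder settings and the content itself.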

Types of packaging and video codecs

Many of the file formats used today are actually multimedia containers that multiplex the various video, audio and data components into one package or file. (See Figure 2.) The file wrapper does not always uniquely define the type of video coding that is used. Thus, AVI, ASF, FLV, MOV, MPEG-2 Systems, MP4 (MPEG-4 multimedia), MXF and 3GP (for mobile phones) all define the format of the container (or transport layer) that, in turn, includes the compressed audio and video essence, and other data. Note the distinction between data and metadata. Data could include subtitles or other text or information. Metadata is information about the file itself, such as a description of the content, the author, the copyright holder, and archiving or indexing keywords.
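The multiplexing idea can be sketched in a few lines. The `Packet` and `Container` classes below are hypothetical and far simpler than any real wrapper such as MP4 or MXF; the sketch only shows essence and data packets being interleaved by timestamp while metadata is stored once, describing the file as a whole.

```python
import heapq
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Packet:
    timestamp_ms: int  # presentation time of this chunk
    stream: str        # "video", "audio" or "data" (e.g., subtitles)
    payload: bytes


@dataclass
class Container:
    metadata: Dict[str, str] = field(default_factory=dict)  # information about the file
    packets: List[Packet] = field(default_factory=list)     # interleaved essence and data


def mux(metadata: Dict[str, str], *streams: List[Packet]) -> Container:
    """Interleave streams that are each already in time order."""
    merged = heapq.merge(*streams, key=lambda p: p.timestamp_ms)
    return Container(metadata=dict(metadata), packets=list(merged))


video = [Packet(0, "video", b"I"), Packet(40, "video", b"P"), Packet(80, "video", b"P")]
audio = [Packet(0, "audio", b"a0"), Packet(23, "audio", b"a1"), Packet(46, "audio", b"a2")]
subtitles = [Packet(50, "data", b"Hello")]

container = mux({"title": "Evening news", "author": "Example Studio"}, video, audio, subtitles)
print([(p.timestamp_ms, p.stream) for p in container.packets])
```

Note that nothing in the container structure says which codec produced the video payloads, which mirrors the point that the wrapper does not always define the coding.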

The producer's choice of file and video format is a function of compression quality and efficiency, product support at the user's side, and possible licensing terms for the encoders. Each of the following streaming video technologies combines compression, file formats (containers) and streaming protocols. Many of the codec providers claim their codec exceeds the performance of the others. In reality, comparisons are exceedingly difficult, as there are many different encoding parameters that can be used, resulting in varying degrees of playback performance.


Some of the most common formats are:

- Clipstream: Destiny Software's streaming technology uses streaming video encoder and server technology on standard Web servers. As such, a dedicated streaming transport (e.g., one built on UDP) is unnecessary. The player is implemented by a small Java applet running on the viewer's device, meaning that the service can also run on a Java-enabled cell phone.

- Flash: It uses On2 Technologies' proprietary TrueMotion VP6 video codec. On2 (originally known as The Duck Corporation) claims that VP6 offers better image quality and faster decoding performance than Windows Media 9, Real 9, H.264 and QuickTime MPEG-4. VP6 is based on traditional spatial, temporal and entropy coding techniques, including discrete cosine transform (DCT) and motion compensation, with extended (long range) motion vectors and quarter-pel motion estimation. The On2 VP6 Simple Profile encoding is said to play back HD resolutions on a 2.5GHz Pentium-4 PC and 3/4 HDTV on a slower 405MHz platform. VP6 is also used in the On2 Flix Live application, which enables encoding of live video feeds.

- QuickTime: Apple's file format functions as a multimedia container file that stores audio, video, effects or text. QuickTime 7 is compliant with H.264/MPEG-4 AVC and the 3GPP standard for third-generation high-speed wireless networks. The decoder supports Baseline, Extended and parts of Main Profile. QuickTime Streaming Server enables delivery of live or prerecorded content in real time over the Internet.

- RealVideo: RealNetworks based the format on H.263. However, it is now a proprietary video codec. RealVideo is streamed using the proprietary protocol Real Data Transport (RDT). The connection, however, is set up and managed using Real Time Streaming Protocol (RTSP). While RealVideo can use both constant and variable bit-rate encoding, the latter is generally unusable over streaming networks, as the available channel capacity is not dynamically known.

- SHOUTcast: Nullsoft recently implemented video streaming using the Nullsoft Streaming Video (NSV) format that encompasses the VP3 codec developed by On2. The codec is now in the public domain. It is similar in quality and bit rate to MPEG-1.

- Windows Media Video: Carried within the ASF container format, Microsoft's formerly proprietary WMV codec has been standardized as SMPTE 421M, also called VC-1. Built on the same family of block-based, motion-compensated techniques as MPEG-4 AVC, VC-1 employs an adaptive block-size transform and a modified deblocking filter that reduces artifacts in areas of high detail. VC-1 also has a special mode for handling interlaced video.

Streaming protocols make it happen

In order to stream audio and video over the Internet, various stream and transport protocols have been developed. The first of these was User Datagram Protocol (UDP), which sends the data in a series of small packets. The problem with UDP is that errors must be corrected, concealed or tolerated; the protocol itself has no mechanism for retransmitting lost data. As the Internet is a variable-bandwidth medium, with no guarantee of packet arrival, UDP alone cannot be used if reliable video transmission is desired.

The existing TCP/IP suite, with core protocols being the Transmission Control Protocol and the Internet Protocol, is already mature from years of Internet service. It guarantees reliable and in-order delivery of data from server to client. However, it does so by means of a series of timeouts and retransmissions, which renders streamed audio and video choppy when errors are encountered.

Developed later, the Real-time Transport Protocol (RTP) and Real-time Transport Control Protocol (RTCP), which both run on top of UDP, address these issues. RTP defines a standardized packet format for the audio and video data, and RTCP allows quality-of-service information to be sent back to the originating server. RTCP can thus send information on lost packets back to the server, which in turn can modify the encoding or streaming process. RTSP was then added, allowing the user (client) to remotely control a stream by means of VCR-like controls.
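To make the packet format concrete, here is a minimal sketch of the 12-byte fixed RTP header (version, marker, payload type, sequence number, timestamp and source identifier), together with a simplified loss count of the kind a receiver could report back over RTCP. The function names are hypothetical; header extensions, padding and contributing-source lists are left out, and the loss count assumes packets arrive in order without duplicates.

```python
import struct

RTP_VERSION = 2
HEADER = struct.Struct("!BBHII")  # 12 bytes, network byte order


def pack_rtp_header(payload_type, seq, timestamp, ssrc, marker=False):
    """Build a fixed RTP header with no padding, extension or CSRC entries."""
    byte0 = RTP_VERSION << 6                          # V=2, P=0, X=0, CC=0
    byte1 = (int(marker) << 7) | (payload_type & 0x7F)
    return HEADER.pack(byte0, byte1, seq & 0xFFFF,
                       timestamp & 0xFFFFFFFF, ssrc & 0xFFFFFFFF)


def parse_rtp_header(data):
    """Decode the fixed header fields from the start of a packet."""
    byte0, byte1, seq, timestamp, ssrc = HEADER.unpack(data[:HEADER.size])
    return {
        "version": byte0 >> 6,
        "marker": bool(byte1 >> 7),
        "payload_type": byte1 & 0x7F,
        "sequence": seq,
        "timestamp": timestamp,
        "ssrc": ssrc,
    }


def count_lost(sequence_numbers):
    """Count missing packets from gaps in received 16-bit sequence numbers.

    Simplification: assumes in-order arrival with no duplicates.
    """
    lost = 0
    for prev, cur in zip(sequence_numbers, sequence_numbers[1:]):
        lost += (cur - prev - 1) & 0xFFFF  # the mask handles wraparound at 65535
    return lost


header = pack_rtp_header(payload_type=96, seq=65535, timestamp=90000,
                         ssrc=0x12345678, marker=True)
print(parse_rtp_header(header))
print(count_lost([65534, 65535, 0, 3]))  # 2 (packets 1 and 2 never arrived)
```

The sequence number is what lets a receiver detect loss even though UDP itself gives no indication, and the timestamp lets it schedule playback despite variable network delay.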

Adobe, Microsoft and RealNetworks each have a proprietary protocol: Real Time Messaging Protocol (RTMP), Microsoft Media Server (MMS) protocol and RDT, used to stream Flash, Windows Media (earlier versions) and RealVideo, respectively.

Video can be streamed over unicast or multicast connections, essentially one-to-one (on-demand) vs. broadcast. Unicast connections require large server horsepower and connection bandwidth, as the stream is duplicated for each client. The most efficient broadcast is IP multicast, where the source sends each packet only once, and intermediate network nodes have the duty of replicating packets as needed. However, this means that all nodes must support the protocol, a situation that is not currently in place. (Peer-to-peer protocols have also been developed by various entities, but these all share the problem of rampant copyright abuse.)
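The difference in server load is simple arithmetic, sketched below. The function names and the audience figures are assumptions for illustration, and protocol overhead is ignored.

```python
def unicast_egress_bps(viewers: int, stream_bps: int) -> int:
    """Unicast: the server sends a separate copy of the stream to every client."""
    return viewers * stream_bps


def multicast_egress_bps(viewers: int, stream_bps: int) -> int:
    """IP multicast: the source sends each packet once; the network replicates it."""
    return stream_bps if viewers > 0 else 0


# Assumed example: 10,000 simultaneous viewers of a 300kb/s stream.
print(unicast_egress_bps(10_000, 300_000))    # 3000000000 (3Gb/s from the server)
print(multicast_egress_bps(10_000, 300_000))  # 300000 (300kb/s from the server)
```

With unicast, the server-side requirement grows linearly with the audience; with multicast it stays constant, which is why the lack of end-to-end multicast support matters so much.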

Content mastering

Video (and audio) that will be streamed is often derived from content that was originally targeted for another use, such as broadcast. In order to make for the best presentation on PCs or other devices, the content must be repurposed for the specific use.

An extreme example of this is streaming to a cell phone, where the screen is often not more than an inch in size. A simple downconversion of the scanning format (resolution) can often result in illegible graphics and unsatisfactory content. Production tools and services are available that can greatly improve the appearance by intelligently cropping talking heads — even automatically — to allow for a better presentation. Graphics can also be recreated to display better on a smaller screen, and audio may require reprocessing as well. For efficient workflow, repurposing can be done in parallel with the original content production.
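The cropping step can be sketched as a small geometry calculation. The sketch assumes the position of the talking head (the region of interest) is already known, for example from an operator or a face detector; the function name and the frame sizes are hypothetical, and this is not a description of any particular product.

```python
def crop_around(frame_w, frame_h, roi_cx, roi_cy, crop_w, crop_h):
    """Return an (x, y, w, h) crop window centered on a region of interest.

    The window is shifted as needed so that it stays inside the frame.
    """
    if crop_w > frame_w or crop_h > frame_h:
        raise ValueError("crop window is larger than the frame")
    x = min(max(roi_cx - crop_w // 2, 0), frame_w - crop_w)
    y = min(max(roi_cy - crop_h // 2, 0), frame_h - crop_h)
    return x, y, crop_w, crop_h


# Assumed example: a 4:3 window cut from a 1920x1080 frame.
print(crop_around(1920, 1080, 960, 540, 960, 720))   # (480, 180, 960, 720)
print(crop_around(1920, 1080, 1700, 300, 960, 720))  # (960, 0, 960, 720)
```

Cropping before downconversion means the subject occupies more of the small screen than it would if the full frame were simply scaled down.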

Summary

Streamed content is becoming every bit as important as conventionally broadcast programming. With digital storage of program assets, it is relatively straightforward to develop an infrastructure that makes the most use of content by repurposing for streaming applications.

Aldo Cugnini is a consultant in the digital television industry.

Send questions and comments to: aldo.cugnini@penton.com