Managing Storage System Overhead

For the casual user, knowing the total versus usable storage capacity of their system is not usually of concern. Furthermore, one usually finds that bandwidth or performance tweaks are seldom required once a system is fully configured, especially in a closed system. However, during the design process, as storage, IT and server components are selected, many factors will be addressed that in turn will set the total “cost-to-performance” value of a system.

System overhead is just one of a collection of factors that must be assessed during design. Each subsystem inherently adds a degree of overhead that contributes to the overall performance equation. Overhead effects apply to disk arrays, compression formats, file transfer times and user factors such as the number of concurrent streams, the number of transcoding operations per unit of time, and the aggregate number of services to be handled at peak and idle periods.

MAXIMUM PERFORMANCE AT MINIMUM COST

Providers of videoserver, editorial, MAM and storage systems are keenly aware of what their systems can achieve under various service models. When these systems were supplied by a single vendor, performance was governed by what was essentially a “closed” or “proprietary” system. With the emergence of modern workflows, overall system capacity, control and performance continue to be challenged as users strive to achieve maximum performance at minimum cost.

Today, users often must rely on third-party products that become secondary toolsets in order to reach a complete solution. These ancillary components attempt to fill the gaps in compression codecs, storage bandwidth or capacity requirements, functionality and more. While some solution providers will achieve the 90th percentile in “features and functions,” end users may still find they need additional functionality that the primary solution provider cannot deliver. This is where selecting the right combinations will make all the difference in performance, features and user functionality, a topic well outside the boundaries of this month’s installment.

SYSTEMIZATION

In recent issues we’ve explored architectures and design specifications for entire systems. We’ve not yet looked much at subsystem components, which are generally left to the system architect to define. To gain more perspective on what systemization means, let’s first look at how some of the more typical disk drive arrays may be configured.

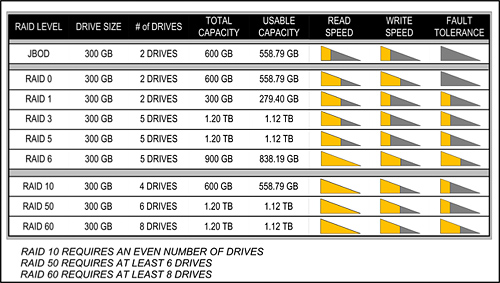

Starting with a typical RAID 5 configuration of five 300 GB drives as a striped set with parity, the total array capacity becomes 1.2 TB and the functional capacity of the striped set becomes 1.12 TB. RAID 5 requires a minimum of three drives; if the configuration is reduced to four 300 GB drives, the total array capacity drops to 900 GB and the usable disk space becomes 838.2 GB. In both cases, the fault tolerance is one disk, meaning that if a single drive fails, the system can rebuild; but if two fail, the data in the array is lost.
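The RAID 5 arithmetic can be sketched in a few lines of Python. The article does not say how its usable figures are derived, so the conversion factor below is an assumption: it treats the gap between "total" and "usable" as the difference between decimal gigabytes (10^9 bytes) and the binary units many operating systems report, which happens to reproduce the numbers quoted. The function name `raid5_capacity` is hypothetical.

```python
# Assumption: drive vendors count 1 GB as 10**9 bytes, while the reported
# usable figure is expressed in units of 2**30 bytes.
DECIMAL_TO_BINARY = 10**9 / 2**30  # about 0.9313


def raid5_capacity(drive_count, drive_gb):
    """Return (array capacity, reported usable capacity) in GB for RAID 5."""
    if drive_count < 3:
        raise ValueError("RAID 5 requires at least three drives")
    # One drive's worth of space across the set is consumed by parity.
    array_gb = (drive_count - 1) * drive_gb
    return array_gb, array_gb * DECIMAL_TO_BINARY


for count in (5, 4):
    array_gb, usable_gb = raid5_capacity(count, 300)
    print(f"{count} x 300 GB: array {array_gb} GB, usable {usable_gb:.1f} GB")
# 5 x 300 GB: array 1200 GB, usable 1117.6 GB
# 4 x 300 GB: array 900 GB, usable 838.2 GB
```

File system metadata and controller reservations, which vary by product, would reduce the real figure further and are not modeled here.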

If the configuration changes to either RAID 0 (striped set) or RAID 1 (mirrored set), the capacity and fault tolerance also change. Using four 300 GB drives in RAID 0 results in 1.20 TB total capacity with 1.12 TB of usable space. There is no fault tolerance in this mode; if one drive fails, all the data is lost. Bandwidth (i.e., throughput), by contrast, generally increases because reads and writes are spread across all the drives in the stripe. When using RAID 1 (mirroring), each drive would have to be increased to 1 TB in order to reach similar capacity. With two drives, each containing an identical image of the data, the total capacity is 1.00 TB, with usable disk space of 0.93 TB. Doubling the drives to 2 TB each yields a usable 1.86 TB. Fault tolerance in a two-drive RAID 1 set is one drive.
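As a rough check on the RAID 0 and RAID 1 figures, the sketch below computes total capacity for each case and applies an assumed decimal-to-binary factor (10^9 / 2^30) to approximate the usable space. Neither the factor nor the helper names come from the article; they are illustrative only.

```python
DECIMAL_TO_BINARY = 10**9 / 2**30  # assumed reporting factor, about 0.9313


def stripe_capacity_gb(drive_count, drive_gb):
    # RAID 0: every drive stores unique data, so nothing is held back.
    return drive_count * drive_gb


def mirror_capacity_gb(drive_gb):
    # RAID 1 (two-drive mirror): both drives hold the same data,
    # so the set offers the capacity of a single drive.
    return drive_gb


cases = {
    "RAID 0, 4 x 300 GB": stripe_capacity_gb(4, 300),
    "RAID 1, 2 x 1 TB": mirror_capacity_gb(1000),
    "RAID 1, 2 x 2 TB": mirror_capacity_gb(2000),
}
for label, total_gb in cases.items():
    usable_tb = total_gb * DECIMAL_TO_BINARY / 1000
    print(f"{label}: total {total_gb} GB, usable {usable_tb:.2f} TB")
# RAID 0, 4 x 300 GB: total 1200 GB, usable 1.12 TB
# RAID 1, 2 x 1 TB: total 1000 GB, usable 0.93 TB
# RAID 1, 2 x 2 TB: total 2000 GB, usable 1.86 TB
```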

Prior to the use of dual parity arrays (i.e., RAID 6), and before storage area networks or large-scale network attached storage, a common practice for storage protection was to use RAID 10, whereby mirrored pairs of drives (RAID 1) are striped together as RAID 0. Using the minimum of four 300 GB drives, the total storage array is now 600 GB with usable capacity of 558.8 GB. Here, the advantage of RAID 10 is a modest performance (bandwidth) increase with higher data storage protection, but at a one-third sacrifice in total usable disk space compared to straight RAID 5 (in a four-drive configuration).
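The one-third figure follows directly from the two capacity formulas. A minimal sketch, with hypothetical function names, comparing four 300 GB drives in each layout:

```python
from fractions import Fraction


def raid10_capacity_gb(drive_count, drive_gb):
    """Capacity of a RAID 10 set: half the drives hold mirror copies."""
    if drive_count < 4 or drive_count % 2:
        raise ValueError("RAID 10 needs an even number of drives, at least four")
    return (drive_count // 2) * drive_gb


def raid5_capacity_gb(drive_count, drive_gb):
    """Capacity of a RAID 5 set: one drive's worth of space holds parity."""
    if drive_count < 3:
        raise ValueError("RAID 5 requires at least three drives")
    return (drive_count - 1) * drive_gb


raid10 = raid10_capacity_gb(4, 300)
raid5 = raid5_capacity_gb(4, 300)
sacrifice = Fraction(raid5 - raid10, raid5)
print(raid10, raid5, sacrifice)  # 600 900 1/3
```

The gap narrows in relative terms only for small sets; as drives are added, RAID 10 stays at 50 percent efficiency while RAID 5 approaches 100 percent.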

Fig. 1: Selected RAID and JBOD configurations and their respective performance and fault tolerance.

In RAID 6 with four 300 GB drives, the 558.8 GB usable capacity is retained and fault tolerance rises to two drives, as compared to a single drive in a RAID 5 set. In RAID 60 (two striped RAID 6 sets), using the minimum required count of eight drives allows the individual drive sizes to be reduced to 150 GB while still achieving the 558.8 GB usable capacity. Well before the introduction of high-capacity drives, this solution would have been seen as a cost advantage, offering a double-protected, multiple-fault-tolerant drive architecture along with higher bandwidth and increased throughput. To put this in perspective, Fig. 1 describes selected RAID and JBOD configurations along with their respective performance and fault tolerance information.
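The RAID 6 and RAID 60 figures can be verified with a short calculation. This is a sketch under two assumptions not stated in the article: that RAID 60 here means two equal RAID 6 sets striped together, and that usable space is the array capacity scaled by a decimal-to-binary factor of 10^9 / 2^30. The function names are hypothetical.

```python
DECIMAL_TO_BINARY = 10**9 / 2**30  # assumed reporting factor, about 0.9313


def raid6_capacity_gb(drive_count, drive_gb):
    """RAID 6 gives up two drives' worth of space to dual parity."""
    if drive_count < 4:
        raise ValueError("RAID 6 requires at least four drives")
    return (drive_count - 2) * drive_gb


def raid60_capacity_gb(drive_count, drive_gb, sets=2):
    """RAID 60 modeled as equal RAID 6 sets striped together."""
    per_set, remainder = divmod(drive_count, sets)
    if remainder:
        raise ValueError("drives must divide evenly across the RAID 6 sets")
    return sets * raid6_capacity_gb(per_set, drive_gb)


for label, array_gb in (
    ("RAID 6, 4 x 300 GB", raid6_capacity_gb(4, 300)),
    ("RAID 60, 8 x 150 GB", raid60_capacity_gb(8, 150)),
):
    print(f"{label}: array {array_gb} GB, usable {array_gb * DECIMAL_TO_BINARY:.1f} GB")
# RAID 6, 4 x 300 GB: array 600 GB, usable 558.8 GB
# RAID 60, 8 x 150 GB: array 600 GB, usable 558.8 GB
```

Both layouts land on the same capacity, but the RAID 60 set tolerates two failed drives in each of its RAID 6 sets rather than two overall.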

Fortunately, for general users, many of the math headaches are already solved by the videoserver or storage providers. However, if you’re building your own storage solution using commercial off-the-shelf hardware and controllers, resolving these performance and overhead issues can rapidly become complex or confusing from both a cost and a performance perspective.

Karl Paulsen, CPBE, is a SMPTE Fellow and senior technologist at Diversified Systems. Read more about other storage topics in his recent book “Moving Media Storage Technologies.” Contact Karl at kpaulsen@divsystems.com.

Karl Paulsen recently retired as a CTO and has regularly contributed to TV Tech on topics related to media, networking, workflow, cloud and systemization for the media and entertainment industry. He is a SMPTE Fellow with more than 50 years of engineering and managerial experience in commercial TV and radio broadcasting. For over 25 years he has written on featured topics in TV Tech magazine—penning the magazine’s “Storage and Media Technologies” and “Cloudspotter’s Journal” columns.