DialNorm 101


Here's the deal. Alert readers know we've got audio level problems in TV land. I've written about it, and so have lots of others. The problem is simple and obvious: audio levels vary widely from channel to channel and from time to time on any given channel, thereby unduly annoying viewers. There's more, but this is the gist of it.

How bad is the problem? I informally measured an 18 dB range over 100 channels from my friendly local cable provider (Charter Communications). Michael Guthrie of Harmonic, Inc., has measured a +/- 15 dB range. Jeffrey Riedmiller, Steve Lyman and Charles Robinson of Dolby Laboratories have measured a 16 dB range.

I think a 3 dB range would be excellent performance and 6 dB would be satisfactory, so the ranges I've cited are, by comparison, really quite bad. Jeffrey Riedmiller thinks they are getting worse (since digital and analog services often co-exist on today's cable systems). Michael Guthrie thinks they may converge sometime in the indefinite future.

To the extent that audio matters in television, complaints about unsatisfactory loudness top the list of concerns. Why do we have this problem? The problem is at once complex and simple. It's complex in that we have a wide array of different sources, as well as a complicated and multifaceted delivery system. It's simple in that the goal (to get all dialogue to sound approximately equally loud in listeners' homes) is actually a fairly straightforward task.

And here's where Dolby Labs enters the scene, with metadata, specifically the control signal called dialnorm (which stands for dialog normalization; you know all this, right?). Dialnorm makes it possible for us to fairly easily achieve consistent dialogue levels at the outputs of decoders. All we've got to do, collectively speaking, is understand the process, make the correct moves, and it's done. Voila!

HERE'S THE STRAIGHT SKINNY

So, what are those correct moves? What are we supposed to do? Equally important, what are we not supposed to do? What can each of us do, in our audio work, to get these things right?

First, think about actual dialogue level: that's the ongoing average level of people talking on (and off) screen. According to the Dolby specification for metadata, the target level for such dialogue within digital broadcasts is -31 dBFS Leq(A) for a decoder operating in Line mode and -20 dBFS Leq(A) for a decoder operating in RF mode. Meanwhile, the target dialogue level for analog NTSC television broadcasts is 17 dB below 100 percent modulation (25 kHz peak deviation). Don't worry, for the moment, about this apparent discrepancy; we'll talk about it next month. The reasoning behind such a target level is solid, and we won't discuss it here.

The approved way to measure such a level is, first off, to make a so-called Leq measurement (which is a power-based history of level, integrated over time) of the dialogue, A-weighted. If you don't have the gear to do an Leq measurement, you're going to have to estimate the ongoing A-weighted average (not peak) level while the talent is talking and nothing else is drowning them out. Got that? Measure the dialogue level, but not the gunshots or the car crash. What could be simpler?
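To make "power-based history of level, integrated over time" a little more concrete, here is a minimal sketch of that kind of average. It rests on several assumptions that are mine, not Dolby's: the function name `leq_db` is hypothetical, samples are floats between -1.0 and 1.0, 0 dB corresponds to an RMS value of 1.0 (some meters reference a full-scale sine wave instead, which reads about 3 dB higher), and the A-weighting filter is left out entirely.

```python
import math

def leq_db(samples):
    """Power-based average level of a block of samples, in dB.

    Assumptions (not from the article): samples are floats in [-1.0, 1.0],
    0 dB corresponds to an RMS value of 1.0, and no A-weighting is applied.
    """
    if not samples:
        raise ValueError("need at least one sample")
    # Average the power (squared amplitude), not the dB readings.
    mean_square = sum(s * s for s in samples) / len(samples)
    if mean_square == 0.0:
        return float("-inf")  # digital silence
    return 10.0 * math.log10(mean_square)

# Half the time at amplitude 0.1 (-20 dB), half at 0.01 (-40 dB).
quiet_and_loud = [0.1] * 500 + [0.01] * 500
print(round(leq_db(quiet_and_loud), 1))
```

Because the average is taken over power, the result for that mixed block lands near -23 dB rather than the -30 dB you would get by averaging the two dB readings. The louder passages dominate, which is why it matters to measure only the dialogue.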

Let's suppose the dialogue level you measure turns out to be -19 dBFS Leq(A) (which is 12 dB above the target level of -31 dBFS when the decoder is operating in Line mode). When this is encoded into Dolby Digital (AC-3), you simply enter a dialnorm value of -19 dBFS into the encoder. Assuming nobody changes that value as it travels down the distribution path (and cable headends have no method to do this), when the Dolby Digital (AC-3) is decoded at the set-top box for the consumer, the dialnorm value of -19 dBFS will instruct the set-top box to attenuate the signal by 12 dB, to -31 dBFS (our target dialogue level for Line mode operation). Voila, your dialogue is at the "correct" level, which is to say that it will be at the same level as everybody else's dialogue, at least for those who also measured their dialogue levels correctly and then entered that value into the dialnorm setting.
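The arithmetic in that example is simple enough to sketch in a few lines. This is only an illustration of the Line mode case described above; the function name `line_mode_attenuation_db` is hypothetical, and the range check reflects my understanding that dialnorm values run from -1 to -31.

```python
TARGET_LINE_MODE_DBFS = -31  # Line mode target dialogue level cited above

def line_mode_attenuation_db(dialnorm_dbfs):
    """Attenuation (in dB) a Line mode decoder applies for a dialnorm value.

    Hypothetical helper for illustration; assumes dialnorm is an integer
    between -31 and -1.
    """
    if not -31 <= dialnorm_dbfs <= -1:
        raise ValueError("dialnorm is assumed to lie between -31 and -1")
    return dialnorm_dbfs - TARGET_LINE_MODE_DBFS

measured_dialogue = -19                       # dBFS Leq(A), from the example
attenuation = line_mode_attenuation_db(-19)   # 12 dB
decoded_level = measured_dialogue - attenuation
print(attenuation, decoded_level)             # prints: 12 -31
```

Note that a dialnorm of -31 yields no attenuation at all, so dialogue already sitting at the target level passes through untouched.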

Now, when Dolby ships one of its encoders (such as the DP569), the "default" dialnorm setting is -27 dBFS. Interestingly, when Jeffrey Riedmiller and his associates at Dolby did a study of dialnorm settings provided by various digital services available in the San Francisco Bay Area, they found that all 13 of the digital services they studied had an indicated dialnorm value of -27 dBFS, while only one of the services had an actual dialogue level of -27 dBFS. This suggests, quite convincingly, that we are all just leaving our dialnorm settings in the default position.

And that, campers, is what not to do. Don't just leave the dialnorm setting at the default level in the forlorn hope that if you don't touch it, it won't bite you! Let me try to explain why.

In the good old analog days, when we made a tape recording, we usually indicated on the tape box the reference level used to record, as in "+3 dB re 250 nanoWebers/meter fluxivity." Remember those days? When somebody else received the tape, they read the box legend and, if they were really alert, set their levels accordingly, so that a "+3 re 250 nanoWebers/meter" level would generate a 0 VU meter deflection on their tape deck. Fine and dandy.

Now, imagine a tape box that comes pre-stamped: "+3 dB re 250 nanoWebers/meter fluxivity." Suppose you record at your beloved 185 nanoWebers/meter (oh, the nostalgia of it all!), and stuff it in the box and send it off, blithely ignoring the printed legend. Well, your tape is gonna play back about 6 dB too soft (185 nanoWebers/meter is roughly 3 dB below 250 nanoWebers/meter, which is 3 dB below the box reference).
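For anyone who wants to check the tape-box arithmetic, here is a small sketch. It assumes fluxivity ratios convert to decibels with the usual 20 log10 rule for amplitude-like quantities; the helper name `db_ratio` is hypothetical.

```python
import math

def db_ratio(a, b):
    """Level of a relative to b in dB, using the 20*log10 amplitude rule."""
    return 20.0 * math.log10(a / b)

recorded = 185.0                             # nWb/m actually put on tape
box_reference = 250.0 * 10 ** (3.0 / 20.0)   # "+3 dB re 250 nWb/m"

step_down = db_ratio(250.0, recorded)        # 185 relative to 250
shortfall = db_ratio(box_reference, recorded)
print(round(step_down, 1), round(shortfall, 1))
```

Under that assumption the step from 250 down to 185 works out to a bit over 2.6 dB, and the total shortfall to about 5.6 dB, which the round figures in the text call 3 dB and 6 dB.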

In our brave new world, we're no longer using sine waves calibrated to mysterious magnetic fluxivities (just what is a nanoWeber, exactly?). Instead, we're using measured dialogue level calibrated to a "target level." Further, that box legend of old is now an automated control signal that sets the decoded dialogue level at the set-top box outputs for our beloved consumer. It's bad enough if you don't measure your dialogue level; it's even worse if you ignore the default dialnorm value that came set in the encoder, because that value sets the level for the consumer.

Taking the earlier example, if your dialogue is actually -19 dBFS Leq(A) and the dialnorm setting is left at the default -27 dBFS, then at the set-top box outputs the signal will be attenuated by 4 dB and your dialogue will be at -23 dBFS Leq(A), 8 dB above the target level.

Does this make sense? Do you begin to get the idea?

HERE'S THE TOOL YOU NEED

So how do you cope with this? You need a way to measure dialogue level (preferably one that does Leq(A)). It would help if you also had a device that could tell you what the dialnorm value is for an existing Dolby Digital (AC-3) signal; that allows you to compare this value with the actual (i.e., measured) dialogue level to verify whether or not they agree. This is especially useful if you are involved in a pass-through operation such as a cable headend.

Dolby makes just such a box, called the LM100. Among its features is an algorithm that identifies speech, as opposed to other content, and switches the Leq measurement on and off so that only speech is being measured. It will measure the Leq(A) of any digital (i.e., Dolby Digital (AC-3), Dolby E or two-channel PCM) or analog signal (baseband or RF), and it will also read the dialnorm value for any Dolby Digital (AC-3) or Dolby E signal. It will even generate an alarm, should you desire this, when the dialnorm and the measured Leq(A) of the audio diverge. Cool!
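The comparison behind such an alarm can be sketched as follows. To be clear, this is not the LM100's algorithm: the function name `dialnorm_check` and the 2 dB tolerance are my own placeholders, chosen only to illustrate the idea of comparing measured Leq(A) against the indicated dialnorm.

```python
def dialnorm_check(measured_leq_dbfs, dialnorm_dbfs, tolerance_db=2.0):
    """Compare measured dialogue level with the indicated dialnorm value.

    Returns (offset_db, alarm). offset_db is how far the decoded dialogue
    will sit above (+) or below (-) the target level; alarm is True when
    the offset exceeds the tolerance. The tolerance is a hypothetical
    placeholder, not a figure from Dolby.
    """
    offset_db = measured_leq_dbfs - dialnorm_dbfs
    return offset_db, abs(offset_db) > tolerance_db

# Dialogue at -19 dBFS Leq(A) with dialnorm left at the -27 default.
print(dialnorm_check(-19, -27))   # prints: (8, True)

# Same dialogue with dialnorm set to the measured value.
print(dialnorm_check(-19, -19))   # prints: (0, False)
```

The offset does not depend on the decoder's target level: whatever the target, dialogue comes out wrong by exactly the gap between the measured level and the dialnorm value, which is the 8 dB from the earlier example.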

Next month we'll explore the mysteries of the set-top box a little more fully and talk about how you need to think about RF and Line modes, as well as Dynamic Range Control.

Thanks for listening.

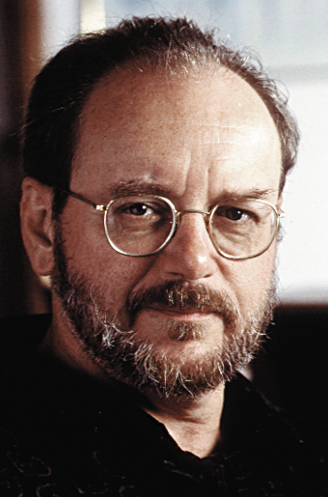

Dave Moulton would like to thank Jeffrey Riedmiller of Dolby Laboratories for his assistance with this article.
