Storage Strategy by Application

Selecting appropriate storage components requires understanding the entire media ecosystem

Configuring an end-to-end system storage solution for a new facility can be challenging. Systems were once built on the theory that “storage tiers” were the key dividing elements; today, the challenges include managing data movement between those tiers and tailoring storage types to their best uses and applications.

Storage tier assignment was a model that separated “continuously active” storage from “not-so-active” storage, i.e., fast vs. slow or bulk storage. Legacy tiered designs continually pushed data among storage tiers based on the activities known at the time the system was designed. As storage capacity requirements increased, more drives were “thrown” at the system. Mixing drives of dissimilar capacities created performance differentials, making performance increasingly unpredictable.
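To make the legacy model concrete, here is a minimal sketch of static tier assignment. It assumes a hypothetical rule that classifies each asset by how often it was accessed in the last 30 days; the thresholds, tier names, and the `Asset` type are illustrative assumptions, not values from any product. Because the rules are fixed at design time, they cannot adapt when activity patterns change, which is the weakness described above.

```python
from dataclasses import dataclass

# Hypothetical thresholds, fixed when the system is designed (illustrative only).
HOT_THRESHOLD = 20   # accesses in the last 30 days
WARM_THRESHOLD = 3


@dataclass
class Asset:
    name: str
    accesses_last_30_days: int


def assign_tier(asset: Asset) -> str:
    """Static rule: map recent activity to fast, slow, or bulk storage."""
    if asset.accesses_last_30_days >= HOT_THRESHOLD:
        return "fast"   # "continuously active" content
    if asset.accesses_last_30_days >= WARM_THRESHOLD:
        return "slow"   # occasionally active content
    return "bulk"       # "not-so-active" content


for a in [Asset("promo_cut", 45), Asset("interview_raw", 5), Asset("archive_1998", 0)]:
    print(a.name, assign_tier(a))
```

Modern software-managed systems replace these fixed thresholds with continuously updated activity predictions.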


As “cloud” and other sophisticated storage architectures came into play, a different science emerged. Previously dedicated “hierarchical architectures” evolved to leverage software that manages needs, capacities, performance, and timing. By carefully modeling with best-of-breed data storage products, new solutions could be built around complex data-activity prediction and automation. No longer is it practical to use a single storage element, or the cloud alone, to support modern media production activities.

Managing by Type to Fit the Need

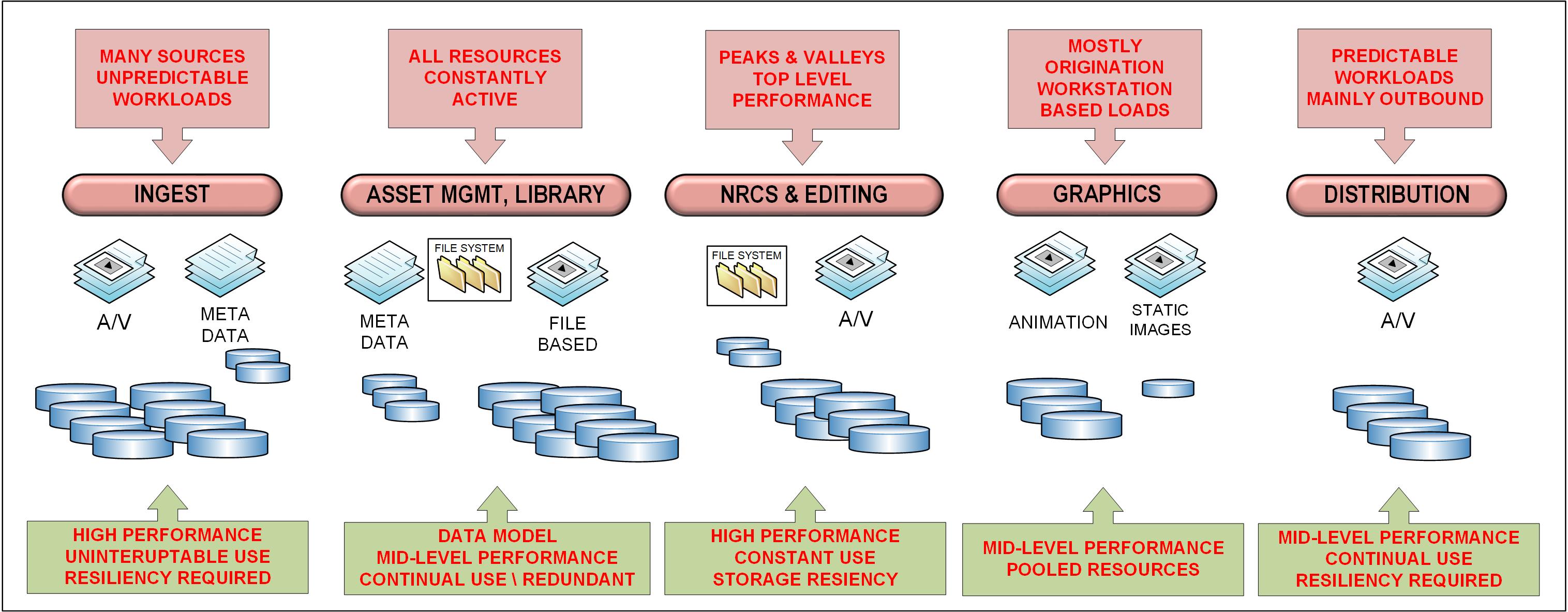

Highly sophisticated structures in today’s media asset management solutions allow storage administrators to apply different storage sets across groups of individual topologies and workflows (Fig. 1). Software-driven approaches, coupled with fast flash memory and high-speed interfaces such as NVMe and PCIe, now allow the administrator to select workflow segments and marry them into independent systems configured for specific workflows across the enterprise.

Add the growing share of media technology that is moving to the cloud, and hybrid choices open up wider possibilities across on-premises and cloud workflows.

The cloud supports ingest operations that funnel content in from many geographic locations. Depending on the need, placing accumulating data on a cloud platform offers several extras, including long-term storage of content in its raw form and the ability to consolidate content into a single virtualized allocation.

Knowing and defining the steps required to produce airable content is essential to deciding whether data is stored on-prem, in the cloud, or both. Deciding when and why to place particular data in one or more locations, weighed against the efficiency and performance demands of specific or immediate workflows, is what defines “managing by type to fit the need.”
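One way to picture “managing by type” is as a per-step placement rule. The sketch below is a hypothetical illustration, not a vendor algorithm: the `WorkflowStep` fields and the rules are assumptions drawn from the trade-offs in this article (on-prem for immediate fast access; cloud for multi-site ingest and long-term raw retention), and the on-prem fallback for steps matching neither rule is an arbitrary choice.

```python
from dataclasses import dataclass


@dataclass
class WorkflowStep:
    name: str
    needs_immediate_fast_access: bool  # e.g., live playout or active editorial
    multi_site_ingest: bool            # content arrives from many locations
    keep_raw_long_term: bool           # retain raw originals for later use


def place(step: WorkflowStep) -> list[str]:
    """Hypothetical rule set returning where a step's data should live."""
    locations = set()
    if step.needs_immediate_fast_access:
        locations.add("on-prem")
    if step.multi_site_ingest or step.keep_raw_long_term:
        locations.add("cloud")
    # Assumed default: keep data local when no rule applies.
    return sorted(locations) or ["on-prem"]


steps = [
    WorkflowStep("breaking_news_playout", True, True, True),
    WorkflowStep("field_ingest", False, True, False),
    WorkflowStep("promo_edit", True, False, False),
]
for s in steps:
    print(s.name, place(s))
```

A step that needs both fast local access and multi-site ingest ends up in both locations, which is the hybrid case described above.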


Differing Storage Structures and Elements

Organizations with varying requirements may need immediate, fast access to content at speeds a “cloud-only” architecture cannot deliver; conversely, some workflows may not need the additional features, functionality, or capabilities offered by a cloud solution.

For media organizations, a workflow might simply push “raw” content straight into editorial production. When facing a breaking or live news requirement, an organization may need to push the new content straight to playout while other activities (including editorial) occur in parallel.

Often workflows must support multiple parallel processes, repetitive alteration, and documentary-like preparation for later specials or features. In “live” production, raw content often needs at the very least a “technical preview” before being aired “live.” Producer approval or other reviews may also be required prior to pushing straight to air.

Proxy generation, performed while the data is simultaneously moved from an ingest cache to playout services, could gain efficiency from using different storage platforms. Some content may need only “tops-and-tails” segmentation, while other content may require only a mid-sequence removal or an audio overtone.

These “fast vs. slow” example workflows illustrate the contrast. Quickly taking in content, transforming it to an “airable” format, and assembling it for purpose demands a fast delivery process, whereas lengthier or more conventional steps, such as tagging, shifting into editorial cache, or moving into near-term storage for other production purposes, can proceed at a slower pace.

Attention to Metadata

Tagging or metadata association for any number of workflows or production/legal mandates is not uncommon. Metadata collection covers activities ranging from automated tagging to scene-change detection to detailed content analysis or evaluation with respect to people, location, or purpose. These variations benefit further from being placed on different types of storage according to the degree of effort each workflow requires.

Such differentiation by activity helps balance load and may mitigate “bottlenecking,” which drags down other processes that involve multiple reads/writes or transfers between processing platforms. Other approaches, such as Kubernetes-orchestrated microservices architectures, are being combined with AI techniques to improve performance and speed up operations.

Depending on the workflow, data may be repositioned to fast disk arrays, using whichever of the available storage targets best suits the overall system. Storage that can materialize the information into a particular format under the management of a media asset management (MAM) system, and send it to the cloud or straight to air ahead of editorial, may also be sought. Thus, we recognize that “not all storage is created equal.”

Monitor Proactively

High-availability storage systems need to be “watched” to catch bottlenecks, over-provisioning, or other random events before they cause problems. Administrators who manage storage sets, select the best data paths, and run file-based workflow activities proactively keep an eye on storage volumes, overall system bandwidth, and the management of those processes.

Some less intense or non-immediate activities take more time to complete and are best scheduled for hours when they will not compete with commands that need higher performance. For example, if the storage volumes used for editing see far more read/write activity than the simple reads needed for transfers, move those less important transfer activities to overnight periods when less editing occurs.
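The deferral idea can be sketched as a simple scheduling rule. This is a minimal illustration under stated assumptions: the 22:00 to 06:00 “off hours” window and the `start_time` helper are hypothetical, and a real facility would derive its window from observed editing load.

```python
from datetime import datetime

# Hypothetical low-editing window; it wraps past midnight.
OFF_HOURS_START = 22  # 10 p.m.
OFF_HOURS_END = 6     # 6 a.m.


def in_off_hours(t: datetime) -> bool:
    """True if t falls inside the assumed overnight window."""
    return t.hour >= OFF_HOURS_START or t.hour < OFF_HOURS_END


def start_time(high_priority: bool, now: datetime) -> datetime:
    """Run urgent work now; defer less important work to tonight's window."""
    if high_priority or in_off_hours(now):
        return now
    # Here 6 <= now.hour < 22, so tonight's window starts later the same day.
    return now.replace(hour=OFF_HOURS_START, minute=0, second=0, microsecond=0)


now = datetime(2024, 5, 1, 14, 30)
print(start_time(high_priority=True, now=now))   # editorial read/write runs immediately
print(start_time(high_priority=False, now=now))  # bulk transfer deferred to 22:00
```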

Administrators must routinely watch the logs to ensure that uptime, system loading, and security are handled proactively.

Suggestions include scheduling upgrades for times when high availability is not required, and establishing a hierarchy of notifications and reporting to identify when peak performance periods need full accessibility. If high-availability work periods are monitored and reported proactively, most maintenance can be performed without downtime, at a flexible and convenient time on a routine schedule.
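A hierarchy of notifications can be as simple as an escalation ladder keyed to utilization. The sketch below assumes hypothetical thresholds (70, 85, and 95 percent), audiences, and metric names; none of these come from a specific monitoring product.

```python
# Hypothetical escalation ladder: (threshold, level, who is notified), highest first.
LEVELS = [
    (0.95, "critical", "on-call engineer and operations manager"),
    (0.85, "warning", "storage administrator"),
    (0.70, "info", "daily report"),
]


def classify(utilization: float):
    """Return (level, audience) for the highest threshold reached, or None."""
    for threshold, level, audience in LEVELS:
        if utilization >= threshold:
            return level, audience
    return None


def check(metrics: dict[str, float]) -> list[tuple[str, str, str]]:
    """Turn utilization readings (0.0 to 1.0) into routed notifications."""
    alerts = []
    for name, value in sorted(metrics.items()):
        result = classify(value)
        if result is not None:
            level, audience = result
            alerts.append((name, level, audience))
    return alerts


readings = {"edit_volume_capacity": 0.91, "network_bandwidth": 0.97, "archive_capacity": 0.40}
for alert in check(readings):
    print(alert)
```

Routing lower levels to a periodic report rather than a page keeps attention on the readings that signal a peak period needing full accessibility.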

Extreme Hyper-scalability

Selecting the appropriate storage components requires understanding the entire media ecosystem. Workflows vary, so storage system administrators must deploy components sufficient in scale to handle average workflows while still accommodating “hyper-activities,” enabling the next level of availability as necessary. Flexibility and capacity overhead for burst periods need consideration across the various workflows.

Speed and throughput are usually expected during “peak periods” but not necessarily at all times. When a workflow pushes the envelope or approaches the edge of your storage infrastructure’s routine capabilities, your storage architecture should be expected to “spin up” to a higher level to address short-term needs. Shifting when those activities occur (e.g., to “off hours”) may reduce the potential for bottlenecks during peak periods.

Some vendors’ systems can autonomously elevate performance by employing “capacity module” extensions, usually configured using flash storage in an NVMe-over-PCIe architecture. These modules need not be deployed across all storage subsystems and are likely reserved for your “highest-performance-level” activities. Plan for potential massive-scalability needs, also known as “scale out and scale up”; this extra horsepower may be necessary for one-offs or major events (e.g., the Super Bowl, the Final Four, or breaking news).
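Sizing burst capacity for an event comes down to simple arithmetic. The sketch below is a hypothetical planning aid, not a vendor sizing tool: it assumes throughput-based “capacity modules” of a fixed size and a chosen headroom percentage. With a 20 GB/s baseline, a 30 GB/s event peak, and 20% headroom, the requirement is 36 GB/s, a 16 GB/s deficit, so 5 GB/s modules round up to four.

```python
def modules_needed(baseline_gbps: int, peak_demand_gbps: int,
                   module_gbps: int, headroom_pct: int = 20) -> int:
    """Number of hypothetical burst modules needed to cover peak demand plus headroom."""
    # Work in hundredths of GB/s so the arithmetic stays exact with integers.
    required_x100 = peak_demand_gbps * (100 + headroom_pct)
    deficit_x100 = required_x100 - baseline_gbps * 100
    if deficit_x100 <= 0:
        return 0  # routine capacity already covers the peak
    return -(-deficit_x100 // (module_gbps * 100))  # ceiling division


print(modules_needed(baseline_gbps=20, peak_demand_gbps=30, module_gbps=5))  # major event
print(modules_needed(baseline_gbps=20, peak_demand_gbps=15, module_gbps=5))  # routine day
```

Because the modules serve only the highest-performance activities, the result applies to that subsystem alone rather than to the whole storage estate.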

We’ve only scratched the surface of modern intelligent storage management and its details. When considering an upgrade or a new storage platform for a current or greenfield facility, be sure to note the competitive and continual changes occurring in the storage-for-media sector. You may be surprised how much the many new on-prem storage additions are changing capabilities essential to media production.

Karl Paulsen recently retired as a CTO and has regularly contributed to TV Tech on topics related to media, networking, workflow, cloud and systemization for the media and entertainment industry. He is a SMPTE Fellow with more than 50 years of engineering and managerial experience in commercial TV and radio broadcasting. For over 25 years he has written on featured topics in TV Tech magazine—penning the magazine’s “Storage and Media Technologies” and “Cloudspotter’s Journal” columns.