Controlled Ascent: Practical Media Migration from LTO to the Cloud for Broadcast & Production

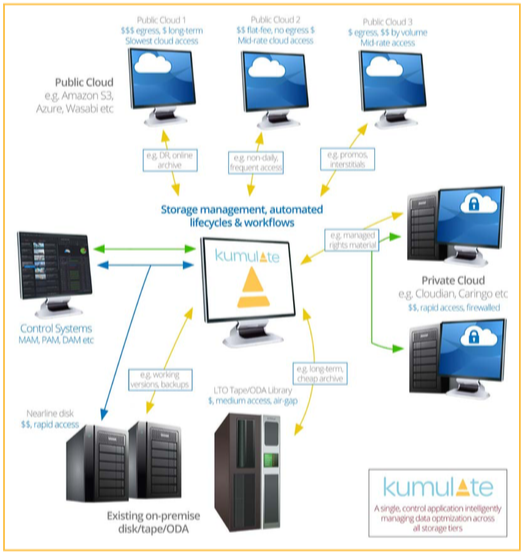

Intelligent Storage Management platforms go beyond simple management of multiple tape and disk storage

Ongoing technological advances and changes to operational environments mean that most M&E organizations have considered, or will consider, moving some or all of their on-prem content to private or public cloud storage. The decision requires a close look at cost, features, integration, performance and timing, all of which vary from business to business. To start, let’s examine the different types of deployment.

- Public cloud only: immediately attractive for start-ups and green-field deployments, offering responsive performance and distribution.

- Private cloud only: typically attractive to enterprises that generate high volumes of content and are able to build appropriately scaled private storage.

- Public + private cloud: private for control, public for burst processing. Also attractive for disaster recovery and integration with external partners.

- Hybrid public/on-prem: offers a phased evolution to the cloud. A hybrid model is required, at least temporarily, if users need uninterrupted access to data during the migration.

- Multicloud hybrid: allows content to be managed in the most cost-effective tier via intelligent storage management.

The suitability of each of these deployment models depends on the type of content you have and your specific business model. A deeper dive is required to make an appropriate choice.

CONSIDERATIONS

Cost and performance modelling goes far beyond the simple monthly cost of storing a TB of content. Content normalization, asset and metadata storage, deduplication, consolidation, proxies, AI analysis and metadata services should all be considered, as should cloud ingress and egress costs.

How much content and storage do you have?

Determine how many assets you currently have on premises and their specific file sizes. Count the number of tapes and the capacity of each (not every tape will be full, but it’s a useful guideline). Don’t forget tapes on shelves or in storage.
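As a rough first pass, the tape count can be turned into an upper-bound capacity figure. The sketch below uses the published native (uncompressed) LTO cartridge capacities; the inventory is hypothetical, and `fill_ratio` is an assumed parameter standing in for whatever average fill level you measure by sampling tapes.

```python
from __future__ import annotations

# Native (uncompressed) capacity of one cartridge, in TB, per LTO generation.
LTO_NATIVE_TB = {5: 1.5, 6: 2.5, 7: 6.0, 8: 12.0, 9: 18.0}


def estimate_tape_tb(inventory: dict[int, int], fill_ratio: float = 1.0) -> float:
    """Estimate data held on tape from a {generation: cartridge_count} inventory.

    With fill_ratio=1.0 the result is an upper bound, since not every tape is
    full; pass a sampled average fill ratio (0-1] for a working estimate.
    """
    if not 0.0 < fill_ratio <= 1.0:
        raise ValueError("fill_ratio must be in (0, 1]")
    total = 0.0
    for generation, count in inventory.items():
        if generation not in LTO_NATIVE_TB:
            raise ValueError(f"no capacity figure for LTO-{generation}")
        total += LTO_NATIVE_TB[generation] * count
    return total * fill_ratio


# Hypothetical inventory, including cartridges on shelves and in storage.
inventory = {6: 1200, 7: 800, 8: 150}
print(estimate_tape_tb(inventory))        # upper bound, in TB
print(estimate_tape_tb(inventory, 0.7))   # assuming tapes are ~70% full on average
```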

Can you deduplicate?

Migrating only unique content reduces cost and increases speed. Deduplicating in advance also allows better planning of cloud storage capacity and cost. You can deduplicate on file name and size or, more accurately, on file size and hash.
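The size-and-hash approach can be made cheap by grouping on size first and hashing only files whose size collides with another file. A minimal sketch using Python's standard `hashlib`; the choice of SHA-256 and the file names are assumptions for illustration.

```python
from __future__ import annotations

import hashlib
import tempfile
from collections import defaultdict
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in 1 MiB chunks so large media files are not loaded whole."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_duplicates(paths: list[Path]) -> list[list[Path]]:
    """Return groups of byte-identical files (same size and same SHA-256)."""
    # Pass 1: group by size. Files of different sizes cannot be identical,
    # and reading a size from metadata is far cheaper than hashing content.
    by_size: dict[int, list[Path]] = defaultdict(list)
    for path in paths:
        by_size[path.stat().st_size].append(path)

    # Pass 2: hash only files that share their size with another file.
    groups: list[list[Path]] = []
    for same_size in by_size.values():
        if len(same_size) < 2:
            continue
        by_hash: dict[str, list[Path]] = defaultdict(list)
        for path in same_size:
            by_hash[sha256_of(path)].append(path)
        groups.extend(group for group in by_hash.values() if len(group) > 1)
    return groups


if __name__ == "__main__":
    # Demo on throwaway files: a.mxf and b.mxf are identical, c.mxf has the
    # same size but different bytes, so only content hashing separates it.
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        for name, data in [("a.mxf", b"essence"), ("b.mxf", b"essence"), ("c.mxf", b"essencf")]:
            (root / name).write_bytes(data)
        for group in find_duplicates(sorted(root.iterdir())):
            print([p.name for p in group])  # ['a.mxf', 'b.mxf']
```

Keeping one copy from each group, and recording the others as references to it, is what reduces the volume sent to the cloud.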

Hardware and networks

Estimate available LTO drives and their versions. Do you have enough drives of each version to manage the migration and service day-to-day operations? You’ll also need the average time each day that a drive is available for migration duties; this may be surprisingly low.
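Checking drive coverage per generation is mechanical once the LTO read-compatibility rules are written down. The sketch encodes the standard rules (LTO-1 through LTO-7 drives read up to two generations back; LTO-8 and LTO-9 read one back) and converts free drive time into a rough calendar estimate. The site inventory, hours per tape and free hours per day are hypothetical inputs.

```python
from __future__ import annotations


def can_read(drive_gen: int, tape_gen: int) -> bool:
    """LTO read compatibility.

    LTO-1 to LTO-7 drives read their own generation and the two before it;
    LTO-8 and LTO-9 drives read only their own generation and the one before.
    """
    if drive_gen >= 8:
        return drive_gen - 1 <= tape_gen <= drive_gen
    return drive_gen - 2 <= tape_gen <= drive_gen


def unreadable_generations(tape_gens: set[int], drive_gens: set[int]) -> set[int]:
    """Tape generations that no available drive can read."""
    return {t for t in tape_gens if not any(can_read(d, t) for d in drive_gens)}


def migration_days(tapes: int, hours_per_tape: float, drives: int,
                   free_hours_per_drive_per_day: float) -> float:
    """Calendar days to read every tape, using only the drive time left
    over after day-to-day operations have been served."""
    daily_drive_hours = drives * free_hours_per_drive_per_day
    if daily_drive_hours <= 0:
        raise ValueError("no drive time available for migration")
    return tapes * hours_per_tape / daily_drive_hours


# Hypothetical site: only LTO-8 and LTO-9 drives, but LTO-6 to LTO-9 tapes.
print(unreadable_generations({6, 7, 8, 9}, {8, 9}))  # {6}
# 2,000 tapes at ~4 h each, 4 drives each free for 6 h/day.
print(round(migration_days(2000, 4.0, 4, 6.0)))      # 333
```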

Available bandwidth to the cloud

How much bandwidth can you get? How much can you afford? It may be faster to physically ship a NAS to a cloud provider; often, however, low availability of LTO drives means that writing to NAS is no faster than moving data over the internet.
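Because the overall rate is capped by the slowest stage, comparing tape-side and network-side throughput shows which one is worth spending money on. A minimal sketch; the 80% network efficiency and the drive figures in the example are assumptions, not measurements.

```python
from __future__ import annotations


def network_tb_per_day(gbps: float, efficiency: float = 0.8) -> float:
    """Usable network throughput in TB/day (decimal TB).

    `efficiency` is an assumed fraction of line rate actually achieved
    after protocol overhead and contention.
    """
    bytes_per_second = gbps * 1e9 * efficiency / 8
    return bytes_per_second * 86_400 / 1e12


def drive_tb_per_day(drives: int, mb_per_second: float, hours_per_day: float) -> float:
    """Tape read throughput in TB/day from drive count, sustained MB/s per
    drive, and hours per day each drive is free for migration."""
    return drives * mb_per_second * 1e6 * hours_per_day * 3600 / 1e12


def bottleneck(gbps: float, drives: int, mb_per_second: float,
               hours_per_day: float) -> tuple[str, float]:
    """Name and TB/day rate of whichever stage limits the migration."""
    net = network_tb_per_day(gbps)
    tape = drive_tb_per_day(drives, mb_per_second, hours_per_day)
    return ("network", net) if net < tape else ("tape drives", tape)


# Hypothetical site: 10 Gbps link, 4 drives at 300 MB/s, each free 6 h/day.
name, rate = bottleneck(10, 4, 300, 6)
print(f"{name}: {rate:.2f} TB/day")  # tape drives: 25.92 TB/day
```

With these figures the drives, not the 10 Gbps link, set the pace, which is exactly the case where a NAS filled from the same drives would not arrive any sooner.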

There are several ways to work around limited LTO drive availability:

- Stop end users from driving the system during the migration. This is not an option for sites that continue to send content to tape daily.

- Rent or buy additional LTO drives. Keep tape/drive compatibility in mind as you add drives.

- Rent or buy a small LTO library and drives, and manually shuffle tapes through it.

- Run migration processes at a lower priority than critical operations. This means that the migration generally takes longer.
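Running migration at a lower priority than critical operations can be modelled as a priority queue in front of the drives. A minimal, non-preemptive sketch; the two priority levels and the job names are assumptions for illustration.

```python
from __future__ import annotations

import heapq
import itertools

# Lower number is served first. Operational requests always outrank migration.
OPERATIONAL = 0
MIGRATION = 1


class DriveQueue:
    """Non-preemptive priority queue for tape-drive jobs.

    A migration job already running on a drive is allowed to finish; the
    priority only decides which job a free drive picks up next.
    """

    def __init__(self) -> None:
        self._heap: list[tuple[int, int, str]] = []
        self._seq = itertools.count()  # FIFO tie-break within one priority

    def submit(self, priority: int, job: str) -> None:
        heapq.heappush(self._heap, (priority, next(self._seq), job))

    def next_job(self) -> str | None:
        if not self._heap:
            return None
        return heapq.heappop(self._heap)[2]


queue = DriveQueue()
queue.submit(MIGRATION, "migrate TAPE0001")
queue.submit(MIGRATION, "migrate TAPE0002")
queue.submit(OPERATIONAL, "restore clip for 6pm news")
print([queue.next_job() for _ in range(3)])
# ['restore clip for 6pm news', 'migrate TAPE0001', 'migrate TAPE0002']
```

The sequence counter keeps migration jobs in submission order, so tapes are still read in the planned sequence whenever operations leave a drive idle.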

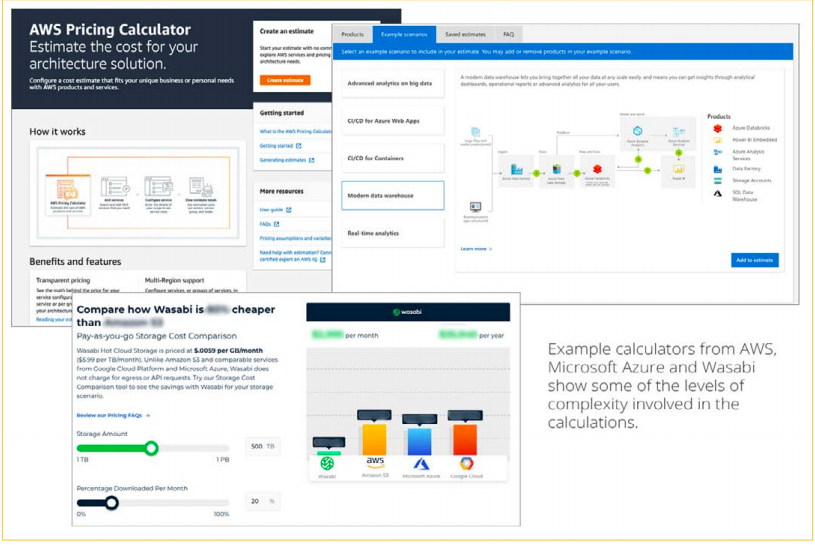

COST AND PERFORMANCE MODELLING OF PUBLIC CLOUDS

As well as the amount of content you wish to migrate to the cloud and any optional processes, you’ll also need to calculate your monthly data ingress and egress rates.

Performance models should consider the number and size of files, number of tapes, transfer speed of LTO drives, tape handling, drive availability, network bandwidth, efficiency of network object transfer processes (e.g. S3) and optional processes (e.g. acceleration).
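These factors can be combined into a simple two-stage throughput model. This is a sketch under stated assumptions: tape reads and cloud uploads run as an overlapping pipeline, per-file overhead is a single averaged figure, any acceleration is folded into `network_efficiency`, and every number in the example is hypothetical rather than a benchmark.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class MigrationProfile:
    total_tb: float               # data to migrate, after deduplication
    file_count: int               # number of objects to upload
    tape_count: int               # cartridges to mount
    drives: int                   # drives assigned to migration
    drive_mb_s: float             # sustained read rate per drive
    drive_hours_per_day: float    # hours/day each drive is free for migration
    handling_min_per_tape: float  # fetch, load, thread and seek per cartridge
    network_gbps: float           # link to the cloud
    network_efficiency: float     # fraction of line rate the transfer tool achieves
    per_file_overhead_s: float    # per-object cost not hidden by concurrency


def stage_days(p: MigrationProfile) -> tuple[float, float]:
    """Return (tape_days, network_days) for the two main pipeline stages."""
    read_hours = p.total_tb * 1e12 / (p.drive_mb_s * 1e6) / 3600
    handling_hours = p.tape_count * p.handling_min_per_tape / 60
    tape_days = (read_hours + handling_hours) / (p.drives * p.drive_hours_per_day)

    transfer_s = p.total_tb * 1e12 * 8 / (p.network_gbps * 1e9 * p.network_efficiency)
    network_days = (transfer_s + p.file_count * p.per_file_overhead_s) / 86_400
    return tape_days, network_days


def estimate_days(p: MigrationProfile) -> float:
    # Reads and uploads are assumed to overlap, so the slower stage dominates.
    return max(stage_days(p))


profile = MigrationProfile(total_tb=90, file_count=100_000, tape_count=30,
                           drives=2, drive_mb_s=250, drive_hours_per_day=7,
                           handling_min_per_tape=10, network_gbps=1,
                           network_efficiency=0.8, per_file_overhead_s=0.1)
print(tuple(round(d, 2) for d in stage_days(profile)))  # (7.5, 10.53)
```

Here the network stage dominates; raising `file_count` while holding `total_tb` fixed shows how many small files (e.g. unwrapped DPX frames) push the estimate up.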

Pricing models should take into account performance, storage, egress and network costs, and any hardware rental. Migrations of different speeds and complexity call for different models, so it is important to iterate on both the performance and the pricing models.
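Iterating on pricing is easier when each option is a named scenario run through the same formula. A minimal sketch, assuming total cost is migration-period network and rental spend plus monthly storage and egress over a fixed horizon; all prices are placeholders, and the model leaves out items such as per-request charges and continued on-prem costs during a longer migration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    name: str
    migration_months: int
    network_usd_per_month: float  # circuit cost while migrating
    rental_usd_per_month: float   # extra LTO drives/library, if rented
    stored_tb: float
    storage_usd_per_tb_month: float
    egress_tb_per_month: float
    egress_usd_per_tb: float


def total_cost(s: Scenario, horizon_months: int) -> float:
    """One-off migration cost plus storage and egress over the horizon."""
    migration = s.migration_months * (s.network_usd_per_month + s.rental_usd_per_month)
    monthly_run = (s.stored_tb * s.storage_usd_per_tb_month
                   + s.egress_tb_per_month * s.egress_usd_per_tb)
    return migration + horizon_months * monthly_run


# Placeholder prices only; substitute quotes from your own providers.
common = dict(stored_tb=1000, storage_usd_per_tb_month=4.0,
              egress_tb_per_month=10, egress_usd_per_tb=50.0)
scenarios = [
    Scenario("fast: rented drives, 10G link", 6, 5000.0, 3000.0, **common),
    Scenario("slow: existing drives, 1G link", 12, 2000.0, 0.0, **common),
]
for s in scenarios:
    print(f"{s.name}: ${total_cost(s, 36):,.0f} over 36 months")
```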

INTELLIGENT STORAGE MANAGEMENT

To manage the migration and ongoing storage, you need a more sophisticated tool than the typical HSM: one that provides a seamless bridge through which all integrated systems can access content, both on-prem and in the cloud, during and after the migration.

Intelligent Storage Management platforms (e.g. Kumulate) go beyond simple management of multiple tape and disk storage. They administer large amounts of content and metadata within a single namespace and orchestrate the services and workflows through which it progresses. They manage conditional workflows and wrap, re-wrap, transcode and publish as required. This not only manages multiple storage locations efficiently and buffers MAMs, PAMs and distribution systems from storage complexity but, crucially, also protects content integrity over time.

ISMs AND POST-MIGRATION DATA INDEPENDENCE

Post-migration, the ISM continues to provide uninterrupted and transparent access to the content. To the user, operation continues as normal, and MAMs, PAMs or other systems can interact with normalized content and metadata without knowledge of storage, formats or wrappers.
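The single-namespace idea can be sketched as a thin lookup layer: client systems ask for a stable asset ID, and the layer decides which physical copy to read. Everything here is hypothetical (class names, backends, tier order); a real ISM such as Kumulate exposes its own interfaces, which this does not attempt to reproduce.

```python
from __future__ import annotations

from typing import Protocol


class Backend(Protocol):
    def read(self, key: str) -> bytes: ...


class DictBackend:
    """Hypothetical stand-in for a real tape, disk or object-store client."""

    def __init__(self, objects: dict[str, bytes]) -> None:
        self.objects = objects

    def read(self, key: str) -> bytes:
        return self.objects[key]  # KeyError if the object is not there


class Namespace:
    """Maps stable asset IDs to physical copies on any number of backends."""

    def __init__(self, backends: dict[str, Backend], preference: list[str]) -> None:
        self.backends = backends
        self.preference = preference  # preferred tier first
        self.locations: dict[str, dict[str, str]] = {}

    def register(self, asset_id: str, backend: str, key: str) -> None:
        self.locations.setdefault(asset_id, {})[backend] = key

    def read(self, asset_id: str) -> bytes:
        copies = self.locations.get(asset_id, {})
        for backend in self.preference:
            if backend in copies:
                try:
                    return self.backends[backend].read(copies[backend])
                except (KeyError, OSError):
                    continue  # copy unavailable: fall back to the next tier
        raise LookupError(f"no readable copy of {asset_id}")


lto = DictBackend({"TAPE0042/000117": b"from tape"})
cloud = DictBackend({"archive/A-1001.mxf": b"from cloud"})
ns = Namespace({"lto": lto, "cloud": cloud}, preference=["cloud", "lto"])
ns.register("A-1001", "lto", "TAPE0042/000117")
ns.register("A-1001", "cloud", "archive/A-1001.mxf")
print(ns.read("A-1001"))  # b'from cloud'
```

A MAM calling `ns.read("A-1001")` gets the same answer whether the asset is still on tape, already in the cloud, or both, which is the independence described above.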

DATA INTEGRITY AND EVOLUTION MANAGEMENT

Each time content is moved, the potential for data corruption increases. Kumulate and other ISMs regularly check the integrity of files in all cloud object stores against hashes stored independently in the ISM. This ensures a bit-for-bit match throughout the content lifecycle.
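A fixity audit of this kind reduces to re-hashing each stored object and comparing the result with the digest recorded independently at ingest. The sketch assumes SHA-256 and a caller-supplied `fetch` function that streams an object's bytes; neither is specified by the source, and a real object-store client would replace the in-memory dictionary used here.

```python
from __future__ import annotations

import hashlib
from typing import Callable, Iterable


def audit(recorded: dict[str, str],
          fetch: Callable[[str], Iterable[bytes]]) -> dict[str, str]:
    """Re-hash every object and compare with the independently stored SHA-256.

    `recorded` maps object key -> hex digest captured at ingest (held by the
    ISM, not by the object store). `fetch` streams an object's bytes in
    chunks and raises KeyError if the object cannot be found.
    """
    results: dict[str, str] = {}
    for key, expected in recorded.items():
        digest = hashlib.sha256()
        try:
            for chunk in fetch(key):
                digest.update(chunk)
        except KeyError:
            results[key] = "missing"
            continue
        results[key] = "ok" if digest.hexdigest() == expected else "mismatch"
    return results


# Hypothetical store with one healthy, one corrupted and one lost object.
store = {"A-1001.mxf": b"essence", "A-1002.mxf": b"essencX"}
recorded = {
    "A-1001.mxf": hashlib.sha256(b"essence").hexdigest(),
    "A-1002.mxf": hashlib.sha256(b"essence").hexdigest(),
    "A-1003.mxf": hashlib.sha256(b"essence").hexdigest(),
}
print(audit(recorded, lambda key: [store[key]]))
# {'A-1001.mxf': 'ok', 'A-1002.mxf': 'mismatch', 'A-1003.mxf': 'missing'}
```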

The ISM also helps mitigate the inevitable technology, business, service-level and pricing changes by maintaining multiple copies of content in multiple locations. This gives customers the critical ability to avoid vendor lock-in.
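Keeping multiple copies in multiple locations can be expressed as a policy check an ISM might run periodically. This sketch assumes a simple policy of at least two copies held by at least two different providers; the thresholds, provider names and locations are hypothetical.

```python
from __future__ import annotations


def policy_violations(copies: dict[str, list[tuple[str, str]]],
                      min_copies: int = 2, min_providers: int = 2) -> list[str]:
    """Assets with too few distinct copies, or whose copies sit with too few
    providers. `copies` maps asset_id -> [(provider, location), ...]."""
    bad = []
    for asset_id, placements in copies.items():
        distinct = set(placements)  # ignore duplicate records of one copy
        providers = {provider for provider, _ in distinct}
        if len(distinct) < min_copies or len(providers) < min_providers:
            bad.append(asset_id)
    return sorted(bad)


copies = {
    "A-1001": [("cloud-a", "us-east"), ("on-prem", "lto-vault")],
    "A-1002": [("cloud-a", "us-east"), ("cloud-a", "eu-west")],  # one vendor only
    "A-1003": [("cloud-b", "archive-tier")],
}
print(policy_violations(copies))  # ['A-1002', 'A-1003']
```

Counting providers, not just copies, is what addresses vendor lock-in: A-1002 has two copies, but both depend on the same vendor's terms and pricing.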

TURNING A CHORE INTO AN OPPORTUNITY

Moving entire or even partial repositories of content is often seen as a pain point, but a content migration is the perfect opportunity to increase the value and discoverability of your assets.

Here are some examples:

- Derive file hashes;

- Wrap edit projects and DPX sequences to increase speed and reduce egress costs;

- Create descriptive metadata;

- Transcode/re-wrap assets for normalization, new versions, or DR;

- Create copies in disparate locations;

- Generate proxies;

- Generate a time-aligned speech-to-text (STT) transcript; and

- Add further layers of time-aligned metadata: text/logo/facial/location/sentiment recognition, scene/event detection and motion analysis.
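Two of the items above, deriving file hashes and wrapping DPX sequences, combine naturally: packing per-frame files into a single object cuts the number of object-store requests, and hashing each frame on the way in gives a fixity baseline. A minimal sketch using Python's standard `tarfile`; tar as the wrapper and the JSON manifest layout are assumptions for illustration, not the format any particular ISM uses.

```python
from __future__ import annotations

import hashlib
import io
import json
import tarfile
import tempfile
from pathlib import Path


def wrap_sequence(frames_dir: Path, out_tar: Path) -> dict[str, str]:
    """Pack every .dpx frame in frames_dir into one uncompressed tar object,
    with a SHA-256 manifest of the individual frames stored alongside them."""
    manifest: dict[str, str] = {}
    with tarfile.open(str(out_tar), "w") as tar:  # "w" = uncompressed tar
        for frame in sorted(frames_dir.glob("*.dpx")):
            # Each frame is read whole; acceptable for DPX-sized files.
            manifest[frame.name] = hashlib.sha256(frame.read_bytes()).hexdigest()
            tar.add(str(frame), arcname=f"{frames_dir.name}/{frame.name}")
        payload = json.dumps(manifest, indent=2, sort_keys=True).encode()
        info = tarfile.TarInfo(name=f"{frames_dir.name}/manifest.json")
        info.size = len(payload)
        tar.addfile(info, io.BytesIO(payload))
    return manifest


if __name__ == "__main__":
    # Demo with three tiny fake frames; real DPX frames are far larger.
    with tempfile.TemporaryDirectory() as tmp:
        seq = Path(tmp) / "reel01"
        seq.mkdir()
        for n in range(1, 4):
            (seq / f"reel01.{n:07d}.dpx").write_bytes(bytes([n]) * 16)
        wrap_sequence(seq, Path(tmp) / "reel01.tar")
        with tarfile.open(str(Path(tmp) / "reel01.tar")) as tar:
            print(tar.getnames())
```

Storing the manifest inside the same object means the per-frame hashes travel with the sequence, so individual frames can still be verified after the tar is restored from the cloud.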

CONCLUSIONS

Migrations of large media repositories are significant undertakings, but if you plan properly, take the time to evaluate existing resources and make sure that you have the relevant tools and management system, migrating from LTO to cloud can be smooth, disruption-free and a pathway to truly optimized content management.

The full version of this white paper is available at https://masstech.com/controlled-ascent-white-paper/.

Mike Palmer is the former CTO for Masstech. He is now AVP of Media Management at Sinclair Broadcast Group, having joined Sinclair’s Advanced Technology group in 2021. One of his key roles is selecting and integrating new media management technologies for Sinclair’s diverse business units.