Data buffering and bit rate control

Digital content is delivered to a conversion device primarily in two ways. One way is in real time, as uncompressed audio and video using SDI and AES. But as facilities and media transport evolve toward file transfers over data networks, less content is distributed as uncompressed audio and video.

The other method is by using MPEG, DV or other compression formats to create content files. These files are divided into packets that can be distributed and routed over IT networks.

Real-time methods such as SDI and AES transfer data rigidly at an uninterrupted, constant rate. Compressed content delivered as packets over an IT media network travels in bursts that depend on the network topology, routing protocols and the number of other packets on the network.

Smoothing out data bursts

For a conversion device to operate in real time, a defined amount of data must be transferred over a given time period. Buffering smooths out the bursty data delivered over the network. This requirement falls between SDI synchronous data transfers and asynchronous network packet transfers. For media over IT networks, the required relationship between data and time is called isochronous. Isochronous means that a defined amount of data arrives in a given time period, within specified maximum and minimum burst sizes. This specification enables the design of buffers with adequate memory allocation.

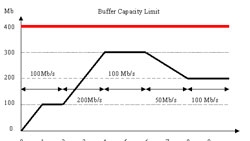

A smoothing buffer can accept bursts of packets, store them and enable access at a predictable data rate by conversion devices. Consider the scenario where a device requires a constant data rate of 100Mb/s and data can burst at 200Mb/s and 50Mb/s. As shown in Figure 1, data is initially written into a buffer at 100Mb/s for two seconds. Data read out is delayed until after one second, when the buffer holds 100Mb of data. Next, two seconds of 200Mb/s data is fed into the buffer, and at the four-second mark, 300Mb will be in the buffer (during those two seconds, 400Mb have been written into the buffer, while 200Mb have been read out; add the 100Mb already held, and 300Mb are left in the buffer). The variable data rates are well behaved and can be accommodated as shown.
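The arithmetic in this scenario can be checked with a few lines of Python. This is only a minimal sketch: the function name `buffer_occupancy`, its parameters and the one-second time step are assumptions made for illustration, and the arrival pattern is the one described in the text (two seconds at 100Mb/s, then two seconds at 200Mb/s).

```python
def buffer_occupancy(arrival_mbps, read_mbps=100, read_start_s=1):
    """Return the buffer fill (in Mb) at the end of each one-second step.

    arrival_mbps: data written during each second (hypothetical input format).
    read_start_s: number of seconds before read out begins.
    """
    fill = 0
    history = []
    for second, arriving in enumerate(arrival_mbps):
        fill += arriving              # data written during this second
        if second >= read_start_s:    # read out starts after the start-up delay
            fill -= read_mbps
        history.append(fill)
    return history


# Two seconds at 100Mb/s followed by two seconds at 200Mb/s
levels = buffer_occupancy([100, 100, 200, 200])
print(levels)  # [100, 100, 200, 300]
```

Each entry is the fill at the end of that second, so the last value is the 300Mb left in the buffer once the 200Mb/s burst has been absorbed.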

If too much data arrives in a given time period, the buffer will overflow and packets will be lost. Figure 2 illustrates this situation. The buffer can hold 400Mb of unread (or unprocessed) data. As in Figure 1, data arrives at 100Mb/s for the first two seconds. After the first second, data read out begins. The data rate now increases to 200Mb/s, and after three more seconds, the buffer is full. During the next second, half of the data that arrives at 200Mb/s is stored and read out, and the other half is lost. When the data rate then falls to 50Mb/s while read out continues at 100Mb/s, the buffer drains by 50Mb each second. After two seconds, in this example, the data rate returns to 100Mb/s and the buffer stabilizes with 300Mb of its capacity used.
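The overflow case can be sketched the same way by adding a capacity limit and counting what is discarded. The helper `simulate_with_capacity` and its one-second steps are illustrative assumptions; the 400Mb capacity and the arrival rates come from the description above.

```python
def simulate_with_capacity(arrival_mbps, capacity_mb=400, read_mbps=100, read_start_s=1):
    """Step a bounded buffer one second at a time.

    Returns (trace, lost_total), where trace holds one
    (second, fill_mb, lost_mb) tuple per step.
    """
    fill = 0
    lost_total = 0
    trace = []
    for second, arriving in enumerate(arrival_mbps):
        wanted = read_mbps if second >= read_start_s else 0
        reading = min(wanted, fill + arriving)   # cannot read more than is available
        fill = fill + arriving - reading
        lost = max(0, fill - capacity_mb)        # anything above capacity is dropped
        fill -= lost
        lost_total += lost
        trace.append((second + 1, fill, lost))
    return trace, lost_total


# 2 s at 100Mb/s, 4 s at 200Mb/s, 2 s at 50Mb/s, then back to 100Mb/s
trace, lost_total = simulate_with_capacity([100, 100, 200, 200, 200, 200, 50, 50, 100])
for second, fill, lost in trace:
    print(f"t={second}s fill={fill}Mb lost={lost}Mb")
print("total lost:", lost_total, "Mb")  # total lost: 100 Mb
```

In this sketch the buffer reaches 400Mb at the five-second mark, drops 100Mb during the sixth second, and settles at 300Mb once the arrival rate returns to 100Mb/s.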

Figure 3 follows the same pattern for the initial three seconds as described in Figures 1 and 2. After three seconds, the buffer has 200Mb of data stored. Data now arrives at 50Mb/s, and after four seconds of reading out data at 100Mb/s, the buffer is empty. As data continues to arrive at 50Mb/s, the buffer cannot supply enough data to support a 100Mb/s read out.
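The time to underflow follows directly from the stored data and the gap between the read and arrival rates. A small sketch, with the function name `seconds_until_underflow` chosen here purely for illustration:

```python
def seconds_until_underflow(stored_mb, arrival_mbps, read_mbps):
    """Return how long a buffer can sustain read out before it runs empty.

    Returns None when data arrives at least as fast as it is read,
    because the buffer then never drains.
    """
    if arrival_mbps >= read_mbps:
        return None
    return stored_mb / (read_mbps - arrival_mbps)


# 200Mb stored, 50Mb/s arriving, 100Mb/s read out
print(seconds_until_underflow(200, 50, 100))  # 4.0
```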

In the underflow condition, a processing device will be starved of data, which makes real-time processing impossible. Conversely, in the overflow condition, more data arrives than the buffer can hold for the processing device. Real-time processing will continue, but with lowered quality, because data will be lost.

With respect to real-time HD video, network packet latency can reach 50ms or more, the equivalent of at least three 60Hz HD video frames. Conversion device buffers must therefore be able to hold at least the equivalent of three frames of video to operate properly.
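The frame count is a simple conversion from latency to frame periods, rounded up. The sketch below uses hypothetical helper names, and the 100Mb/s stream rate in the second call is an assumption borrowed from the earlier buffer example, not a figure for any particular HD format.

```python
def frames_to_buffer(latency_ms, frame_rate_hz):
    """Whole frames that elapse during the given latency, rounded up."""
    return (latency_ms * frame_rate_hz + 999) // 1000   # integer ceiling


def bits_to_buffer_mb(latency_ms, stream_rate_mbps):
    """Megabits that arrive at a constant stream rate during the latency."""
    return stream_rate_mbps * latency_ms / 1000


print(frames_to_buffer(50, 60))    # 3
print(bits_to_buffer_mb(50, 100))  # 5.0
```

Rounding up matters in practice: a latency of 51ms at 60Hz already calls for four frames of buffering.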

Random, constant and deterministic

Data transfers can be broken down into three categories. The first is synchronous, where data is continuous at a given, uninterrupted rate over time. SDI is a synchronous digital signal.

Asynchronous data transfer techniques can be illustrated by Internet packet delivery that has no fixed relationship to time. A download can be virtually instant, or can crawl at peak times. The packet transfers, and subsequently the number of data bits, have no fixed relationship to a constant duration of time. This is bad for both real-time delivery and media presentation.

A technique that delivers a defined quantity of bursty data in a given time duration is called isochronous. In this way, a device can expect a certain amount of data and needs only to be able to buffer within specified maximum and minimum amounts.
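One way to picture the isochronous constraint is as a check on each time window: the bursts inside it must add up to the defined amount, and each burst must stay within the specified limits. The function `is_isochronous`, its parameters and the sample numbers are all assumptions made for illustration.

```python
def is_isochronous(bursts_per_window, expected_mb, min_burst_mb, max_burst_mb):
    """Check a delivery pattern against an isochronous specification.

    bursts_per_window: list of windows, each a list of burst sizes in Mb.
    Every window must deliver exactly expected_mb, and every burst must
    lie between min_burst_mb and max_burst_mb.
    """
    for bursts in bursts_per_window:
        if sum(bursts) != expected_mb:
            return False    # wrong amount of data in this window
        if any(b < min_burst_mb or b > max_burst_mb for b in bursts):
            return False    # a burst falls outside the specified bounds
    return True


# Three one-second windows, each delivering 100Mb in differently sized bursts
print(is_isochronous([[40, 60], [100], [25, 25, 50]], 100, 25, 100))  # True

# Second window delivers 150Mb in a single burst
print(is_isochronous([[40, 60], [150]], 100, 25, 100))                # False
```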

Design considerations

Buffers are used in a wide variety of devices found in a converged technology broadcast infrastructure.

Network routers and switches buffer packets, but even a non-blocking device will drop packets if the input buffer overflows.

All computers extensively use buffering. Buffers are located between disk storage and CPUs; CPU caches are buffers as well.

Transcoding devices have input and output buffers. The input buffer smooths data and feeds the conversion hardware the required amount. But buffering is also necessary on the device output, because data processing will not occur in a constant, repeatable fashion. The conversion engine will produce bits sporadically, so a smoothing buffer is used to output a constant, uninterrupted real-time data stream.

Buffer design is a challenging task that must be considered in overall system design. Because buffer size cannot be infinite, data transfer rates must be carefully engineered, which explains the use of isochronous methodologies. For example, MPEG-2 systems specify a target decoder and buffer sizes for audio, video and data. This places a constraint on data delivery that must be adhered to for a device to operate without producing artifacts.
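As a rough illustration of the sizing problem, the peak occupancy for a known arrival pattern gives the smallest buffer that avoids overflow. This sketch is not the MPEG-2 target decoder model; `peak_fill_mb`, its one-second steps and the sample arrival pattern are assumptions chosen to match the earlier 100Mb/s examples.

```python
from itertools import accumulate


def peak_fill_mb(arrival_mbps, read_mbps=100, read_start_s=1):
    """Smallest buffer (in Mb) that holds the given arrival pattern without loss.

    Raises ValueError if the pattern would starve the reader, since no
    buffer size alone can fix an underflow.
    """
    # Net change in fill for each second: data in minus data out
    net = [a - (read_mbps if s >= read_start_s else 0)
           for s, a in enumerate(arrival_mbps)]
    fills = list(accumulate(net))
    if min(fills) < 0:
        raise ValueError("buffer would underflow; lengthen the start-up delay")
    return max(fills)


# 2 s at 100Mb/s followed by 4 s at 200Mb/s
print(peak_fill_mb([100, 100, 200, 200, 200, 200]))  # 500
```

For this pattern the peak is 500Mb, so a 400Mb buffer would lose 100Mb unless the arrival rate is constrained.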

Seamless MPEG-2 transport stream splicing is an application where buffer management is critical during fade-to-black transitions. Transport stream splicing has become particularly important with the development and implementation of digital program insertion (DPI).

With an understanding of how data is fed to conversion devices, the next Transition to Digital will address transcoding, transrating and format conversion during parallel production in support of multichannel distribution.