File-Based Loudness Processors

File-based loudness processing, as one might expect, operates on audio files, either standalone or extracted from an audio/video file such as MXF. The strength of a file-based processor is that it can gain knowledge of an entire audio file (or segment), from start to finish, before doing any processing. Because of this, file-based processing can potentially be less intrusive than its real-time counterparts.

Typically, file-based processing happens in multiple passes. First, the audio signal is analyzed. Then, based on preset rules, the processor determines which functions or operations are needed and applies them. Depending on the adjustments needed, further analysis and processing steps may occur iteratively to arrive at the final result. While this may sound time-consuming, typical processing times are faster than real time. What kinds of measurements and processing are typical of file-based loudness processors?
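The "analyze, then decide based on preset rules" step can be pictured as a small rule table that maps measurement results to processing operations. The sketch below is purely illustrative: the `Preset` fields and their numbers are hypothetical house rules, not values from any product or standard, and the measurements are assumed to come from a separate BS.1770 meter.

```python
from dataclasses import dataclass


@dataclass
class Measurements:
    """Results of the analysis pass (assumed to come from a BS.1770 meter)."""
    integrated_lkfs: float
    true_peak_dbtp: float
    loudness_range_lu: float


@dataclass
class Preset:
    """Hypothetical house rules; the numbers are illustrative only."""
    target_lkfs: float = -24.0
    tolerance_lu: float = 0.5
    max_true_peak_dbtp: float = -2.0
    max_loudness_range_lu: float = 15.0


def decide_operations(m: Measurements, p: Preset) -> list[str]:
    """Map analysis results to the processing steps the preset calls for."""
    ops: list[str] = []
    gain_db = p.target_lkfs - m.integrated_lkfs
    applied_gain_db = 0.0
    if abs(gain_db) > p.tolerance_lu:
        applied_gain_db = gain_db
        ops.append(f"apply gain {gain_db:+.1f} dB")
    # A static gain shifts the true peak by the same number of dB,
    # so predict the post-gain peak before deciding on limiting.
    if m.true_peak_dbtp + applied_gain_db > p.max_true_peak_dbtp:
        ops.append("true-peak limit")
    if m.loudness_range_lu > p.max_loudness_range_lu:
        ops.append("dynamic range control")
    return ops


print(decide_operations(Measurements(-30.0, -8.0, 20.0), Preset()))
# ['apply gain +6.0 dB', 'dynamic range control']
```

A real processor would express such rules in its own workflow configuration; the point here is only that the decision is made after the whole file has been measured.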

DEFINED LOUDNESS TARGET

The first is fairly obvious: loudness. Adjusting the audio signal to reach a defined loudness target is relatively straightforward for file-based loudness processors. Unlike a real-time processor, which effectively rides gain throughout the program, a file-based processor applies either attenuation or gain, if needed, to reach the target loudness. This is done after measuring the file's loudness per ITU recommendation ITU-R BS.1770-3, “Algorithms to measure audio programme loudness and true-peak audio level.”

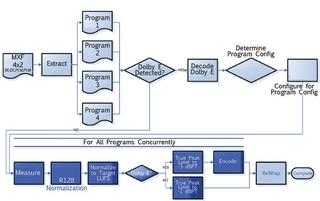

Fig. 1: Example of a file-based workflow that includes loudness measurements and adjustments, and true peak limiting for the AudioTools family of products (Courtesy of Minnetonka Audio Software, Inc.)

This single gain shift (scaling factor) doesn’t change the dynamic range. “It’s like adjusting the gain control on a fader and leaving it alone for all of the content,” said Bob Nicholas, director of international business development for Cobalt Digital in Urbana, Ill.
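The arithmetic behind a single gain shift is simple: the offset in dB is the target minus the measured loudness, and every sample is multiplied by the same factor 10^(gain/20). Because peaks and RMS scale together, the peak-to-RMS ratio (used here as a rough stand-in for dynamic range) does not change. This sketch assumes the integrated loudness has already been measured; the -31 LKFS reading and the sample values are hypothetical.

```python
import math


def gain_to_target(measured_lkfs: float, target_lkfs: float) -> float:
    """Single gain offset in dB that moves the integrated loudness onto the target."""
    return target_lkfs - measured_lkfs


def apply_gain(samples: list[float], gain_db: float) -> list[float]:
    """Multiply every sample by one scaling factor derived from the dB offset."""
    factor = 10 ** (gain_db / 20)
    return [s * factor for s in samples]


def crest_factor_db(samples: list[float]) -> float:
    """Peak-to-RMS ratio in dB, a rough proxy for how dynamic a signal is."""
    peak = max(abs(s) for s in samples)
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 20 * math.log10(peak / rms)


# Hypothetical measurement: the file reads -31 LKFS and the target is -24 LKFS.
gain_db = gain_to_target(-31.0, -24.0)
audio = [0.05, -0.2, 0.4, -0.1, 0.02, 0.3]  # stand-in sample values
louder = apply_gain(audio, gain_db)
print(gain_db, math.isclose(crest_factor_db(audio), crest_factor_db(louder)))
# 7.0 True
```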

In addition to loudness, ITU-R BS.1770-3 describes how to measure another parameter, true peak level. Both file-based and real-time processors can provide this measurement. According to ITU-R BS.1770-3, “true-peak level is the maximum (positive or negative) value of the signal waveform in the continuous time domain; this value may be higher than the largest sample value in the 48 kHz time-sampled domain.”

If only the sampled peak value were used, problems such as inconsistent peak readings, unexpected overloads, and under-reading and beating of metered tones could occur. Again from ITU-R BS.1770-3: “The problem occurs because the actual peak values of a sampled signal usually occur between the samples rather than precisely at a sampling instant, and as such are not correctly registered by the peak-sample meter… [The] use of a true-peak indicating algorithm will allow accurate indication of the headroom between the peak level of a digital audio signal and the clipping level.”
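The sketch below shows why a sample-peak reading can under-read. BS.1770 specifies true-peak measurement by oversampling with a particular interpolation filter; here, as an assumption, a generic Hann-windowed sinc interpolator at 4x oversampling stands in for that filter, so the numbers illustrate the idea rather than reproduce a compliant meter. The test tone is at one quarter of the sample rate and phased so that every sample lands at ±0.707 while the waveform itself reaches ±1.0 halfway between samples.

```python
import math


def windowed_sinc(x: float, half_width: int) -> float:
    """Hann-windowed sinc interpolation kernel, zero for |x| >= half_width."""
    if abs(x) >= half_width:
        return 0.0
    sinc = 1.0 if x == 0 else math.sin(math.pi * x) / (math.pi * x)
    window = 0.5 + 0.5 * math.cos(math.pi * x / half_width)
    return sinc * window


def sample_peak(samples: list[float]) -> float:
    """Largest absolute sample value (what a sample-peak meter reports)."""
    return max(abs(s) for s in samples)


def true_peak(samples: list[float], oversample: int = 4, half_width: int = 32) -> float:
    """Estimate the inter-sample peak by interpolating `oversample` points per sample."""
    n = len(samples)
    peak = 0.0
    for i in range(n):
        lo = max(0, i - half_width + 1)
        hi = min(n, i + half_width + 1)
        for phase in range(oversample):
            t = i + phase / oversample  # fractional sample position
            value = sum(samples[k] * windowed_sinc(t - k, half_width) for k in range(lo, hi))
            peak = max(peak, abs(value))
    return peak


def to_db(linear: float) -> float:
    return 20 * math.log10(linear)


# Quarter-sample-rate tone: samples sit at +/-0.707, the waveform peaks at
# +/-1.0 between samples. A Hann fade over the whole tone avoids edge effects.
N = 401
tone = [math.sin(math.pi * n / 2 + math.pi / 4) * (0.5 - 0.5 * math.cos(2 * math.pi * n / (N - 1)))
        for n in range(N)]
print(f"sample peak {to_db(sample_peak(tone)):.1f} dBFS, true peak {to_db(true_peak(tone)):.1f} dBTP")
```

The sample-peak reading comes out about 3 dB below the interpolated (true) peak, which is exactly the headroom error the BS.1770 text warns about.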

DYNAMIC RANGE CONTROL

File-based processors can be used for other related functions as well. One example is dynamic range control, as with the AERO.file option for RadiantGrid or the Wohler loudness appliance solution. Another is measuring and controlling maximum short-term loudness, maximum momentary loudness, loudness range and dialog level, as with Minnetonka’s AudioTools family, which includes AudioTools Server, AudioTools FOCUS and AudioTools Loudness Control for Harmonic ProMedia Carbon.

Simply scaling an audio file so that it meets a loudness target may not be enough to stop viewer complaints if the dynamic range remains wider than their systems can handle, as Tim Carroll, Telos Alliance chief technology officer and Linear Acoustic founder, pointed out. That is why dynamic range control is added to loudness processors. A theatrical movie release, for example, may need its dynamic range narrowed to make it a better fit for TV, while a sitcom may need little, if any, dynamic range adjustment.
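Unlike a single gain shift, dynamic range control applies a level-dependent gain. A minimal sketch of the idea is a static downward-compression curve: below a threshold nothing changes, and above it each `ratio` dB of input rise yields only 1 dB of output rise. This is a generic textbook curve, not the algorithm used by AERO.file, AudioTools or any other product; the threshold, ratio and block levels are hypothetical, and real processors add attack/release smoothing and often raise quiet passages as well.

```python
def compressor_gain_db(level_db: float, threshold_db: float = -20.0, ratio: float = 3.0) -> float:
    """Static downward-compression curve: gain change in dB for an input level in dB."""
    if level_db <= threshold_db:
        return 0.0
    overshoot = level_db - threshold_db
    # Keep only 1/ratio of the overshoot; the rest becomes gain reduction.
    return -(overshoot - overshoot / ratio)


def level_spread(levels_db: list[float]) -> float:
    """Difference between the loudest and quietest block, in dB."""
    return max(levels_db) - min(levels_db)


# Hypothetical short-term levels for a quiet scene, dialog and an explosion.
blocks = [-40.0, -25.0, -10.0]
compressed = [lvl + compressor_gain_db(lvl) for lvl in blocks]
print(level_spread(blocks), round(level_spread(compressed), 2))
# 30.0 23.33
```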

File-based processors can make a variety of measurements to determine how to adjust the final output to reach a predefined target. This is often an iterative process, because one adjustment may affect another parameter; for example, true peak limiting applied after a gain increase can slightly lower the measured loudness. Fortunately, iterative processes are well suited to software-based products. If the target isn’t reached after a certain number of attempts, the processor can send out a notification for human intervention.
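The measure, adjust, re-measure loop with an escalation path might be sketched as follows. To keep it self-contained, two loudly labeled stand-ins replace the real components: plain RMS in dBFS instead of K-weighted BS.1770 loudness, and hard clipping instead of a true-peak limiter. The ceiling, tolerance and pass limit are hypothetical parameters, and raising an exception stands in for whatever notification mechanism a real system uses.

```python
import math


def rms_db(samples: list[float]) -> float:
    """Stand-in loudness measure: plain RMS in dBFS, NOT K-weighted BS.1770 loudness."""
    return 10 * math.log10(sum(s * s for s in samples) / len(samples))


def apply_gain(samples: list[float], gain_db: float) -> list[float]:
    factor = 10 ** (gain_db / 20)
    return [s * factor for s in samples]


def hard_limit(samples: list[float], ceiling: float) -> list[float]:
    """Crude stand-in for a true-peak limiter: clip anything beyond +/-ceiling."""
    return [max(-ceiling, min(ceiling, s)) for s in samples]


def normalize_iteratively(samples, target_db, ceiling=0.89, tolerance_db=0.5, max_passes=5):
    """Gain, limit and re-measure until within tolerance; otherwise escalate."""
    processed = list(samples)
    for attempt in range(1, max_passes + 1):
        # Limiting after the gain step can pull the level back down,
        # which is why one pass is not always enough.
        processed = hard_limit(apply_gain(processed, target_db - rms_db(processed)), ceiling)
        if abs(target_db - rms_db(processed)) <= tolerance_db:
            return processed, attempt
    raise RuntimeError(f"target {target_db} dB not reached after {max_passes} passes; "
                       "flag for human intervention")


sine = [0.1 * math.sin(2 * math.pi * n / 48) for n in range(4800)]
out, passes = normalize_iteratively(sine, -20.0, ceiling=0.5)
print(passes, round(rms_db(out), 2))
```

Asking the same function for -5 dB with a 0.5 ceiling can never succeed (a signal clipped at 0.5 cannot exceed about -6 dB RMS), so it raises instead of looping forever.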

File-based loudness processing is typically just one part of an overall file-based ingest or quality control workflow that can include audio upmixing or downmixing, Dolby E decoding and encoding, metadata adjustment, channel assignment detection and conforming, watermarking, pitch and time control, sample rate conversion, channel management (muting, copying, reconfiguring, replacing), and third-party functions and integration, according to Oliver Masciarotte, director of customer experience at Minnetonka Audio Software.

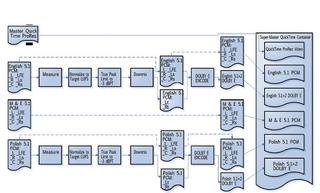

Fig. 2: Example of a more complex file-based workflow that includes multiple languages, program correlation and channel order verification, downmixing, loudness measurement, processing and peak limiting for the AudioTools family of products (Courtesy of Minnetonka Audio Software, Inc.)

As an example, the AudioTools family can integrate with Telestream Vantage and Harmonic ProMedia Carbon. File-based processing is also amenable to complex automation, according to Masciarotte.

The audio functions can run alongside video file-based functions such as transcoding, aspect ratio conversion, standards conversion or quality control.

BRANCHING AND ITERATIVE WORKFLOW

Branching and iteration are keys to efficient and flexible workflows in file-based processors. Let’s look at a couple of workflow block diagrams for AudioTools to see how this works.

Referring to Fig. 1, start at the left side of the block diagram, where the audio essence is extracted from an MXF file. The audio is then checked for the presence of Dolby E. If Dolby E is detected, then the channels are first decoded to PCM before being sent to the measurement and loudness normalization stages. According to Masciarotte, loudness measurement and control, for the most part, needs to happen with PCM (pulse-code modulated) digital audio signals.

While not shown in Fig. 1, the workflow could be set up with another branch to check if the loudness meets the required target. If “yes,” the signal would continue on to the next stage, but if not, the audio channels would be subjected to further loudness level adjustments and measurements.

After the loudness adjustment stages, the workflow checks whether the signal needs to be encoded to Dolby E. Depending on the answer, a different true peak limiting setup is applied. If encoding is required, it happens after the true peak limiter, and the signal is then re-wrapped into the MXF file.
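The branching in the Fig. 1 workflow can be summarized as a function that plans the ordered steps for one file. This is only a sketch of the control flow described above: the `Job` flags and the step labels (including the two limiter "profiles") are hypothetical names, and no actual decoding, measurement or encoding is performed.

```python
from dataclasses import dataclass


@dataclass
class Job:
    """Hypothetical per-file flags; a real system would set these from detection and job settings."""
    has_dolby_e: bool      # outcome of the Dolby E detection branch
    encode_dolby_e: bool   # whether the delivery spec calls for Dolby E


def plan_workflow(job: Job) -> list[str]:
    """Return the ordered processing steps for one MXF file, following the branches in the text."""
    steps = ["extract audio essence from MXF"]
    if job.has_dolby_e:
        steps.append("decode Dolby E to PCM")  # loudness work is done on PCM
    steps += ["measure loudness (BS.1770)", "normalize loudness"]
    if job.encode_dolby_e:
        # The limiter runs first, then the encoder.
        steps += ["true-peak limit (Dolby E encode profile)", "encode Dolby E"]
    else:
        steps.append("true-peak limit (PCM delivery profile)")
    steps.append("re-wrap audio into MXF")
    return steps


for step in plan_workflow(Job(has_dolby_e=True, encode_dolby_e=True)):
    print(step)
```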

Fig. 2 shows a more complex workflow that includes multiple languages, program correlation, channel order verification, and downmixing, in addition to loudness measurement and processing and true peak limiting.
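Downmixing is one of the steps in the Fig. 2 workflow. As an illustration, the sketch below folds one 5.1 sample frame down to stereo using the commonly cited ITU-R BS.775 Lo/Ro coefficients (center and surrounds at -3 dB, LFE dropped). Which coefficients a given facility or product uses is an assumption here. Channels are addressed by name rather than position because channel order varies between deliverables, which is one reason workflows like this include channel order verification.

```python
import math

# Commonly cited BS.775 Lo/Ro coefficient: -3 dB, i.e. 1/sqrt(2).
MINUS_3_DB = 1 / math.sqrt(2)


def downmix_5_1_to_lo_ro(frame: dict[str, float]) -> tuple[float, float]:
    """Fold one 5.1 sample frame (keys L, R, C, LFE, Ls, Rs) down to stereo.

    The LFE channel is discarded. No gain compensation is applied, so the
    result can exceed full scale and would normally be followed by loudness
    measurement and true peak limiting.
    """
    lo = frame["L"] + MINUS_3_DB * frame["C"] + MINUS_3_DB * frame["Ls"]
    ro = frame["R"] + MINUS_3_DB * frame["C"] + MINUS_3_DB * frame["Rs"]
    return lo, ro


print(downmix_5_1_to_lo_ro({"L": 0.5, "R": 0.0, "C": 0.2, "LFE": 0.9, "Ls": 0.0, "Rs": 0.1}))
```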

With software-based systems, workflows such as these are defined according to each customer’s specific requirements. According to Masciarotte, these types of file-based systems can be modular to some extent, scalable, and easily interoperable across WANs, MANs, LANs and SANs. The file to be processed can be local or remote depending on the system.

Some IT knowledge is typically needed to set these up and to ensure a good user experience.

Mary C. Gruszka is a systems design engineer, project manager, consultant and writer based in the New York metro area. She can be reached via TV Technology.
