The Lip-Sync Problem That Won’t Go Away!

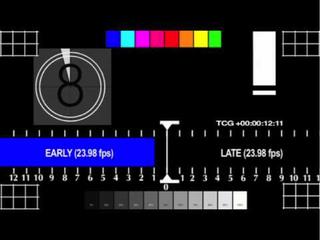

Example of a lip-sync test signal.

LOS ANGELES—So we’ve all been there, watching a TV show and the actor’s lips are moving but the words are just not coming out at the right time. Sometimes a channel change or re-boot can fix this, but sometimes not. Frustrated, you give up on that program and ask why isn’t anyone doing anything about lip-sync problems!

To begin, let’s go back in history. Before TV, there were the movies, which originally didn’t have ‘sound,’ but did have music accompaniment. When sound was introduced, it was recorded separately from the picture. To ensure that the sound matched the picture, the ‘clapper’ slate was developed to provide a ‘sync’ point. Still in practice today, the ‘clap’ provides a method to ensure the sound and picture are together in time.

However, when TV broadcasting started, there was no post production, and thus all programs were transmitted live, with the audio and video captured in real time and transmitted to the home without any significant path delays (some delay due to modulation and perhaps a part of a video line, but nothing significant).

Videotape recording with audio became available in the late ’50s. One of the many attributes of videotape recording is the ability to alter the video-to-audio timing. However, the videotape machine design goal was to keep audio and video in time, so analog videotape machines were designed for zero offset between audio and video.

Later, digital technologies were adopted by television. The first significant digital device that affected the A/V timing was the digital frame sync. It was designed to align an external video signal to a television plant, and no thought was given to delaying the audio. At first, the video delay was one to two frames, which means the audio led the video by about 33 to 66 ms. The ITU (BT.1359) has defined an acceptable range of audio-to-video offset of +50 ms to -120 ms (+ means audio leads video, - means audio lags video). Thus, a two-frame (66 ms) delay of video is detectable.
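The arithmetic above can be checked with a minimal sketch. It assumes a nominal 30 frames per second and uses the +50 ms / -120 ms limits quoted above; the function names are hypothetical and chosen only for illustration.

```python
# Illustrative only: frame-sync video delay expressed as an A/V offset.
# Sign convention follows the text: positive = audio leads video.

def video_delay_ms(frames, fps=30.0):
    """Delay in milliseconds introduced by holding `frames` video frames."""
    return frames * 1000.0 / fps

def classify_offset(offset_ms, lead_limit_ms=50.0, lag_limit_ms=-120.0):
    """Compare an A/V offset with the range quoted in the article."""
    if offset_ms > lead_limit_ms:
        return "audio leads too much"
    if offset_ms < lag_limit_ms:
        return "audio lags too much"
    return "within range"

if __name__ == "__main__":
    for frames in (1, 2):
        offset = video_delay_ms(frames)  # undelayed audio leads by this much
        print(frames, round(offset, 1), classify_offset(offset))
```

With undelayed audio, one frame of video delay stays inside the quoted range, while two frames push the audio lead past the +50 ms limit.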

DELAYING AUDIO

The first solution was to add fixed audio delays to match the video delay (usually two-and-a-half frames of delay) so the audio would always lag video, which is more tolerable to the viewer. This situation was satisfactory until additional digital devices came into use in television production, such as Digital Video Effects (DVE). Some DVEs have four or more frames of video delay, which can definitely cause objectionable A/V sync problems. More troubling still was what happened when the effect was inserted or removed, which caused the delay to jump in and out.

This was very evident with live interviews, where the interviewee would be taken either full frame (no effect) or in a picture-in-picture box (effect). Some productions would just add a fixed audio delay, but then had to provide un-delayed audio for the return IFB channel to the interviewee. In other cases, the audio mixer would switch between delayed and un-delayed audio depending on the state of the effect.

But these complications were ‘behind the scenes’ and did not affect the overall distribution of the program and delivery to the consumer. Consumer analog TVs did not have frame-based digital processing and thus no significant video delay.

In professional production, A/V sync was kept in line, more or less. Part of the reason for this success was that the delays were generally static, and manufacturers developed frame syncs with tracking audio delays that use audio re-sampling techniques to ensure zero A/V delay.

MPEG

Then MPEG happened! Digital compression works to reduce the data per frame required to represent a unique picture or sequence of pictures. This works fine for video because video is a discrete time signal, i.e., there are defined gaps in time between each successive video frame. These gaps provide the decoder with the ability to de-compress the original frame and then output the full picture sequence.

But audio is a continuous-time signal: there are no regular silent gaps and thus no way to catch up with the video. Audio is compressed separately from the video, and the MPEG encoder has to ensure the video data packets and the audio data packets are time aligned both inside the encoder and after the multiplexer (mux). The time alignment is ensured by the inclusion of timestamps called Presentation Time Stamps (PTS) as well as Decoding Time Stamps (DTS) within the bitstream. PTS and DTS are derived from a common System Time Clock (STC); they count in units of a 90 kHz clock, which is the 27 MHz system clock divided by 300.
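As a rough illustration of how these timestamps relate to lip sync, the sketch below converts 90 kHz ticks to milliseconds and compares how late each stream was presented relative to its timestamp. The 33-bit wrap handling reflects the width of the PTS field in MPEG-2 systems; the function names and the idea of logging a "presented at" clock value per stream are assumptions made for this example, not part of any decoder API.

```python
# Illustrative only: A/V offset from presentation timestamps in 90 kHz ticks.

PTS_CLOCK_HZ = 90_000
PTS_MODULUS = 1 << 33  # PTS/DTS are 33-bit counters and wrap around

def pts_diff(a, b):
    """Signed difference a - b in ticks, tolerant of counter wrap-around."""
    d = (a - b) % PTS_MODULUS
    if d >= PTS_MODULUS // 2:
        d -= PTS_MODULUS
    return d

def ticks_to_ms(ticks):
    return ticks * 1000.0 / PTS_CLOCK_HZ

def av_offset_ms(video_pts, video_presented_at, audio_pts, audio_presented_at):
    """Positive result = audio leads video (the video was presented later)."""
    video_late = pts_diff(video_presented_at, video_pts)
    audio_late = pts_diff(audio_presented_at, audio_pts)
    return ticks_to_ms(video_late - audio_late)

if __name__ == "__main__":
    # Video shown 3003 ticks (one 29.97 Hz frame) late, audio on time.
    print(round(av_offset_ms(900_000, 903_003, 900_000, 900_000), 2))
```

If both streams are presented exactly at their timestamps, the offset is zero regardless of how the packets were interleaved in the multiplex.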

Great! What could go wrong? Well, if the transmission is essentially error- and jitter-free, there are no problems. This is why DVD and Blu-ray don’t generally have lip-sync issues unless the disc is damaged. But in the real world of broadcasting, transmissions are not error free, and frequently some data is damaged or lost. The MPEG receiver uses transmission buffers that absorb these errors, recover the STC and lock to the local sample clock.

However, the receiver has to compensate for loss in the final decoding. In the case of video loss, the decoder either jumps ahead a frame or repeats a frame of video. The audio can’t jump forward or repeat audio samples to adjust to these timing changes without causing objectionable audio distortions or silence. If the audio data is damaged, the decoder typically will mute, and the video timing can be adjusted by dropping a frame. Over time, the video frame times and the audio frame times drift, causing some mismatch between the audio timing and video timing, typically within one video frame time (33 ms).

Ideally, when the A/V delay reaches detectable limits, the decoder should reset the buffers and start over, but most decoders don’t do this, as it may be considered more objectionable to interrupt the signal flow than to tolerate the lip-sync error. That’s why picking up the remote and changing the channel and back will many times fix the A/V sync problem. The only option left for the consumer device (TV, smartphone, set-top box, etc.) is to add or drop a video frame.
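The add-or-drop behavior described above can be sketched as a simple rule. This is a hypothetical decision policy assumed for illustration (correct whenever the error exceeds half a frame), not how any particular decoder is implemented; the names and the 30 fps frame time are likewise assumptions.

```python
# Illustrative only: keep A/V offset small by dropping or repeating frames.
# Sign convention: positive offset = audio leads video (video is late).

FRAME_MS = 1000.0 / 30.0

def choose_correction(offset_ms, frame_ms=FRAME_MS):
    """Drop a frame if video is late, repeat one if video is early."""
    if offset_ms > frame_ms / 2:
        return "drop"
    if offset_ms < -frame_ms / 2:
        return "repeat"
    return "none"

def simulate(drift_per_frame_ms, frames, frame_ms=FRAME_MS):
    """Apply a steady drift and record (action, offset after correction)."""
    offset = 0.0
    history = []
    for _ in range(frames):
        offset += drift_per_frame_ms
        action = choose_correction(offset, frame_ms)
        if action == "drop":
            offset -= frame_ms   # skipping a frame moves video forward
        elif action == "repeat":
            offset += frame_ms   # holding a frame moves video back
        history.append((action, offset))
    return history

if __name__ == "__main__":
    history = simulate(drift_per_frame_ms=1.0, frames=100)
    print(max(abs(offset) for _, offset in history))
```

Because the video can only be adjusted in whole-frame steps, the residual error never settles at zero; under this policy it stays within roughly half a frame either side.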

IT’S EVERYWHERE

The A/V problem related to compression is everywhere, even on the internet. While internet video uses a different timing synchronization mechanism (Real-time Transport Protocol, or RTP, timestamps), it has the same problem with corrupted data and with variation in packet delivery timing in the form of jitter.

The final lip-sync challenge that digital has introduced is the development of flat-panel displays. These displays have intrinsic delays due to the buffering of the video data into frame memory prior to the pixel readout. While this is typically just one or two frames of delay, the accumulated A/V delay can be perceivable and perhaps objectionable.

So what has the industry been doing to fix these digital compression issues? On the professional side, many techniques have been developed and put into practice. For off-line testing, test patterns have been developed that help line up audio and video timing (e.g. VALID). But what about in-service A/V timing measurement and correction?

In the mid ’90s, Tektronix developed a technique that inserted a watermark into the video image that represented a digital sample of the audio envelope. This technique worked fine with uncompressed or lightly compressed television signals, but was not reliable when heavy video compression was employed.

SMPTE published a standard last year (ST 2064-2015) for lip-sync measurement using video and audio signatures. Signatures are metadata elements derived from the image pixel data or audio envelope via an algorithm that ensures that the signature is unique to that image or audio envelope. The origin signatures are transported with the audio and video signals. At the receive end, new signatures are calculated from the received audio and video and compared with the origin signatures to determine the video and audio delays. The receive device can then make delay adjustments to achieve zero A/V delay.
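The comparison step can be illustrated with a toy example. This is not the ST 2064 algorithm: the signatures here are just short lists of made-up numbers, and the matching rule (smallest mean absolute difference over a range of candidate lags) is an assumption chosen for simplicity. All names and signature rates are hypothetical.

```python
# Illustrative only: estimate per-stream delay by aligning signature sequences.

def best_lag(origin, received, max_lag):
    """Lag (in signature samples) at which `received` best matches `origin`."""
    best, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        total, count = 0.0, 0
        for i, value in enumerate(origin):
            j = i + lag
            if 0 <= j < len(received):
                total += abs(value - received[j])
                count += 1
        if count:
            score = -total / count  # 0 is a perfect match
            if score > best_score:
                best, best_score = lag, score
    return best

def delay_ms(origin, received, rate_hz, max_lag):
    return best_lag(origin, received, max_lag) * 1000.0 / rate_hz

if __name__ == "__main__":
    video = [3, 1, 4, 1, 5, 9, 2, 6, 5, 3, 5, 8, 9, 7, 9, 3]   # one per frame
    audio = [0, 2, 7, 1, 8, 2, 8, 1, 8, 2, 8, 4, 5, 9, 0, 4, 5, 2, 3, 5]
    video_rx = [0, 0] + video[:-2]       # video arrives 2 frames late
    audio_rx = [0, 0, 0] + audio[:-3]    # audio arrives 3 samples late
    v = delay_ms(video, video_rx, rate_hz=30.0, max_lag=4)
    a = delay_ms(audio, audio_rx, rate_hz=100.0, max_lag=5)
    print(round(v - a, 2))  # positive = audio leads video
```

The difference between the two measured delays is the A/V offset that the receive device would need to remove, for example by adding audio delay when the video arrives later.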

This SMPTE method is available for professional equipment but has not been adopted for use in consumer products. The Consumer Technology Association (aka CEA) has a recommended practice (CEA CEB20) for consumer television products that addresses A/V synchronization problems and offers best-practice solutions. But in the end, the consumer product is dependent on the limitations of MPEG (and RTP) timing and synchronization techniques.

Most flat-panel displays today compensate for the video delay by delaying the audio decoding internally so that the built-in speaker audio is in time with the picture. The HDMI 1.3a specification includes the ability to allow consumer displays to declare their audio/video delay and thus compensate for display delays when using an external AV receiver.

So the good news is that the industry is engaged in fixing the problem. The bad news is that no one solution has been found that can solve every aspect of the lip-sync problem.

Jim DeFilippis is CEO of TMS Consulting, Inc., in Los Angeles. He can be reached at JimD@TechnologyMadeSimple.pro. See more at his author archive.

Jim DeFilippis is CEO of TMS Consulting, Inc. in Los Angeles, and former EVP Digital Television Technologies and Standards for FOX.