Pushing the Limits of 3D and 4K

HONOLULU—Getting ahead of the technology curve is tough enough under the best of circumstances, let alone 37,000 feet under the sea, in a submersible only 43 inches in diameter. That was the challenge faced by renowned explorer/producer James Cameron and his business partner/technical director Vince Pace, and by director John Bruno and DP Jules O’Loughlin, in producing “Deepsea Challenge 3D,” a feature documentary, made in collaboration with National Geographic TV, about Cameron’s historic dive to the bottom of the Mariana Trench in the western Pacific. A media technology pioneer as well, Cameron instructed his team to capture as much of the expedition as possible in native 3D and at the highest resolution feasible.

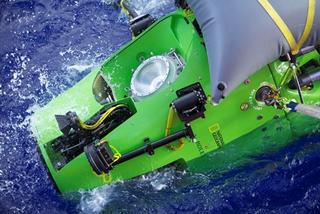

The DeepSea Challenger submersible on her first test in the ocean at Jervis Bay piloted by Jim Cameron. Photo by Mark Thiessen/National Geographic

‘HYBRID ULTRA HD’

It is worth noting that in 2012, when the expedition occurred, the practical options for capturing 4K were far fewer than today. In fact, the Red One and the Red Epic M were among the very few battle-tested 4K production cameras available. But the physical constraints of shooting in a range of environments meant the team couldn’t always use its Fusion 3D rigs, whether with Epics or any other camera, because of their bulk, weight (45-plus pounds) and vulnerability to ocean spray. Hence, they deployed a range of cameras of various sizes and resolutions, from 1080p to 5K, resulting in a hybrid ultra-HD production.

Since expedition planning began well before 2012, 4K cameras like Sony’s F65, F5 and F55 weren’t options for this project. Instead, Cameron’s crew used battle-tested Sony cameras like the P1 in two of their Fusion 3D rigs. For 4K and above, they started with Red Ones, then switched to the lighter, higher-resolution Red Epics when they became available. The Epic was also the only “full-sized” camera deployed inside the tiny 43-inch pilot sphere of the DeepSea Challenger, which was crammed with computers and electronics. In fact, space was at such a premium that the Epic had to be removed from the one-inch-thick acrylic viewport whenever Cameron wanted a “live view” of the ocean during the dive.

Inside the compact capsule, 3D GoPro Heroes captured Cameron’s every move. Outside the mini-sub, only one camera could withstand the 16,500 psi pressure: an ultra-compact 3D “mini-cam,” designed for the dive by engineer Blake Henry, imaging specialist Adam Gobi and Cameron Pace Group. The mini-cams were built around 10 MP, 1/2.3-inch Aptina CMOS 1080p sensors and were stuffed into an ultra-compact, thumb-like housing to minimize the odds of imploding under the bone-crushing pressure.

“We protected some of the electronics gear in the sphere with oil, which is denser than air, to reduce the ‘compressible volume,’” Pace said. “With visual sensors, you have to wrap some air around them, but not too much, though. Larger 4K sensors and a bulkier camera body would have been at greater risk of implosion.”
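As a rough sanity check on the pressure figure quoted above, hydrostatic pressure can be estimated as surface pressure plus density times gravity times depth. The sketch below is not the team’s engineering calculation; it assumes a constant near-surface seawater density (real seawater compresses with depth) and uses the 11,000-meter depth given in the photo caption. The function name and constants are illustrative.

```python
# Rough hydrostatic pressure estimate: P = P_atm + rho * g * h.
# All constants are textbook approximations, not values from the expedition.
SEAWATER_DENSITY = 1025.0   # kg/m^3, near-surface value (assumed constant)
GRAVITY = 9.81              # m/s^2
ATMOSPHERE_PA = 101_325.0   # surface air pressure in pascals
PA_PER_PSI = 6894.757       # pascals per pound-force per square inch


def pressure_psi(depth_m: float) -> float:
    """Approximate absolute pressure in psi at a given ocean depth in meters."""
    pascals = ATMOSPHERE_PA + SEAWATER_DENSITY * GRAVITY * depth_m
    return pascals / PA_PER_PSI


if __name__ == "__main__":
    # 11,000 m is the dive depth cited in the photo caption.
    print(f"{pressure_psi(11_000):,.0f} psi")
```

Under these assumptions the estimate comes out at roughly 16,000 psi, the same order as the 16,500 psi cited for the mini-cam housings.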

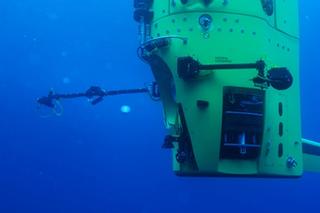

DeepSea Challenger is hoisted into the Pacific on an unmanned dive to 11,000 meters at Challenger Deep. Photo by Mark Thiessen/National Geographic

VIEW FROM THE ‘PILOT SPHERE’

Each of the mini-cams output a 10-bit 1080p/24 signal via 1.5G single-mode fiber and was controlled by Cameron from the “pilot sphere.” One was mounted on the sub’s “manipulator arm,” while the other was on a pan-tilt head attached to a 6-foot-plus carbon-fiber boom pole. The latter enabled Cameron to take “selfies” of the sub and provided a pair of outside eyes to assess the condition of critical external systems, like the manipulator arm and the lighting and ballast systems. Early in the descent, Cameron also controlled a 3D GoPro rig, mounted on an Israeli arm, from the viewport. Several 3D GoPro rigs were used at various stages of the dive.

One of the mini-cams on the hydraulic manipulator arm was mainly for wide shots, while the other, with a 90 mm macro lens, was used for close-ups of sea creatures such as anemones, sea cucumbers and jellyfish. It could also take 12 MP still photos and helped lead to the discovery of dozens of creatures new to science. Also critical to these discoveries, and to filming in the dark ocean deep, was the 7-foot bank of thirty 75W LED bricks, plus a 735W LED spotlight. Four Ty lights with high beams also helped provide nearly 100 feet of visibility.

Cameron operated the mini-cams intermittently throughout the 9-plus-hour dive, which took place on March 26, 2012. “They recorded select portions of the descent and ascent in 3D, in ProRes 4:2:2,” said director John Bruno. “The 3D video was fed to an array of 750 GB Samurai [Atomos] recorders. A single [2D] Epic, inside the sphere, captured underwater footage via the viewport in 5K, and also within the sphere, when flipped around.” Overall, Cameron captured nearly five hours of 5K Epic footage to four custom 512 GB SSDs during the dive, according to Bruno.
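The storage figures above imply a ceiling on the Epic’s average recording rate. The short sketch below works through that arithmetic. It assumes decimal gigabytes (1 GB = 1,000 MB), rounds “nearly five hours” up to five, and treats all four SSDs as completely filled, none of which the article confirms, so the result is an upper bound rather than the camera’s actual data rate. The function name is illustrative.

```python
# Back-of-the-envelope storage budget for the in-sphere Epic recordings.
SSD_COUNT = 4
SSD_CAPACITY_GB = 512     # per drive, from the article
FOOTAGE_HOURS = 5.0       # "nearly five hours", rounded up (assumption)


def max_average_rate_mb_s(total_gb: float, hours: float) -> float:
    """Highest average data rate (MB/s) that fits total_gb into the given hours."""
    return total_gb * 1000 / (hours * 3600)


total_gb = SSD_COUNT * SSD_CAPACITY_GB
rate = max_average_rate_mb_s(total_gb, FOOTAGE_HOURS)

if __name__ == "__main__":
    print(f"Total capacity: {total_gb} GB")
    print(f"Maximum average rate: {rate:.1f} MB/s")
```

With these inputs, the total capacity is 2,048 GB and the average rate could not have exceeded about 114 MB/s over the five hours.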

BE READY FOR THE UNEXPECTED

For underwater shots near the surface, and also topside, Bruno relied mainly on the Fusion 3D rigs with Red Epics or Sony P1s. He also instructed his 10-man production crew to always keep a camera at the ready to record anything interesting that might happen. “You can always tweak resolution, color, etc., in post, but only if you have it in the can,” he said.

Crews conducted extensive in-water testing in Australia of the DeepSea Challenger submersible. Photo by Mark Thiessen/National Geographic

Two Fusion 3D rigs were equipped with Epics for use in a range of “dry shooting” (i.e., out of the water) situations, including some that got pretty soggy. Salt spray was a chronic problem and required regular maintenance. “One drop of water on the mirror can ruin your 3D,” O’Loughlin said. “The mirror must be clean in each eye. If not, you have to stop to clean and dry it before continuing [to shoot]. Otherwise you may end up with unusable 3D and incur the expense of converting 2D footage from ‘the better eye’ to 3D.” He also filmed the DeepSea Challenger submersible underwater at the start and end of the dive, with Red Epics in a Fusion 3D rig in a custom CPG (underwater) housing.

When there just wasn’t enough time to get suited up with the Easy Rig support arm and one of the Fusion 3D rigs, O’Loughlin often used Sony’s PMW-TD300, which operates much like a broadcast 2D Sony camera. “With the TD300 you can get decent 3D as long as you don’t get closer than 15 feet [to subject],” O’Loughlin said. “It was quite comfortable on the shoulder and didn’t require much prep time.”

In all, more than 1,200 hours of footage was shot for “Deepsea Challenge 3D,” a two-hour documentary that was released to U.S. movie theaters in August 2014, with more than 80 percent of it in native 3D. While less than half was captured at 4K resolution or greater, the final theatrical product is in fact 4K, albeit 2K per eye. It is worth noting that none of the GoPro footage shot with Hero 1s and 2s made it into the IMAX version of the film, although it was used liberally in the TV version for NatGeo TV. Unfortunately, the 4K Hero3 wasn’t ready in time for this project.

For IMAX audiences, accustomed to 65 mm film footage, ultra-high definition is clearly a must, regardless of the subject matter. “‘Deepsea Challenge 3D’ demonstrated that deep sea exploration can also drive imaging technology by pushing us to capture the highlights in 4K and 5K, even 7 miles deep,” Pace said.