Omneon MediaGrid Technical Overview

Executive Summary

The primary goal of virtually every file-based production or broadcast workflow is to improve efficiency. Advanced media storage solutions, now capable of supporting media workflows from end-to-end, play a critical role in helping content producers and owners realize this goal.

The most significant change in media storage deployments lies in the shift toward centralized storage, which provides a single shared resource available to every application, as opposed to the conventional model in which independent storage systems serve different applications. The centralized model not only eliminates the time-consuming transfer of media between discrete storage systems, but also facilitates workflows in which multiple applications can access media concurrently. While there are many network storage solutions on the market, most do not effectively meet the demanding requirements of media workflows because they lack sufficient performance and key media-specific capabilities.

Omneon MediaGrid is an Ethernet-based network storage solution specially designed to provide users of media applications with massive bandwidth and consistently low latency without interruption or bottlenecks. Scalable bandwidth accommodates a growing volume of large media files and supports a large number of concurrent users. Low latency ensures that content can be accessed quickly and simultaneously by applications such as non-linear editors while maintaining the feel of local storage.

MediaGrid is optimized specifically for media workflows. For example, for quick-turn workflows such as News, MediaGrid offers “Active Transfers,” allowing content to be accessed while it is still being written. Dozens of leading media applications have been tested and deployed with MediaGrid.

This white paper includes technical details about how MediaGrid provides many of the world’s largest content producers and distributors a foundation for optimizing their file-based workflows.

1.0 Omneon MediaGrid File System Architecture and Components

The MediaGrid storage system utilizes a distributed file system designed to scale capacity from a few terabytes to multiple petabytes, and to scale bandwidth from one Gigabyte per second to tens of Gigabytes per second. It presents a single namespace to access a file system that spans many discrete hardware devices.

The MediaGrid file system contains two types of data: the actual file data and the metadata associated with the files (a description of files and their locations). File data stored on MediaGrid is further divided into slices ranging from 256KB to 8MB. Slice size is configurable by file type, at the folder level, or even at the level of a single file.
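To make the slicing idea concrete, the following is a minimal sketch of how a file's byte range could be divided into fixed-size slices. The function name `slice_ranges` and the validation logic are illustrative assumptions, not part of any Omneon API; only the 256KB to 8MB range comes from the text above.

```python
from typing import List, Tuple

KB = 1024
MB = 1024 * KB
MIN_SLICE = 256 * KB  # smallest slice size stated in the text
MAX_SLICE = 8 * MB    # largest slice size stated in the text


def slice_ranges(file_size: int, slice_size: int) -> List[Tuple[int, int]]:
    """Return (offset, length) pairs that cover a file of file_size bytes.

    Hypothetical helper: the real slicing is performed inside the FSD.
    """
    if not MIN_SLICE <= slice_size <= MAX_SLICE:
        raise ValueError("slice size must be between 256KB and 8MB")
    if file_size < 0:
        raise ValueError("file size must be non-negative")
    return [
        (offset, min(slice_size, file_size - offset))
        for offset in range(0, file_size, slice_size)
    ]


# A 10MB file cut into 4MB slices yields two full slices and a 2MB remainder.
ranges = slice_ranges(10 * MB, 4 * MB)
```

In this sketch the final slice is simply shorter than the others; how MediaGrid actually handles a partial last slice is not described in the source.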

Figure 1 below shows the three primary components of the MediaGrid system (the File System Driver, ContentDirectors, and ContentServers) along with the optional ContentStore and ContentBridge. A minimum system comprises a pair of ContentDirectors and a ContentServer.

Fig. 1: Omneon MediaGrid Architecture Components

• File System Driver: The File System Driver (FSD) is a software agent running on one or more clients that breaks up and reassembles files as slices are written to, or read from, a MediaGrid system. The FSD requests from the ContentDirectors the set of ContentServers it can read from or write to, opens multiple parallel connections to that set of ContentServers, and performs the transfer. This parallel access to data is unique and delivers close to the theoretical bandwidth limits of each client. The FSDs also intelligently cache data in client memory to reduce latency, and the amount of client memory used by the FSDs can be tuned. This combination of high-bandwidth access and low latency benefits not only large file transfers, but also high-performance editing applications that are extremely sensitive to both bandwidth and latency. FSDs are available for all primary operating systems used by applications in broadcast and media facilities, including Windows, Linux, and Mac OS X. To the application and operating system on the client, the FSD makes the MediaGrid file system appear as a single large network drive. By installing FSDs on each client that needs access to data stored on a MediaGrid system, clients have high-bandwidth and low-latency connectivity without using accelerators or gateways that may be required in other storage systems. Clients can use single or multiple 1GbE or 10GbE interfaces to access the network.

• ContentServer: ContentServers are disk storage devices that store and serve the file slices to FSD clients. A minimum system is comprised of a pair of ContentDirectors and at least one ContentServer. The ContentServer has sixteen internal drives to store the file slices, as well as built-in redundancy with dual active-active CPU controllers. Each of these controllers has eight CPU cores, two 10GbE ports, and 12GB of memory. If there is a need for more storage capacity or bandwidth than what is provided via a single ContentServer, an expansion unit called a ContentStore can be connected to the ContentServer via redundant 6Gb SAS cables. Each SAS cable has four 6Gb lanes (24Gbps) and connects into the SAS connectors in the ContentServer controller. A maximum of five ContentStores can be connected to each ContentServer in a daisy chain fashion to serve expansion needs. The controllers in the ContentServer have sufficient processing and networking resources to serve the file slices stored in the ContentServer itself and in the five ContentStores connected to it, ensuring no bandwidth bottlenecks.

As mentioned previously, the slices comprising a single file are distributed across different ContentServers and ContentStores by the FSDs. This distribution model prevents an entire file from residing in a single ContentServer or ContentStore. Processes called Virtual ContentServers run in the two controllers of the physical ContentServer to serve the file slices stored on the disks inside either the ContentServer or a ContentStore. There can be up to six Virtual ContentServer processes running, each representing a disk chassis (i.e., either the ContentServer or one of its five ContentStores). The six Virtual ContentServers are evenly distributed across the two controllers, and each Virtual ContentServer uses one controller as its primary and the other as its secondary. Each Virtual ContentServer has as many virtual IPs as there are physical Ethernet ports in the ContentServer, and the FSDs read and write via these virtual IPs in parallel. This parallel access is one of the key drivers behind the high performance capability of the MediaGrid.

The disks inside a ContentServer or ContentStore are configured into RAID groups (either RAID 4 or RAID 6), and the corresponding Virtual ContentServer process maps a file slice to a RAID group. As a result, a file slice is protected from drive failures by RAID and from controller failures by the redundant dual-controller architecture present in both the ContentServer and ContentStore.

• ContentDirector: ContentDirectors are devices that provide file metadata services such as directory lookups. In addition, they track the slices stored on the various ContentServers and provide this mapping information to FSDs. Two ContentDirectors maintain constant communication and synchronization to create a high availability cluster. The ContentDirectors take turns providing services in a round-robin manner. The metadata is stored locally in each ContentDirector and is kept synchronized across ContentDirectors using a journaling scheme. Periodic checkpoints of the metadata are also taken and stored on ContentServers to help roll back to a previous state, if necessary.

• ContentBridge (Optional): The ContentBridge is an optional appliance that functions similarly to a “head” in a traditional NAS system and provides standard network file access interfaces to MediaGrid for clients where it may not be feasible to install FSDs. The supported protocols are Common Internet File System (CIFS), File Transfer Protocol (FTP), Network File System (NFS) and Apple Filing Protocol (AFP). The ContentBridge runs the MediaGrid FSD for its own connectivity to a MediaGrid system. The standard ContentBridge appliance has two 1GbE ports for client connectivity, while the High Bandwidth ContentBridge (HBCB) provides 10GbE connectivity for handling a larger number of transfers. Multiple ContentBridges, or HBCBs, can be deployed to support a larger number of connections or transfers, allowing NAS access to scale without increasing storage capacity, assuming there is a sufficient number of ContentServers available to serve up the required bandwidth.

1.1 Omneon MediaGrid I/O Life Cycle

To illustrate the operation of an Omneon MediaGrid system, we will follow the lifecycle of an I/O. Figure 2 shows a simplified enumeration of the steps involved in reading a file from a MediaGrid system.

Fig. 2: Omneon MediaGrid File Read Steps

1. Client application makes a file open request. The FSD maps and forwards this open request to a ContentDirector.

2. The ContentDirector responds with a file handle.

3. The application read request along with the byte range is forwarded by the FSD to the ContentDirector.

4. The ContentDirector returns a map of sliceIDs belonging to the file and the list of Virtual ContentServers, to the FSD.

5. The FSD then reads the slices directly from the listed Virtual ContentServers and delivers them to the application.
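The read steps above can be sketched as a small in-memory simulation. Everything here is a hypothetical model for illustration: the class names mirror the components in the text, but the methods (`open`, `slice_map`, `read_slice`, `fsd_read`), the 4-byte slice size, and the use of a thread pool for parallel reads are assumptions, not the actual FSD protocol.

```python
from concurrent.futures import ThreadPoolExecutor

SLICE_SIZE = 4  # tiny slice size in bytes, for illustration only


class VirtualContentServer:
    """Hypothetical stand-in that holds slices in a dict."""

    def __init__(self):
        self.slices = {}  # slice_id -> bytes

    def read_slice(self, slice_id):
        return self.slices[slice_id]


class ContentDirector:
    """Hypothetical stand-in that tracks which server holds each slice."""

    def __init__(self):
        self.files = {}  # path -> ordered list of (slice_id, server)

    def open(self, path):
        # Steps 1-2: validate the open request and return a file handle.
        if path not in self.files:
            raise FileNotFoundError(path)
        return path

    def slice_map(self, handle, offset, length):
        # Steps 3-4: return the slices covering the requested byte range,
        # plus the file offset at which the first returned slice starts.
        first = offset // SLICE_SIZE
        last = (offset + length - 1) // SLICE_SIZE
        return self.files[handle][first:last + 1], first * SLICE_SIZE


def fsd_read(director, path, offset, length):
    if length <= 0:
        return b""
    handle = director.open(path)
    entries, base = director.slice_map(handle, offset, length)
    # Step 5: fetch the slices directly from the servers, in parallel.
    with ThreadPoolExecutor(max_workers=len(entries)) as pool:
        parts = list(pool.map(lambda e: e[1].read_slice(e[0]), entries))
    data = b"".join(parts)
    start = offset - base
    return data[start:start + length]


# Populate two servers with the slices of a 10-byte "file".
servers = [VirtualContentServer(), VirtualContentServer()]
director = ContentDirector()
payload = b"ABCDEFGHIJ"
entries = []
for i in range(0, len(payload), SLICE_SIZE):
    slice_id = i // SLICE_SIZE
    server = servers[slice_id % len(servers)]
    server.slices[slice_id] = payload[i:i + SLICE_SIZE]
    entries.append((slice_id, server))
director.files["/clip.mov"] = entries

result = fsd_read(director, "/clip.mov", 2, 6)
```

The point of the sketch is the division of labor: the ContentDirector only answers metadata questions, while the slice data itself flows directly between the client and the Virtual ContentServers.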

Similarly, the steps required to write a file to a MediaGrid system are shown in Figure 3.

Fig. 3: Omneon MediaGrid File Write Steps

1. Client application makes a file write request. The FSD then requests and gets a list of Virtual ContentServers and sliceIDs available for writes from the ContentDirectors.

2. The FSDs write the slices to the respective Virtual ContentServers.

3. Upon receiving a write request, a Virtual ContentServer writes the slice to either a ContentServer or a ContentStore.

4. Once the slice is written to either the NVRAM or disks, the Virtual ContentServer sends a commit message to the ContentDirector and an acknowledgement to the client FSD.
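The write steps above can be sketched in the same spirit. This is a hypothetical in-memory model: the method names (`allocate`, `commit`, `write_slice`, `fsd_write`) and the round-robin placement policy are assumptions made for illustration, and the slices are written sequentially here for simplicity, whereas the text describes parallel transfers.

```python
class ContentDirector:
    """Hypothetical stand-in for the metadata service."""

    def __init__(self):
        self.servers = []
        self.next_slice_id = 0
        self.committed = {}  # path -> list of (slice_id, server_name)

    def allocate(self, count):
        # Step 1: hand out slice IDs and target servers.
        # Round-robin placement is an assumption, not the documented policy.
        targets = []
        for _ in range(count):
            server = self.servers[self.next_slice_id % len(self.servers)]
            targets.append((self.next_slice_id, server))
            self.next_slice_id += 1
        return targets

    def commit(self, path, slice_id, server_name):
        self.committed.setdefault(path, []).append((slice_id, server_name))


class VirtualContentServer:
    """Hypothetical stand-in; the dict plays the role of NVRAM or disk."""

    def __init__(self, name, director):
        self.name = name
        self.director = director
        self.store = {}

    def write_slice(self, path, slice_id, data):
        self.store[slice_id] = data  # step 3: persist the slice
        self.director.commit(path, slice_id, self.name)  # step 4: commit
        return True  # step 4: acknowledgement back to the FSD


def fsd_write(director, path, data, slice_size):
    chunks = [data[i:i + slice_size] for i in range(0, len(data), slice_size)]
    targets = director.allocate(len(chunks))
    # Step 2: send each slice to its assigned Virtual ContentServer.
    acks = [
        server.write_slice(path, slice_id, chunk)
        for (slice_id, server), chunk in zip(targets, chunks)
    ]
    return all(acks)


director = ContentDirector()
director.servers = [VirtualContentServer(f"vcs{i}", director) for i in range(3)]
ok = fsd_write(director, "/news/clip.mxf", b"x" * 10, slice_size=4)
```

Because the commit reaches the ContentDirector only after the slice is stored, the metadata in this sketch never points at a slice that has not been written.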

1.2 Omneon MediaGrid Network Configuration and Topology

Communication between clients and a MediaGrid system (as well as inter-communication between MediaGrid components) uses an IP over Ethernet network fabric, as shown in Figure 4.

Fig. 4: Omneon MediaGrid Network Topology

The main advantage of Ethernet over other technologies is its widespread acceptance and availability. This advantage results in lower hardware costs, lower operating costs via wider familiarity by operations and support personnel, and faster innovation in the application layers.

Aggregated 1GbE or 10GbE links are used to achieve connectivity between MediaGrid and the existing network. No special host adapters are required on any client device, as all access to the system is via standard Ethernet connectivity. This allows the Omneon MediaGrid system to provide the connectivity and performance required and to seamlessly integrate into existing environments.

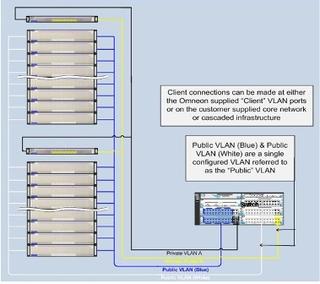

Networking within the MediaGrid comprises three subnets/VLANs: two private and one public. The ContentDirectors connect to each other through two private VLANs for redundancy, while the public VLAN is used to connect to the clients and Omneon MediaGrid ContentServers. Each ContentDirector has four 1GbE interfaces. Two are used for the two private VLANs and the other two for the public VLAN. The ContentDirectors provide a resilient DHCP service for the management of the public VLAN, including the assignment of IP addresses to ContentServers and ContentBridges. The ContentServers have multiple 1GbE interfaces or optical 10GbE interfaces, depending on the type of ContentServer, while the ContentBridges have two 1GbE interfaces, or a single 10GbE interface in the case of the High Bandwidth ContentBridge (HBCB). These interfaces are configured as part of the public VLAN. Sufficient networking ports on qualified switches must be allocated for both internal MediaGrid and external client communications.

Client connectivity is provided by way of additional VLANs, often referred to as the Client VLANs. The connectivity to the MediaGrid is achieved via a Layer 3 VLAN hop between the Client VLAN and the Omneon MediaGrid public VLAN. This separation ensures that the MediaGrid only processes essential client and inter-component traffic, and that no external traffic inhibits overall performance.

2.0 Performance and Capacity Scaling

The distributed design of MediaGrid combined with FSD-enabled parallel access paths to clients provides the ability to start with a small configuration and then scale performance to tens of gigabytes per second, and to scale capacity to multiple petabytes. The unique benefits of the MediaGrid approach to scaling are:

• Pay-as-you-go expansion: This ability to start small and scale in small increments (as small as one ContentServer or one ContentStore at a time) as your business needs grow enables just-in-time upgrades and avoids locking up capital in storage that may not be immediately used.

• Investment protection: The ability to scale storage capacity and I/O bandwidth protects the initial investment without having to do “forklift” upgrades to achieve higher levels of performance or capacity.

• Non-disruptive capacity expansion: Online expansion provides the ability to expand storage without interrupting clients reading from, and writing to, the file system. Simply add new ContentServers or ContentStores to expand, and the new space is automatically recognized by the MediaGrid system in less than a minute.

• Increased performance for existing and new data: Existing data on an expanded MediaGrid is automatically rebalanced across newly added ContentServers or ContentStores, providing higher read bandwidth access even for existing data. Any new data written to a MediaGrid system after the expansion is written and distributed across all ContentServers and ContentStores, both old and new.

• Leveraging latest generation ContentServers and disk drives: Every year, advances are made in server hardware and disk drive technology. Customers can utilize the latest server hardware and disk drives for capacity expansion, allowing users to invest in the latest technology and not be locked into using legacy offerings.

• Shared bandwidth across applications: By having a single volume and name space, all applications and users share in the additional capacity and bandwidth, eliminating issues associated with the traditional approach of allocating additional storage to a specific application or user.

3.0 Data Protection and Recovery

MediaGrid is designed for high availability, with an array of hardware and software features to protect against component failures.

3.1 Protection from disk drive failures using Software RAID

Omneon MediaGrid protects data and its availability from drive failures with a very efficient Software RAID implementation. MediaGrid supports two RAID types: RAID 4 (Single Parity) and RAID 6 (Dual Parity). RAID 4 uses two data disks and one parity disk in a (2+1) RAID group, while RAID 6 uses six data disks and two parity disks (6+2). With these flexible RAID options, the sixteen drives inside a ContentServer or a ContentStore can be configured as either:

Two groups of (6+2) in RAID 6, or

Five groups of (2+1) + 1 spare drive in RAID 4
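The drive arithmetic behind these two layouts can be checked with a short sketch. The function name `layout` is an illustrative assumption; the group sizes and the sixteen-drive chassis come from the text.

```python
DRIVES_PER_CHASSIS = 16  # drives in a ContentServer or ContentStore


def layout(groups, data_per_group, parity_per_group, spares):
    """Summarize a RAID layout and verify that it fills the chassis."""
    total = groups * (data_per_group + parity_per_group) + spares
    if total != DRIVES_PER_CHASSIS:
        raise ValueError(f"layout uses {total} drives, expected {DRIVES_PER_CHASSIS}")
    data_drives = groups * data_per_group
    return {
        "data_drives": data_drives,
        "usable_fraction": data_drives / total,
    }


raid6 = layout(groups=2, data_per_group=6, parity_per_group=2, spares=0)
raid4 = layout(groups=5, data_per_group=2, parity_per_group=1, spares=1)
```

Under these figures, the RAID 6 layout leaves twelve of sixteen drives for data (75%), while the RAID 4 layout leaves ten of sixteen (62.5%), in exchange for smaller groups and a dedicated spare.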

With RAID 6, when a failed drive is replaced, automatic rebuilds are initiated in the background. In the case of a drive failure in a RAID 4 configuration, the spare drive is utilized for rebuilds. The rebuilds take place at a lower priority relative to client I/O and do not significantly impact performance.
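As a generic illustration of how a single-parity rebuild works, the sketch below uses the XOR parity of a standard (2+1) RAID 4 group. It shows the general technique only; the internals of MediaGrid's Software RAID are not described in the source, and the block contents are made-up values.

```python
def xor_blocks(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length blocks."""
    if len(a) != len(b):
        raise ValueError("blocks must be the same length")
    return bytes(x ^ y for x, y in zip(a, b))


# Two data blocks and their parity, as in a (2+1) group.
d0 = b"\x01\x02\x03\x04"
d1 = b"\x10\x20\x30\x40"
parity = xor_blocks(d0, d1)

# If the drive holding d1 fails, its contents can be recomputed
# from the surviving data block and the parity block.
rebuilt_d1 = xor_blocks(d0, parity)
```

The rebuild reads every surviving drive in the group, which is why running it at lower priority than client I/O matters for performance.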

3.2 Protection from ContentServer hardware failures using redundant components

ContentServers and ContentStores each include two hot-swappable controllers and two hot-swappable power supplies. In normal operation, both controllers are actively serving data. In the event of a controller failure, clients automatically fail over to the remaining active controller. The failed controller can then be replaced while the system is online, and I/O can be rebalanced between the two controllers to return to a normal operating mode. In the event of a power supply failure, the second power supply can handle all the power needs until the failed power supply is replaced, again without interrupting service.

3.3 Recovery from ContentDirector Failures

ContentDirectors are in an active/active cluster configuration with regular communication between them. If a ContentDirector fails, the impact is minimal, as the remaining active ContentDirector continues to provide metadata services. Any replacement ContentDirector connects to the cluster, identifies itself and requests a file system update. This update populates the new ContentDirector with the current state of the MediaGrid cluster and file system metadata. ContentDirectors also include two disk drives in a mirrored configuration, so the loss of a single drive does not take down a ContentDirector. As an added safeguard, checkpoints of the metadata in ContentDirectors are taken at regular intervals and written to the ContentServers.

4.0 Authentication and Access Control

Both ContentServers and ContentDirectors act primarily as servers, processing requests from clients and returning results, although they do communicate with each other. In view of this, MediaGrid supports comprehensive authentication and access control capabilities to ensure that only authorized clients can submit requests, and that they can only submit approved requests.

Omneon MediaGrid supports both Active Directory (AD) and Domain Controller (DC) for authentication for Windows clients. For Linux clients, Lightweight Directory Access Protocol (LDAP) is supported. For Macintosh clients, Active Directory, LDAP and Apple Open Directory are all supported.

The Omneon ContentManager (a Microsoft Windows based application) allows administrators to set user-level and group-level security for the various MediaGrid files and directories by using Access Control Lists (ACLs). File access by users is checked against these ACLs for each file.

The Omneon MediaGrid system receives User and Group IDs when it joins a directory server such as a domain controller or Active Directory system, and these IDs are stored in the ContentDirectors. ContentManager receives the ACLs when it mounts an Omneon MediaGrid file system, and contacts the domain controller or directory server to resolve user names.
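The per-file ACL check described above can be illustrated with a minimal sketch. The data model (`Acl`, `is_allowed`, and the "read"/"write" action names) is entirely hypothetical and is not taken from ContentManager; it only shows the general shape of a user-level and group-level permission lookup.

```python
from dataclasses import dataclass, field


@dataclass
class Acl:
    """Hypothetical per-file ACL with user-level and group-level entries."""
    user_perms: dict = field(default_factory=dict)   # user -> set of actions
    group_perms: dict = field(default_factory=dict)  # group -> set of actions


def is_allowed(acl, user, groups, action):
    """Grant access if the user, or any of the user's groups, has the action."""
    if action in acl.user_perms.get(user, set()):
        return True
    return any(action in acl.group_perms.get(g, set()) for g in groups)


acl = Acl(
    user_perms={"alice": {"read", "write"}},
    group_perms={"editors": {"read"}},
)

alice_can_write = is_allowed(acl, "alice", [], "write")
bob_can_read = is_allowed(acl, "bob", ["editors"], "read")
bob_can_write = is_allowed(acl, "bob", ["editors"], "write")
```

This sketch treats permissions as purely additive; whether MediaGrid ACLs support explicit deny entries is not stated in the source.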

5.0 Media Optimized

Traditional IT-focused storage systems treat media data no differently than any other type of data. This approach fails to appreciate that digital media files are a highly specialized file type, and limits their ability to effectively support media applications. Omneon MediaGrid is media aware, i.e., it knows that the data being stored is media. MediaGrid makes use of the Omneon Media API, which gives it knowledge of related files—video and audio tracks for example—and the ability to construct coherent media files while recording is still in progress.

Omneon MediaGrid also has the ability to tune read and write performance based on the media file type. For example, the system can automatically segment relatively small AIFF audio files into 256KB slices, while Apple ProRes 422 video files would be segmented into 4MB slices. These files might be associated with the same piece of content.
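A per-type slice size policy of this kind could be expressed as a simple lookup, sketched below. The table, the function name `slice_size_for`, the 1MB default, and the assumption that ProRes 422 content arrives in `.mov` files are all illustrative; only the 256KB and 4MB figures come from the text.

```python
import os

KB = 1024
MB = 1024 * KB

DEFAULT_SLICE = 1 * MB  # assumed fallback; the source does not state a default

# Hypothetical policy table keyed by file extension.
SLICE_BY_EXTENSION = {
    ".aiff": 256 * KB,  # small audio files (figure from the text)
    ".aif": 256 * KB,
    ".mov": 4 * MB,     # assumed container for Apple ProRes 422 video
}


def slice_size_for(path: str) -> int:
    """Pick a slice size from the file extension, case-insensitively."""
    extension = os.path.splitext(path)[1].lower()
    return SLICE_BY_EXTENSION.get(extension, DEFAULT_SLICE)


audio_slice = slice_size_for("/projects/promo/voiceover.AIFF")
video_slice = slice_size_for("/projects/promo/promo_v2.mov")
other_slice = slice_size_for("/projects/promo/notes.txt")
```

In this example the audio and video files belong to the same project folder yet receive different slice sizes, matching the point that related files can be tuned independently.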

6.0 Omneon MediaGrid vs. Alternative File Storage Architectures

Across the industry there are several different approaches to file storage for media workflows.

6.1 Direct Attached Storage

Direct Attached Storage (DAS) is a common approach whereby file storage is provided by disks captive to one specific server. This approach can appear to be economical, as adding disks to a server is relatively inexpensive. However, the hidden costs of DAS can be enormous, as data must be replicated (often numerous times) in order to “share” it; there can be significant reliability issues associated with DAS; expanding storage capacity is often disruptive and there may be problematic limitations on maximum configuration size; and DAS results in a highly inflexible infrastructure that is problematic to adapt to change.

Given the limitations of DAS, many organizations that do significant handling of digital media choose to utilize shared central storage, which generally falls into the categories of clustered NAS systems or SAN file systems.

6.2 Clustered NAS Systems

A clustered NAS (Network Attached Storage) system uses a distributed file system running simultaneously on multiple servers connected via an Ethernet and/or InfiniBand network. The key difference between clustered and traditional NAS is the ability to distribute (“stripe”) data and metadata across the cluster nodes or storage devices. Clustered NAS typically provides unified access to files from any of the cluster nodes via a global name space.

The key differences between Omneon MediaGrid and clustered NAS solutions are in the areas of throughput and latency. As discussed earlier, with Omneon MediaGrid, metadata requests to ContentDirectors are handled out-of-band, separately from file data access. Once a ContentDirector forwards a list of ContentServers storing file slices for the pertinent file, the client requests the file slices via the FSD directly and in parallel to optimize latency and throughput.

In comparison, in clustered NAS systems, metadata requests are handled in-band. Each node in the cluster is responsible for providing both metadata and data services to clients, and each client request to the storage system involves resolving metadata requests, which in turn increases overall latency. In addition, each cluster node is responsible for satisfying file data access requests, which includes communicating with other nodes in the cluster to aggregate data prior to responding to a client. This indirect data hop negatively impacts overall bandwidth and latency for file access.

6.3 SAN File Systems

A shared disk SAN file system uses a fibre channel storage area network (FC SAN) to provide direct disk access from multiple computers at the block level. Clients must use fibre channel Host Bus Adapters (HBA) to translate from application-generated file-level requests into block-level operations used by the SAN.

What differentiates MediaGrid from SAN file systems is simplicity and total cost of ownership. The use of an all-Ethernet infrastructure by Omneon MediaGrid lowers overall cost due to the broad ecosystem of Ethernet equipment suppliers. In addition, Omneon MediaGrid can leverage a breadth of client network interface options ranging from built-in Ethernet ports on the client system to optional 10GbE add-in interface cards.

In comparison, SAN file systems require expensive fibre channel SAN infrastructure, including switches and HBAs. These additional hardware components increase the points of failure and negatively impact overall reliability. In addition, FC SAN tools and administrators with FC SAN management skills are relatively expensive, which results in higher operational expenses for FC SAN infrastructure.

Beyond equipment cost, Ethernet infrastructure is easier and more efficient to manage from a cost standpoint due to the range of tools available as well as the vast number of network administrators who are familiar with Ethernet.

7.0 Conclusion

The Omneon MediaGrid system is a clustered storage solution designed specifically for file-based digital media workflows. Its industry-leading performance and scalability, combined with built-in media intelligence, allow it to play a central role as the active hub in media production workflows.