Cloud Performance—Noisy Neighbors And Bare-Metal Servers

Al Kovalick

Utility computing and job performance are at odds. On one hand, utility cloud resources, including compute, storage and networking, are appealing for a number of reasons previously discussed in this column. On the other hand, utility implies resource sharing, and this in turn limits the ability to guarantee a desired performance level during the lifetime of a workload. Performance metrics include latency, CPU utilization, I/O, storage IOPS, and repeatability across the time of day or month. Performance may be measured using benchmarking software such as Geekbench 3 from Primate Labs.
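Repeatability is the metric most easily overlooked. As a minimal sketch, assuming you have already collected a series of benchmark scores (higher is better) from one instance at different times of day, the spread can be summarized with a coefficient of variation and a best-to-worst ratio. The function name and the sample scores below are hypothetical; this does not read any Geekbench output format.

```python
import statistics


def repeatability_report(scores):
    """Summarize how consistent a series of benchmark scores is.

    scores: results (higher is better) sampled at different times of
    day or days of the month on the same instance. Hypothetical helper.
    """
    if len(scores) < 2:
        raise ValueError("need at least two samples")
    mean = statistics.mean(scores)
    stdev = statistics.stdev(scores)  # sample standard deviation
    return {
        "mean": mean,
        "cv": stdev / mean,                          # coefficient of variation
        "best_to_worst": max(scores) / min(scores),  # spread factor
    }


# Hypothetical single-core scores sampled every four hours on one instance.
samples = [3000, 2950, 1500, 1450, 2900, 3000]
report = repeatability_report(samples)
print(f"mean={report['mean']:.0f} cv={report['cv']:.2f} "
      f"best/worst={report['best_to_worst']:.2f}")
```

A best-to-worst ratio near 1.0 indicates a repeatable environment; these made-up samples show a roughly twofold swing.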

Some workloads are not performance-critical. Take, for example, an inventory-tracking web app: if the UI response is similar to that of a local desktop app, users won’t complain. But a latency-sensitive, real-time AV encoding service requires a much higher sustained performance level. The bottom line is that system designers want to match performance to the workload. What choices are available? Let’s look at the landscape offered by generic cloud computing.

INFRASTRUCTURE AS A SERVICE

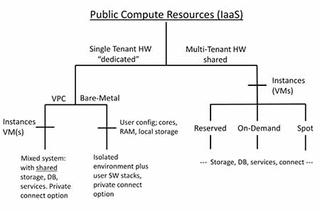

First, users will achieve the most sustained performance from a strictly private cloud. Users own all the resources and can control and allocate performance to workloads as needed. But what is achievable when using the public cloud? Fig. 1 shows the landscape of public cloud choices related to performance.

Infrastructure-as-a-Service (IaaS) is the most general case to consider. It can be divided into two main domains: multi-tenant and single-tenant.

Multi-tenant refers to disparate users sharing the same underlying compute and local storage resources. For example, assume four different external users share the same server CPU, each running a single app. Each app behaves as if it owns the CPU, but in fact the apps share it. Sharing is realized by running each user’s app in its own virtual instance. An instance is a software environment that enables apps to run in isolation on the same hardware. It is also called a “virtual machine,” or VM, and it partitions a single physical server so that it acts as multiple servers. If one instance crashes, the others are not affected. Virtual machines are the basis of most cloud computing, and they make it easy to install, migrate, run and remove instances and apps. To many observers, the VM ignited the cloud revolution.
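To see why a shared CPU produces variable performance, consider an idealized model of the four-tenant example. The sketch below assumes the CPU is divided by max-min fairness (tenants asking for less than an equal share get what they ask for, and the remainder is split equally among the rest). Real hypervisor schedulers are more complex; the function and the tenant demands are hypothetical and only illustrate the effect.

```python
def max_min_fair_share(capacity, demands):
    """Split `capacity` among tenants using max-min fairness (idealized)."""
    allocation = {name: 0.0 for name in demands}
    remaining = dict(demands)  # tenants not yet fully satisfied
    left = float(capacity)
    while remaining and left > 1e-12:
        share = left / len(remaining)
        # Tenants whose demand fits within the equal share get all of it.
        satisfied = {n: d for n, d in remaining.items() if d <= share}
        if not satisfied:
            # Everyone left wants more than an equal share: split evenly.
            for n in remaining:
                allocation[n] += share
            left = 0.0
            break
        for n, d in satisfied.items():
            allocation[n] += d
            left -= d
            del remaining[n]
    return allocation


# Four hypothetical tenants sharing 100% of one server CPU.
busy = max_min_fair_share(100, {"inventory": 10, "web": 20, "encoder": 80, "batch": 90})
# Same tenants, but the batch job is idle.
quiet = max_min_fair_share(100, {"inventory": 10, "web": 20, "encoder": 80, "batch": 0})
print(busy["encoder"], quiet["encoder"])
```

Under these assumptions the encoder receives 35 percent of the CPU while the batch neighbor is busy and 70 percent when it is idle, even though the encoder itself changed nothing.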

Multi-tenant resources are often sold as reserved, on-demand or spot instances. These levels relate not to performance directly but to pricing. For example, a reserved instance is best used for a long-term commitment (typically one year or longer), while on-demand is great for less persistent workloads such as code testing and other intermittent needs. Spot instances are priced based on excess compute supply, and prices vary widely depending on system usage. Spot instances are fickle, since the provider can reclaim them when demand rises, so beware; but if the shoe fits, wear it.
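The reserved versus on-demand choice reduces to simple arithmetic on utilization. Here is a minimal sketch, assuming a flat hourly on-demand rate and a flat annual reserved price for the same instance type; both prices are hypothetical, and spot pricing is not modeled because it fluctuates.

```python
HOURS_PER_YEAR = 8760


def break_even_utilization(on_demand_hourly, reserved_annual):
    """Fraction of the year an instance must run before a reservation pays off."""
    return reserved_annual / (on_demand_hourly * HOURS_PER_YEAR)


def cheaper_option(on_demand_hourly, reserved_annual, utilization):
    """Compare annual cost of on-demand use at `utilization` (0..1) to a reservation."""
    on_demand_annual = on_demand_hourly * HOURS_PER_YEAR * utilization
    return "reserved" if reserved_annual < on_demand_annual else "on-demand"


ON_DEMAND = 0.50   # hypothetical $/hour
RESERVED = 2628.0  # hypothetical $/year for the same instance type

encoder_util = 1.0                          # long-running service, 24/7
test_util = (8 * 5 * 52) / HOURS_PER_YEAR   # code testing, 8 h/day on weekdays

print(break_even_utilization(ON_DEMAND, RESERVED))
print(cheaper_option(ON_DEMAND, RESERVED, encoder_util))
print(cheaper_option(ON_DEMAND, RESERVED, test_util))
```

With these made-up prices the break-even point is 60 percent utilization, so the always-on service favors a reservation and the intermittent test workload favors on-demand.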

Tenants consuming shared resources have been likened to noisy neighbors. Ideally, apps run in perfect isolation, but the demands of other tenants create “performance noise” for each instance. Long-term storage and networking resources are also shared in a multi-tenant environment, so overall app/service performance metrics can deviate by a factor of two or three depending on the time of day or month. So, what strategies can reduce the noise from neighbors?

DEDICATED RESOURCES

In Fig. 1, the left side outlines two common practices for accessing “dedicated” resources from a public provider: the virtual private cloud (VPC) and the so-called bare-metal cloud. Both improve performance, but by different methods. In the VPC case, isolation between users comes from private network segmentation (private IP subnets plus VLANs, VXLANs or similar) and from dedicated instances running on servers committed to a single user (or account). So, the noisy neighbor problem is reduced but not eliminated completely. Why?
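As an illustration of the IP side of VPC isolation, the sketch below carves a private address block into per-tier subnets with Python’s standard `ipaddress` module and checks that they do not overlap. The 10.20.0.0/16 block and the tier names are hypothetical; VLAN or VXLAN segmentation happens at a lower layer and is not modeled here.

```python
import ipaddress

# Hypothetical private address block assigned to one customer's VPC.
vpc = ipaddress.ip_network("10.20.0.0/16")
assert vpc.is_private  # RFC 1918 space, not routable on the Internet

# Carve one /24 subnet per (hypothetical) facility tier.
tiers = ["ingest", "transcode", "playout"]
subnets = dict(zip(tiers, vpc.subnets(new_prefix=24)))
for name, net in subnets.items():
    print(f"{name:10s} {net}  ({net.num_addresses} addresses)")

# Confirm that no two tier subnets overlap.
nets = list(subnets.values())
overlaps = [(a, b) for i, a in enumerate(nets) for b in nets[i + 1:] if a.overlaps(b)]
print("overlaps:", overlaps)
```

Non-overlapping private ranges also make it easier to join the VPC to an on-premises network later without address conflicts.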

Most VPC systems still rely on shared persistent storage, network routers, database services and the like. To the extent that an application uses these shared resources, its performance will be affected. Even so, the VPC road can be used to create a seamless integration between a local facility data center and the cloud for many practical workloads. Also available are direct-connect access paths (10 Gbps, pay by the bit) that bypass the Internet for the ultimate in security, access rates and QoS.
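For media files, a quick estimate of what a 10 Gbps direct-connect path means in practice can help with planning. This minimal sketch assumes decimal gigabytes, a link efficiency of 90 percent after protocol overhead, and a hypothetical per-gigabyte transfer price; actual billing terms vary by provider and are not represented here.

```python
def transfer_time_seconds(size_gb, link_gbps, efficiency=0.9):
    """Seconds to move size_gb (decimal GB) over a link_gbps link.

    efficiency: assumed fraction of line rate achieved after overhead.
    """
    bits = size_gb * 8e9  # gigabytes -> bits
    return bits / (link_gbps * 1e9 * efficiency)


def transfer_cost(size_gb, price_per_gb):
    """Cost under a simple, hypothetical per-gigabyte price."""
    return size_gb * price_per_gb


seconds = transfer_time_seconds(500, 10)   # 500 GB of media over 10 Gbps
cost = transfer_cost(500, 0.02)            # hypothetical $0.02 per GB
print(f"{seconds / 60:.1f} min, ${cost:.2f}")
```

Under these assumptions 500 GB moves in roughly seven and a half minutes.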

The second choice is termed bare-metal. In the context of this article, this is just a “naked server” with RAM, CPU cores and local storage (~150 TB max in 2014) specified by the user. Bare-metal cloud servers don’t require a hypervisor layer. Workloads are deployed on servers with a preconfigured OS, but no other cloud provider software is added; users define the software stack above the OS. The servers are administered through a management platform that handles deployment as well as capacity and usage monitoring. Servers can be deployed quickly (though not as fast as a VM) and are billed per hour or per month. As with the VPC, private networking and Internet-bypass access are available.

Bare-metal servers offer the ultimate in performance from a public cloud provider. The user controls the entire software stack, and there is no hypervisor tax, the overhead of the VM layer in a virtualized environment, which can be as much as 10 percent. Of course, if user apps require cloud-based persistent storage or database engines, there will be some resource sharing. Even in the enterprise, resources are shared; think SAN or LAN. Bare-metal servers command a higher price per unit of compute than their garden-variety virtualized cousins. There is no free lunch; if you need performance, expect to pay for it. Still, the current bare-metal offerings from the likes of IBM/SoftLayer, Internap and Rackspace are starting to gain traction as some users have soured on noisy neighbors.
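The trade-off between the hypervisor tax and the bare-metal price premium can be framed as cost per job. This is a sketch under stated assumptions: the same hardware delivers a fixed number of jobs per hour on bare metal, the VM loses a flat 10 percent of that throughput, and the hourly prices are hypothetical.

```python
def cost_per_job(hourly_price, bare_metal_jobs_per_hour, hypervisor_tax=0.0):
    """Cost of one job when a VM layer reduces throughput.

    hypervisor_tax: fractional throughput loss (0.10 means 10 percent).
    """
    effective_rate = bare_metal_jobs_per_hour * (1.0 - hypervisor_tax)
    return hourly_price / effective_rate


JOBS_PER_HOUR = 20  # hypothetical encode jobs/hour on bare metal

vm = cost_per_job(1.00, JOBS_PER_HOUR, hypervisor_tax=0.10)  # hypothetical VM price
bare = cost_per_job(1.20, JOBS_PER_HOUR)                     # hypothetical bare-metal price
# Bare-metal hourly price at which per-job cost would equal the VM's.
parity_price = 1.00 / (1.0 - 0.10)
print(f"VM ${vm:.4f}/job, bare metal ${bare:.4f}/job, parity at ${parity_price:.3f}/h")
```

With these made-up numbers the VM is slightly cheaper per job. The bare-metal premium buys sustained, predictable performance free of noisy neighbors, which this simple cost model does not capture.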

What does all this mean for the media facility? Most migrations to the cloud start with an inventory of existing apps and services. The analysis should include assigning a performance metric to each app or service. Only then can you make an intelligent decision between a single- or multi-tenant public environment and a private, roll-it-yourself system at home.
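One way to make that inventory actionable is to record a latency budget and a tolerable performance swing for each service and map them to an environment. The sketch below is hypothetical throughout: the `Service` fields, the thresholds and the "media asset search" entry are illustrative assumptions, not a recommended policy.

```python
from dataclasses import dataclass


@dataclass
class Service:
    name: str
    max_latency_ms: float       # worst acceptable response/processing latency
    tolerable_variation: float  # acceptable best/worst performance ratio


def suggest_environment(svc):
    """Hypothetical placement rule mapping performance needs to a cloud tier."""
    if svc.tolerable_variation < 1.1 or svc.max_latency_ms < 10:
        return "private cloud or bare-metal"
    if svc.tolerable_variation < 1.5:
        return "VPC / dedicated instances"
    return "multi-tenant IaaS"


inventory = [
    Service("inventory-tracking web app", max_latency_ms=500, tolerable_variation=3.0),
    Service("real-time AV encoder", max_latency_ms=5, tolerable_variation=1.05),
    Service("media asset search", max_latency_ms=200, tolerable_variation=1.3),
]
placements = {s.name: suggest_environment(s) for s in inventory}
for name, env in placements.items():
    print(f"{name:28s} -> {env}")
```

A service that tolerates the twofold to threefold swings typical of multi-tenant IaaS can stay there, while tight latency or repeatability targets push it toward dedicated resources.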

Al Kovalick is the founder of Media Systems Consulting in Silicon Valley. He is the author of “Video Systems in an IT Environment (2nd ed).” He is a frequent speaker at industry events and a SMPTE Fellow. For a complete bio and contact information, visit www.theAVITbook.com.