Applied Technology: Captioning Made Easy (and Affordable)

Closed captioning is required by government mandates, yet it has never been an easy task for a broadcaster or Internet video service provider. The challenge has always been to reduce cost and speed up the process so that it has minimal effect on the production chain.

Captions can be inserted either during or before a telecast. Live captions are usually inserted during a broadcast by well-trained operators using a dictation engine, which results in higher costs and a high error rate. Here we’d like to focus on inserting captions before a broadcast, an approach that also applies to most Internet video.

Adding captions before the broadcast involves creating a timed text transcription. This usually means outsourcing to a captioning vendor that downloads your video, transcribes it, adds timecode and delivers it back to you. There is also a wide variety of software packages that can do this, some free, some not.

Once you have a timed script, there are three main ways to insert captions.

The first method is to encode the captions into your video during post-production. Software packages like MacCaption generate closed caption streams that you can import into your Final Cut Pro sequence, which you can then print to tape or master out to a file. This involves time-consuming encoding steps, but ensures a tight binding of your captions with the video.

The second way is to insert captions on-the-fly during the broadcast. This requires a caption transmission server that communicates with your video server and scheduling system to call up the program's timed caption file prior to broadcast and trigger the captions in real time, based on timecode information striped to the transmitted video. While this offers fast turnaround and flexibility, it can also cause frequent errors when the wrong metadata or striped timecodes are used.
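To make the timecode-triggered approach concrete, here is a minimal sketch of the lookup such a server has to perform. It assumes non-drop-frame `HH:MM:SS:FF` timecode at 25 fps and a cue list sorted by start time; the function names and the cue layout are illustrative, not taken from any particular product.

```python
from bisect import bisect_right


def timecode_to_frames(tc: str, fps: int = 25) -> int:
    """Convert a non-drop-frame 'HH:MM:SS:FF' timecode to a frame count."""
    hours, minutes, seconds, frames = (int(part) for part in tc.split(":"))
    return ((hours * 60 + minutes) * 60 + seconds) * fps + frames


def active_caption(cues, tc, fps=25):
    """Return the caption text that should be on air at timecode `tc`.

    `cues` is a list of (start_tc, end_tc, text) tuples, sorted by start
    time and non-overlapping (an assumption of this sketch).
    Returns None when no cue covers `tc`.
    """
    now = timecode_to_frames(tc, fps)
    starts = [timecode_to_frames(cue[0], fps) for cue in cues]
    # Index of the last cue that starts at or before the current frame.
    i = bisect_right(starts, now) - 1
    if i < 0:
        return None
    _, end_tc, text = cues[i]
    return text if now < timecode_to_frames(end_tc, fps) else None


cues = [
    ("00:00:01:00", "00:00:03:00", "Hello"),
    ("00:00:04:00", "00:00:06:12", "World"),
]
print(active_caption(cues, "00:00:02:10"))
```

The lookup is only as good as the timecode it is given: if the striped timecode is offset or belongs to a different program, the same code returns the wrong cue or none at all, which is the failure mode described above.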

The third and easiest way is directed more at Internet or offline video. This involves converting the timed text into a specific captioning file format that your video hosting or offline video authoring system supports – like SMI or SRT.
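As an illustration of that conversion step, the sketch below turns a list of timed cues into SRT text. The input layout (start and end in seconds plus the caption text) and the function names are assumptions made for this example; SRT itself is simply a numbered cue, a `HH:MM:SS,mmm --> HH:MM:SS,mmm` line, the text, and a blank line.

```python
def srt_timestamp(seconds: float) -> str:
    """Format a time in seconds as an SRT timestamp (HH:MM:SS,mmm)."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def to_srt(cues) -> str:
    """Render (start_seconds, end_seconds, text) cues as SRT text."""
    blocks = []
    for index, (start, end, text) in enumerate(cues, start=1):
        blocks.append(
            f"{index}\n{srt_timestamp(start)} --> {srt_timestamp(end)}\n{text}\n"
        )
    # Joining with a newline leaves one blank line between cues.
    return "\n".join(blocks)


print(to_srt([(0.0, 2.5, "Hello"), (3.0, 4.0, "World")]))
```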

i-Yuno has spent the last 12 years providing subtitling and captioning services to the most high-profile multinational broadcasters and understands the full ecosystem well. The company has dedicated itself to developing technologies that address these requirements and has come up with two new revolutionary captioning concepts: cloud and digital fingerprints.

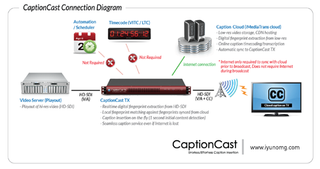

For broadcast linear TV channels, the combination of i-Yuno’s caption services, CaptionCast (a hardware-based caption inserter) and iMediaTrans (a cloud-based captioning service) offers a seamless, highly efficient and fully automated captioning/subtitling workflow.

First, CaptionCast caption inserters (servers) are installed in your facility at any transmission point where video is streamed over an SDI infrastructure. Next, you upload your video securely to the iMediaTrans cloud, and that’s all you need to do. i-Yuno’s captioning team is immediately notified and starts working on the transcription and timecode stamping. During this process, a full-length digital audio fingerprint of your video is generated in the background. Digital fingerprints are very small pieces of binary data that are used to uniquely identify content (this is not voice recognition).

Once this is complete, the timed text and its matching fingerprint are synchronized to the CaptionCast inserters inside your facility over a low-bandwidth Internet connection (caption files and digital fingerprints are very small). CaptionCast then extracts 1-2 second buffers of fingerprints from the incoming SDI signal and compares them with the thousands of fingerprints stored locally. This comparison does not involve an Internet connection. Once CaptionCast matches a piece of content, it streams out the related captions on-the-fly, without human intervention.
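The article does not disclose how CaptionCast computes or compares fingerprints, so the following is only a toy sketch of the general idea of matching a short buffer against a local library. It assumes each fingerprint is a sequence of 32-bit integers (one per short slice of audio) and that similarity is measured by counting differing bits; the names `best_match` and `bit_errors`, the threshold and the data layout are all hypothetical.

```python
def bit_errors(a: int, b: int) -> int:
    """Count the bits that differ between two sub-fingerprints."""
    return bin(a ^ b).count("1")


def best_match(query, library, max_error_rate=0.2, bits=32):
    """Find where a short fingerprint buffer occurs in a local library.

    `query` is a list of integer sub-fingerprints from the incoming signal.
    `library` maps a content ID to that content's full-length fingerprint.
    Returns (content_id, offset) for the closest window whose bit error
    rate is within `max_error_rate`, or None if nothing is close enough.
    """
    if not query:
        return None
    threshold = max_error_rate * bits * len(query)
    best = None  # (errors, content_id, offset)
    for content_id, reference in library.items():
        # Slide the buffer across every position of the stored fingerprint.
        for offset in range(len(reference) - len(query) + 1):
            window = reference[offset:offset + len(query)]
            errors = sum(bit_errors(q, r) for q, r in zip(query, window))
            if errors <= threshold and (best is None or errors < best[0]):
                best = (errors, content_id, offset)
    return None if best is None else (best[1], best[2])


library = {
    "show_a": [0x0F0F0F0F, 0x12345678, 0xDEADBEEF, 0x0BADF00D, 0xCAFEBABE],
    "show_b": [0xFFFFFFFF, 0x00000000, 0xAAAAAAAA, 0x55555555],
}
# A buffer taken from show_a at offset 2, with one bit flipped by "noise".
print(best_match([0xDEADBEEF ^ 0x1, 0x0BADF00D], library))
```

Because the match returns an offset as well as a content ID, the inserter knows both which caption file to use and where in it to start. A production system would use an index rather than this brute-force scan, but the whole comparison can still run locally.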

This is a revolutionary workflow as it gives customers the freedom to focus more on other core broadcast operations and less on captioning. Uploading to the cloud is all the customer has to do. It requires no integration with existing scheduling systems and uses the audio itself instead of metadata or video striped timecodes. The iMediaTrans Cloud hosts video securely with CDN acceleration so that customers can access the video with captions anytime, anywhere. Customers can also export from the cloud to various captioning file formats for repurposing.

Another interesting part of the cloud is iMediaTrans Automatic Recut. Many customers these days repurpose their content after the first airing. In some workflows this involves re-timecoding (or recutting) the captions, which is typically done manually. For linear TV, the CaptionCast system checks the incoming stream every second and immediately resyncs the captions after any video edit. The same technology is also offered for non-linear video via iMediaTrans Recut. By simply uploading the original video, its matching timed captions in any format, and an edited version of the original video, you receive a re-timecoded, accurately timed caption file in minutes. This is all provided from the iMediaTrans Cloud.
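The retiming step can be sketched as follows. The sketch assumes an earlier matching stage (fingerprint-based, in the workflow described here) has already produced a list of segments saying which stretch of the original video ends up where in the edited version; that segment layout and the function name `retime_captions` are assumptions for illustration, not the iMediaTrans implementation.

```python
def retime_captions(cues, segments):
    """Move captions from original-video time to edited-video time.

    `cues` is a list of (start, end, text) in original-video seconds.
    `segments` is a list of (edited_start, original_start, duration):
    each says that `duration` seconds of the original, beginning at
    `original_start`, appear in the edit beginning at `edited_start`.
    Cues that fall in removed material are dropped; cues that straddle
    a cut are clipped to the part that survives.
    """
    retimed = []
    for edited_start, original_start, duration in segments:
        original_end = original_start + duration
        shift = edited_start - original_start
        for start, end, text in cues:
            clipped_start = max(start, original_start)
            clipped_end = min(end, original_end)
            if clipped_start < clipped_end:
                retimed.append((clipped_start + shift, clipped_end + shift, text))
    return sorted(retimed)


cues = [(1.0, 3.0, "Intro"), (10.0, 12.0, "Removed scene"), (20.0, 23.0, "Finale")]
# The edit keeps original 0-5 s as is, then jumps to original 18-28 s.
segments = [(0.0, 0.0, 5.0), (5.0, 18.0, 10.0)]
print(retime_captions(cues, segments))
```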

The cloud also offers an API that delivers WebVTT subtitles or other caption formats to any OTT device. This means that rather than uploading your captions to the video hosting service manually, you can develop your OTT player to access the captions directly from the iMediaTrans Cloud. This frees your OTT operations from caption-related work and is much more efficient when servicing multiple OTT platforms with the same content.

Whether your facility is a small TV station, a single channel or a multi-channel provider that also supports Internet video services, the approach is the same: use the iMediaTrans Cloud as a repository for all of your captions or subtitles, deploy CaptionCast inserters on all of your channels, and export re-timecoded caption files for Internet or offline use. Together, these steps make the captioning process faster and easier than ever. This highly reliable technology is offered at very affordable prices, with free hardware and software usage for customers who sign up with i-Yuno for a certain amount of captioning services per year.

Author’s bio: David Lee is President of i-Yuno Media Group Americas, based in Burbank, California. Lee is a long-time veteran of captioning technology design and of developing new ways to solve an age-old problem.

Sponsored Content
