AV Fingerprinting Helps Detect Lip-Sync Errors

The industry seems to be making some progress in the detection and correction of lip-sync errors, as evidenced by sessions and presentations at the recent AES convention, IEEE BTS Symposium, SMPTE Technical Conference, and ATSC seminar on loudness.

Making accurate in-service measurements on a variety of dynamic program material as it's being played out and aired has been tricky. Emerging audio/video fingerprinting technology may hold the key to a broad range of solutions.

ANALYZING THE DATA

In general, for this application, fingerprinting or correlation techniques involve comparing two signals: a reference source known to have no lip-sync error, and a second signal taken from somewhere down the signal chain.

The second signal can be picked up at many different places along the signal chain, up to the final output of master control and beyond, to an off-air, off-cable, off-satellite, or off-fiber feed received with a professional integrated receiver decoder (IRD) or a consumer set-top box.

This way, each subsystem can be checked for lip-sync errors, which would go a long way toward preventing errors from accumulating as a signal passes from one subsystem to the next.

Starting with the known good source, a fingerprinting algorithm analyzes the audio and the video and extracts their characteristics, called a fingerprint, correlation data or A/V signature, depending on which company you're talking to.

The fingerprint data is unique for each video frame and for each specified block of time of audio. Depending on the system making the fingerprinting measurements, audio can be analyzed channel by channel, as a group such as stereo or 5.1, or by selected channels.
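To make the idea of a per-frame and per-block fingerprint concrete, here is a minimal toy sketch in Python. The features are assumptions chosen only for illustration (a coarse luma grid for video, rising or falling sub-block energy for audio); the vendors' actual algorithms are proprietary and are not described in this article, and the function names are hypothetical.

```python
from typing import List, Sequence


def video_fingerprint(frame: Sequence[Sequence[float]], grid: int = 2) -> int:
    """Toy per-frame fingerprint (assumed feature, not a vendor algorithm).

    Split the luma frame into grid x grid regions and emit one bit per region:
    1 if the region's mean luma is above the frame's overall mean, else 0.
    """
    rows, cols = len(frame), len(frame[0])
    overall = sum(sum(row) for row in frame) / (rows * cols)
    bits = 0
    for gy in range(grid):
        for gx in range(grid):
            r0, r1 = gy * rows // grid, (gy + 1) * rows // grid
            c0, c1 = gx * cols // grid, (gx + 1) * cols // grid
            region = [frame[y][x] for y in range(r0, r1) for x in range(c0, c1)]
            bits = (bits << 1) | int(sum(region) / len(region) > overall)
    return bits


def audio_fingerprints(samples: Sequence[float], block: int, sub: int = 4) -> List[int]:
    """Toy per-block audio fingerprints (assumed feature, not a vendor algorithm).

    Each block of `block` samples is split into `sub` sub-blocks; one bit per
    adjacent pair of sub-blocks records whether the energy rises.
    """
    step = block // sub
    prints = []
    for start in range(0, len(samples) - block + 1, block):
        energies = [
            sum(s * s for s in samples[start + i * step:start + (i + 1) * step])
            for i in range(sub)
        ]
        bits = 0
        for prev, cur in zip(energies, energies[1:]):
            bits = (bits << 1) | int(cur > prev)
        prints.append(bits)
    return prints
```

With `grid=2` each video frame yields a 4-bit value and each audio block a 3-bit value. Real systems use much richer features so that the values are effectively unique per frame or block.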

Farther down the signal chain, the destination signal undergoes the same analysis.

If the destination signal is fed into the same analyzer as the source, the correlation data is compared internally. The comparison can yield several results: verification that the two signals indeed carry the same content, the delay from source to destination, and the relative delay between audio and video (the lip-sync error).
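The comparison step can be sketched as a search for the offset at which the destination fingerprint sequence best lines up with the reference, done separately for video and audio; the difference between the two delays is the lip-sync error. This is a simplified sketch under stated assumptions: fingerprints are compared by exact equality (real systems need a tolerant similarity measure), and the sign convention (positive means audio lags video) and the helper names are hypothetical.

```python
from typing import Sequence


def best_lag(ref: Sequence[int], dst: Sequence[int], max_lag: int) -> int:
    """Lag (in fingerprint units, >= 0) at which dst[i + lag] == ref[i] most often.

    Assumes dst is a longer capture that starts no later than ref, so a delayed
    destination shows up as a positive lag. Ties go to the smallest lag.
    """
    best, best_score = 0, -1
    for lag in range(max_lag + 1):
        score = sum(
            1 for i, r in enumerate(ref) if i + lag < len(dst) and dst[i + lag] == r
        )
        if score > best_score:
            best, best_score = lag, score
    return best


def lip_sync_error_ms(
    v_ref: Sequence[int], v_dst: Sequence[int],
    a_ref: Sequence[int], a_dst: Sequence[int],
    frame_ms: float, block_ms: float, max_lag: int,
) -> float:
    """Relative A/V delay; positive means audio lags video (assumed convention)."""
    video_delay = best_lag(v_ref, v_dst, max_lag) * frame_ms
    audio_delay = best_lag(a_ref, a_dst, max_lag) * block_ms
    return audio_delay - video_delay
```

For example, if the video is delayed two 40 ms frames (80 ms) and the audio three 10 ms blocks (30 ms), audio leads video by 50 ms and the function returns -50.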

If the source and destination signals are physically distant, a fingerprint analyzer is required at each location. The correlation data, generally a low bit-rate data stream, then needs to travel from the source to the destination by some means, such as an IP path over a LAN or WAN, or a satellite data link. In the systems available now or in research, the timing of the path for the correlation data isn't critical.
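One way the path timing can be made non-critical is for each fingerprint to carry the index of the frame or block it describes, so the receiver reorders data by index rather than by arrival time. The packet layout below is a hypothetical format invented for illustration, not any manufacturer's or standard's wire format.

```python
import struct
from typing import Dict, Iterable, List

# Hypothetical layout: 1-byte stream kind (b"V" or b"A"), 4-byte frame/block
# index, 4-byte fingerprint, big-endian with no padding (9 bytes total).
PACKET = struct.Struct(">cII")


def pack(kind: bytes, index: int, fingerprint: int) -> bytes:
    """Serialize one fingerprint together with the index it describes."""
    return PACKET.pack(kind, index, fingerprint)


def reassemble(packets: Iterable[bytes]) -> Dict[bytes, List[int]]:
    """Rebuild ordered fingerprint sequences from packets that may arrive late
    or out of order. Ordering comes from the embedded index, not arrival time;
    missing indices are simply absent from the result."""
    by_kind: Dict[bytes, Dict[int, int]] = {}
    for p in packets:
        kind, index, fp = PACKET.unpack(p)
        by_kind.setdefault(kind, {})[index] = fp
    return {k: [v[i] for i in sorted(v)] for k, v in by_kind.items()}
```

At an assumed 30 frames/s, 9 bytes per video frame is 9 × 8 × 30 = 2,160 bit/s, which illustrates why the correlation data stream can be low bit-rate.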

If a lip-sync error is detected between source and destination, the system detecting it can do one of two things: alert an operator that an error exists so the operator can choose how to correct it, or control equipment to make an automatic delay correction to either the audio or the video, whichever needs it.
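The two responses can be sketched as a small decision function. Because a delay line can only add delay, an automatic correction delays whichever signal arrives early. The tolerance value, the sign convention (positive error means audio lags video) and the names are assumptions for illustration; no particular tolerance is specified in this article.

```python
from dataclasses import dataclass


@dataclass
class Action:
    alert: bool            # notify an operator
    delay_audio_ms: float  # extra delay to add to the audio path
    delay_video_ms: float  # extra delay to add to the video path


def decide(error_ms: float, tolerance_ms: float, auto_correct: bool) -> Action:
    """Choose a response to a measured lip-sync error.

    error_ms > 0 means audio lags video (assumed convention).
    """
    if abs(error_ms) <= tolerance_ms:
        return Action(False, 0.0, 0.0)          # within tolerance: do nothing
    if not auto_correct:
        return Action(True, 0.0, 0.0)           # alert only; operator decides
    if error_ms > 0:
        return Action(True, 0.0, error_ms)      # audio late, so delay video
    return Action(True, -error_ms, 0.0)         # audio early, so delay audio
```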

With fingerprinting technology, the source and destination signals themselves aren't changed, unlike some watermarking techniques, in which timing codes are inserted into the source video.

Another advantage of fingerprinting is that the correlation data is derived from the content itself, not from the format of a signal. This allows an HD 5.1 surround sound signal leaving master control to be compared with an NTSC stereo signal from a consumer set-top box.
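A minimal sketch of how a content-derived feature can ignore format: fold all channels into one mono signal, measure energy over fixed blocks of time (not samples), and normalize the level. A 5.1 signal and a stereo signal carrying the same program, or the same audio at two sample rates, then yield the same feature sequence. The plain channel average and the energy envelope are assumptions for illustration, not the method of any product described here; video would be treated similarly by reducing each frame to a coarse, resolution-independent grid (not shown).

```python
from typing import List, Sequence


def downmix(channels: Sequence[Sequence[float]]) -> List[float]:
    """Average all channels into one mono signal (a simple, assumed downmix)."""
    n = len(channels)
    return [sum(frame) / n for frame in zip(*channels)]


def energy_envelope(mono: Sequence[float], rate_hz: int, block_ms: int) -> List[float]:
    """Mean energy per fixed-duration block, so the feature grid is defined in
    time rather than samples and is comparable across sample rates."""
    block = rate_hz * block_ms // 1000
    return [
        sum(s * s for s in mono[i:i + block]) / block
        for i in range(0, len(mono) - block + 1, block)
    ]


def normalize(env: Sequence[float]) -> List[float]:
    """Scale to a peak of 1.0 so overall level differences drop out."""
    peak = max(env) or 1.0
    return [e / peak for e in env]
```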

A potential disadvantage of fingerprinting, at least as currently implemented, is that each manufacturer's algorithms are proprietary, and patented or patent-pending. That means a correlation data stream from one manufacturer's product is not compatible with another's fingerprinting analyzer engine. This current lack of interoperability could impede widespread implementation. However, SMPTE has heard and answered the call for standards in this area, with the SMPTE 22TV Lip Sync Ad Hoc Group taking on the task.

FINGERPRINTING AVAILABLE NOW

Fingerprinting technology isn't just theory. It's in practice.

Miranda Technologies offers the HLP-1801, a card in its Densite modular series that performs lip-sync error measurements on two signals: a known source and an unknown destination signal.

"The card has two independent fingerprint generator engines and analysis is done on both signals at the same time," said Marco Lopez, senior vice president of Interfacing at Miranda. "Fingerprinting is done on a field-by-field basis, generating a number that is a unique fingerprint for what the video or audio content is for that field."

The first thing the analyzer checks is that the content is the same for both the source and destination. If it is, it then proceeds to check for lip-sync errors. (If the content is different, there's no point in proceeding with further analysis.)
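The gating logic described above can be sketched as follows: score how well the destination fingerprints match the reference at each candidate offset and only report an offset if the best match clears a threshold. The bitwise similarity, the 32-bit fingerprint width and the 0.9 threshold are assumptions for illustration; Miranda's actual matching criteria are not described in this article.

```python
from typing import Optional, Sequence

NBITS = 32  # assumed fingerprint width in bits


def similarity(a: int, b: int) -> float:
    """Fraction of fingerprint bits that agree (1.0 = identical)."""
    differing = bin((a ^ b) & ((1 << NBITS) - 1)).count("1")
    return 1.0 - differing / NBITS


def content_match(ref: Sequence[int], dst: Sequence[int], max_lag: int,
                  threshold: float = 0.9) -> Optional[int]:
    """Return the best-matching lag if the content is the same, else None.

    Returning None means the lip-sync measurement should not proceed.
    """
    best_lag: Optional[int] = None
    best_score = 0.0
    for lag in range(max_lag + 1):
        if lag + len(ref) > len(dst):
            break
        score = sum(similarity(r, dst[lag + i]) for i, r in enumerate(ref)) / len(ref)
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag if best_score >= threshold else None
```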

The HLP-1801 can be tied in with Miranda's iControl, an SNMP-based multichannel play-out monitoring system, which can receive the card's error traps and act on them, for example by alerting an operator or making a delay adjustment on another Miranda processing card. The HLP-1801 analyzes up to 16 audio channels.

"Every channel gets its own fingerprint. That way we can measure any phase shift between channels," Lopez said. "Accuracy of the audio measurement is [+/–] one millisecond."
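Measuring each channel separately means a per-channel delay can be estimated and the offsets between channels compared. For illustration, this sketch swaps in plain sample-level cross-correlation in place of a fingerprint match (the card's per-channel method is not published). At 48 kHz, a 1 ms accuracy corresponds to 48 samples. Channel names, the anchor channel and the function names are hypothetical.

```python
from typing import Dict, Sequence


def xcorr_lag(ref: Sequence[float], dst: Sequence[float], max_lag: int) -> int:
    """Lag in samples (>= 0) that maximizes sum(ref[i] * dst[i + lag]).
    Ties go to the smallest lag."""
    def score(lag: int) -> float:
        return sum(r * dst[i + lag] for i, r in enumerate(ref) if i + lag < len(dst))
    return max(range(max_lag + 1), key=score)


def channel_delays_ms(ref: Dict[str, Sequence[float]], dst: Dict[str, Sequence[float]],
                      rate_hz: int, max_lag: int) -> Dict[str, float]:
    """Source-to-destination delay of each channel, in milliseconds."""
    return {name: xcorr_lag(ref[name], dst[name], max_lag) * 1000.0 / rate_hz
            for name in ref}


def inter_channel_offsets_ms(delays: Dict[str, float], anchor: str) -> Dict[str, float]:
    """Delay of each channel relative to an anchor channel (e.g. left)."""
    return {name: d - delays[anchor] for name, d in delays.items()}
```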

The card can also be used for audio or video presence detection, among other functions.

Within the next six months, Miranda expects to launch a version of iControl that supports multipoint, multisite lip-sync monitoring, allowing a network, for example, to monitor return feeds from many affiliates.

The Evertz IntelliTrak program video and audio lip-sync analyzer time-slices the audio and video signals, "and does IntelliTrak math and generates correlation data," said Tony Zare, product manager at Evertz. "It looks for characteristics about audio and video content and generates a number that represents a video frame and audio frame in time. From that information the IntelliTrak algorithm then determines delays through the signal chain. This is accomplished without any watermarking of the video and audio, thereby is referred to as a completely non-intrusive system."

IntelliTrak can treat the audio in different selectable ways. It can analyze 5.1 surround as a single entity, or look at discrete channels where it compares left with left, right with right, for example, and generate lip-sync errors for each.

The system can also compare a Dolby E signal with another Dolby E, and AC-3 with AC-3. According to Zare, IntelliTrak has a measurement accuracy of less than one millisecond.

One application for the technology is to use a facility routing switcher to route two signals into an IntelliTrak device and measure the lip-sync error between those two points. Another application is to use IntelliTrak at a mobile truck venue to generate correlation data right at the source, and then transmit the data to a facility via an IP link.

IntelliTrak, developed by the company's own research and development group, is built as a software core that can be incorporated into a portfolio of Evertz products, such as frame synchronizers, distribution amplifiers, routing switchers, and multi-image display systems.

Every IntelliTrak module has an SNMP trap, according to Zare, so it can be tied into a monitoring system like the Evertz VistaLINK network management system for notification or correction of lip-sync errors.

If an error is detected, what to do?

"If you want to use the system to make a quick fix [automatic correction], the system will do it, but that's not the real solution," Zare said. "The real power is to use it to pinpoint where the offending problem is." Zare said that he's done demos in facilities where there were no perceived lip-sync errors, yet IntelliTrak found them.

Dolby has been researching fingerprinting technology for the last four years or so. There are no products yet, but a paper, "Detection and Correction of Lip-Sync Errors Using Audio and Video Fingerprints," along with some experimental test results, was presented at the SMPTE Technical Conference by Kent Terry and Regunathan Radhakrishnan of Dolby Laboratories.

The technology described in the paper is designed to work with the kinds of signal processing typically used in a normal broadcast chain, like MPEG compression, aspect ratio conversion, and sample rate conversion, but not with the more extensive processing done in production. It can also be applied to file-based systems.

As is typical of fingerprinting systems for this application, the technology compares two signals.

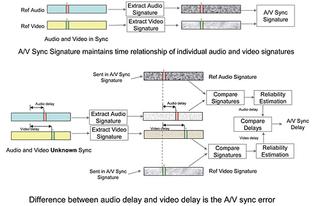

Fig. 1 © Courtesy Dolby Laboratories

The first step is to "measure and generate an audio video synchronization signature from a point where you have correct synchronization," said Kent Terry, senior staff engineer, Dolby Laboratories. "From then on, measure at points downstream and meter or correct [any lip-sync errors]." The system as described by Dolby has a lip-sync accuracy of +/– 10 milliseconds.

The A/V sync signature is a representation of features extracted from the audio and video content, and it needs to be transmitted in some way from the reference signal analyzer to the downstream signal analyzer (see Fig. 1). An IP link will do. One of its advantages is that the signature data doesn't have to be attached directly to the audio or video content.

The technology as presented by Dolby also incorporates a means of predicting how reliable the generated lip-sync error value is.
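The article does not say how Dolby predicts reliability, so the following is only a generic, assumed approach: compare the best match score with the best score found away from the peak. A single sharp peak suggests a trustworthy measurement, while two near-equal peaks suggest ambiguity. The `guard` parameter, which ignores lags adjacent to the peak because neighboring lags naturally score high, and the function name are hypothetical.

```python
from typing import Sequence, Tuple


def estimate_with_confidence(scores: Sequence[float], guard: int = 1) -> Tuple[int, float]:
    """Given match scores indexed by candidate lag, return (best_lag, confidence).

    confidence = 1 - runner_up / best, where runner_up is the highest score more
    than `guard` lags away from the peak (0.0 = ambiguous, 1.0 = unique peak).
    """
    best = max(range(len(scores)), key=lambda k: scores[k])
    top = scores[best]
    others = [s for k, s in enumerate(scores) if abs(k - best) > guard]
    runner_up = max(others) if others else 0.0
    if top <= 0:
        return best, 0.0
    return best, max(0.0, 1.0 - runner_up / top)
```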

As fingerprinting technology develops and is implemented, and as standards for interoperability are written and adhered to, the future looks brighter for solving those annoying lip-sync problems. And yet, such technology shouldn't be considered a substitute for good system design, maintaining correct MPEG timestamps, paying attention to details and closely monitoring signals.

Mary C. Gruszka is a systems design engineer, project manager, consultant and writer based in the New York metro area. She can be reached via TV Technology.
